NVIDIA Forum TensorRT

Currently the project includes pre-trained models for human pose estimation capable of running in real time on Jetson Nano.

... Food and Drug Administration to market their SubtleMR image processing software. With TensorRT, you can optimize neural network models trained ... This TensorRT 7.2.1 Developer Guide demonstrates how to use the C++ and Python APIs for implementing the most common deep learning layers.

Although TensorRT has a unary layer, the UFF parser doesn't support it. 1. It seems that when the width of the input is not equal to its height, the output is wrong. Achieve superhuman NLU accuracy in real time with BERT-Large inference in just 5.8 ms on NVIDIA T4 GPUs through new optimizations. The Developer Guide shows how you can take an existing model built with a deep learning framework and use it to build a TensorRT engine using the provided parsers.
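As a rough illustration of building an engine from an existing model through a parser, here is a minimal sketch that assumes the model has already been exported to ONNX and that the TensorRT 7.x Python API is installed. The function name `build_engine_from_onnx` and the default workspace size are hypothetical choices for this example, and newer TensorRT releases have changed or deprecated some of these calls (for example `build_engine` and `max_workspace_size`), so treat it as a starting point rather than the guide's own code.

```python
def build_engine_from_onnx(onnx_path, workspace_bytes=1 << 28):
    """Parse an ONNX file and build a TensorRT engine (TensorRT 7.x-style API).

    Hypothetical helper for illustration; not taken from the Developer Guide.
    """
    # Imported lazily so this module can be loaded on machines without TensorRT.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)

    # The ONNX parser requires an explicit-batch network definition.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    # Populate the network definition from the serialized ONNX model.
    with open(onnx_path, "rb") as f:
        parsed_ok = parser.parse(f.read())
    if not parsed_ok:
        errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
        raise RuntimeError("ONNX parse failed: " + "; ".join(errors))

    # Builder configuration: scratch memory available to layer implementations.
    config = builder.create_builder_config()
    config.max_workspace_size = workspace_bytes

    engine = builder.build_engine(network, config)
    if engine is None:
        raise RuntimeError("TensorRT engine build failed")
    return engine
```

Calling `build_engine_from_onnx("model.onnx")` (a placeholder path) would return an engine object on a machine with a supported GPU; the build step can take from seconds to minutes depending on the model.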

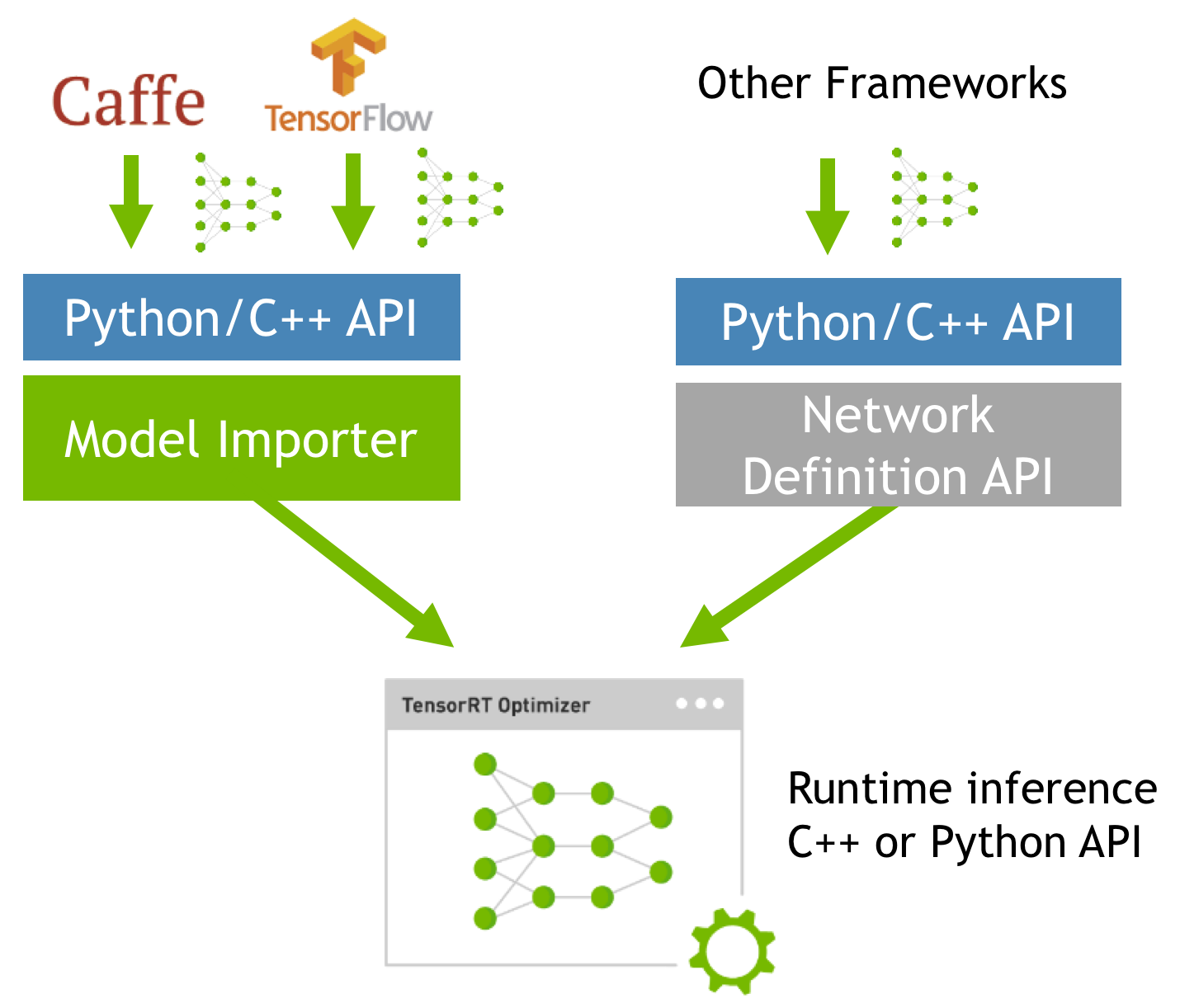

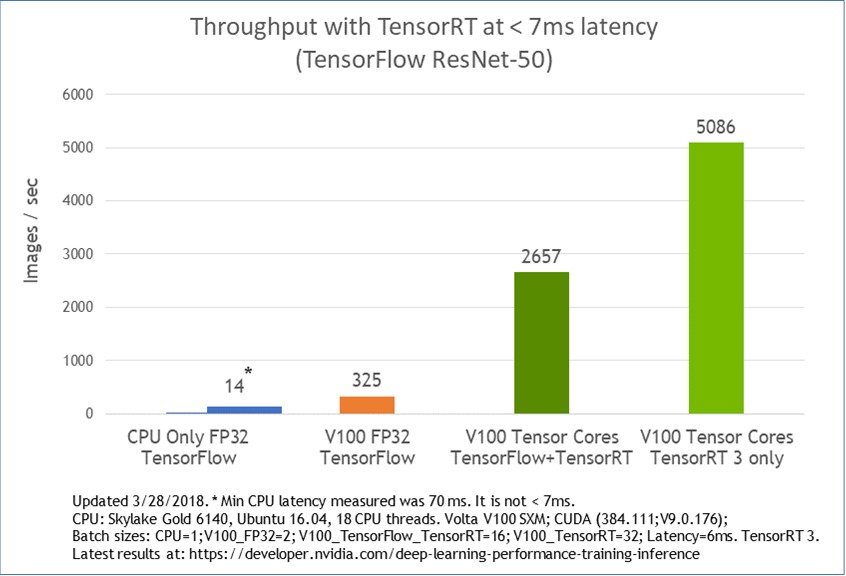

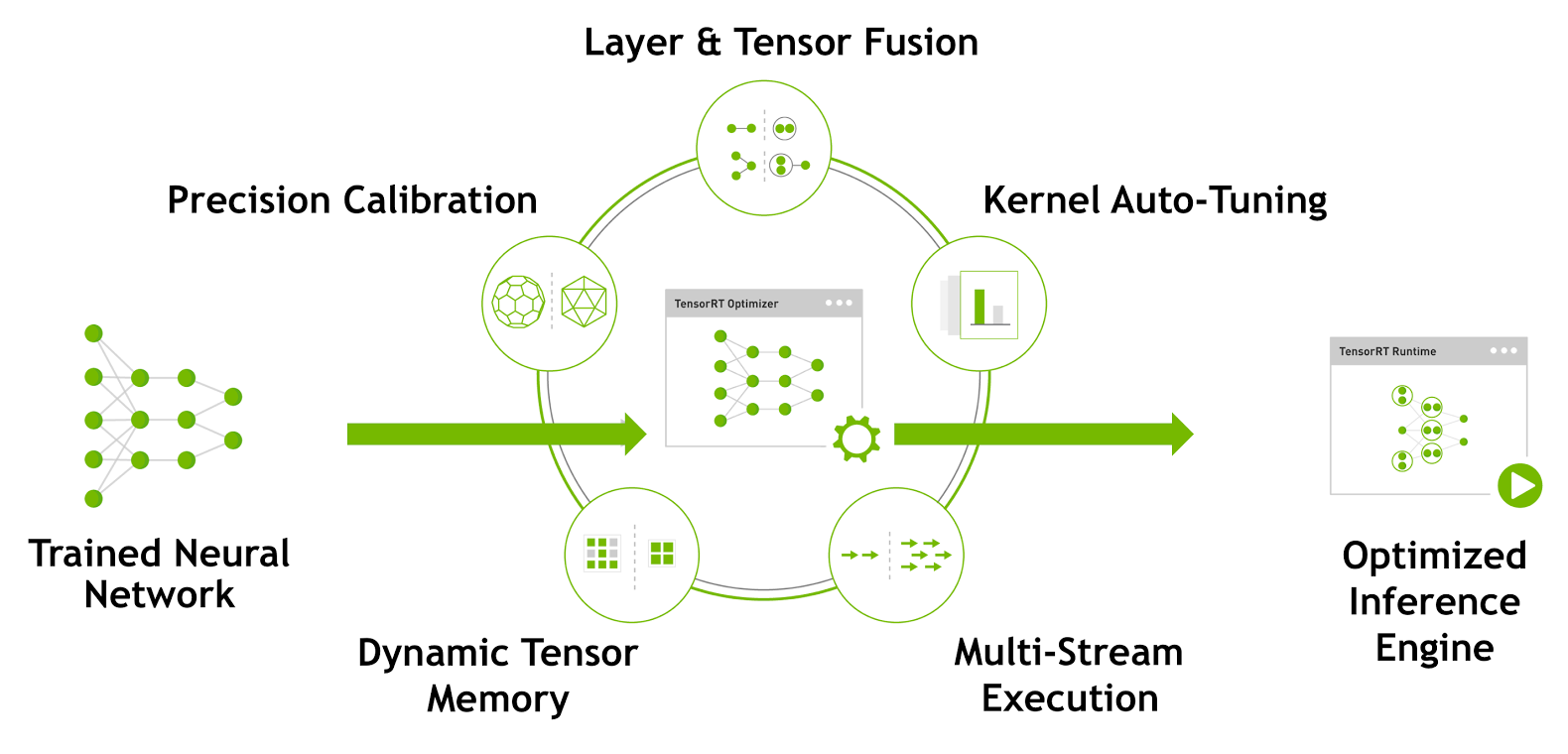

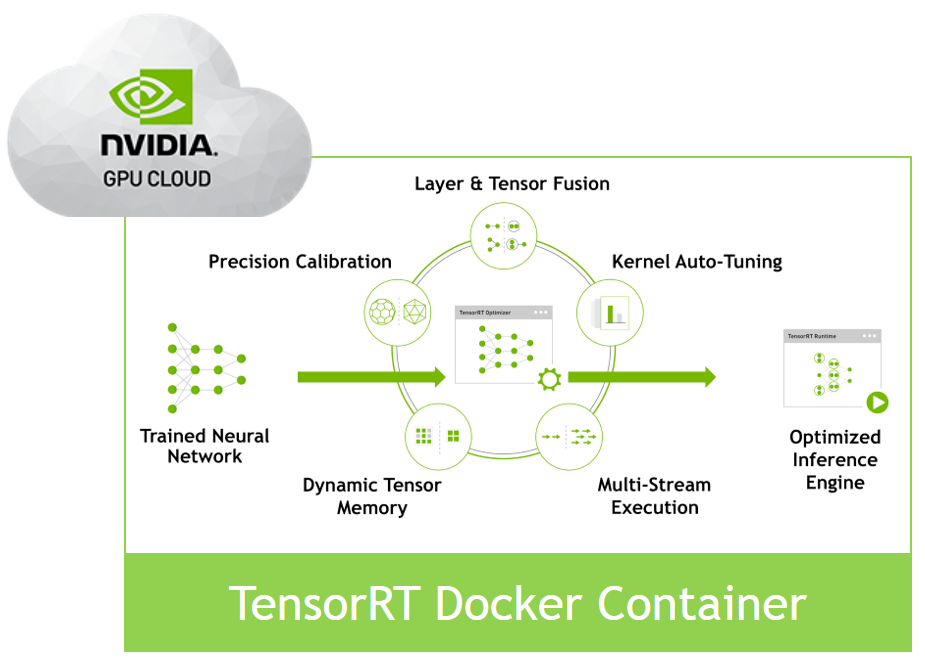

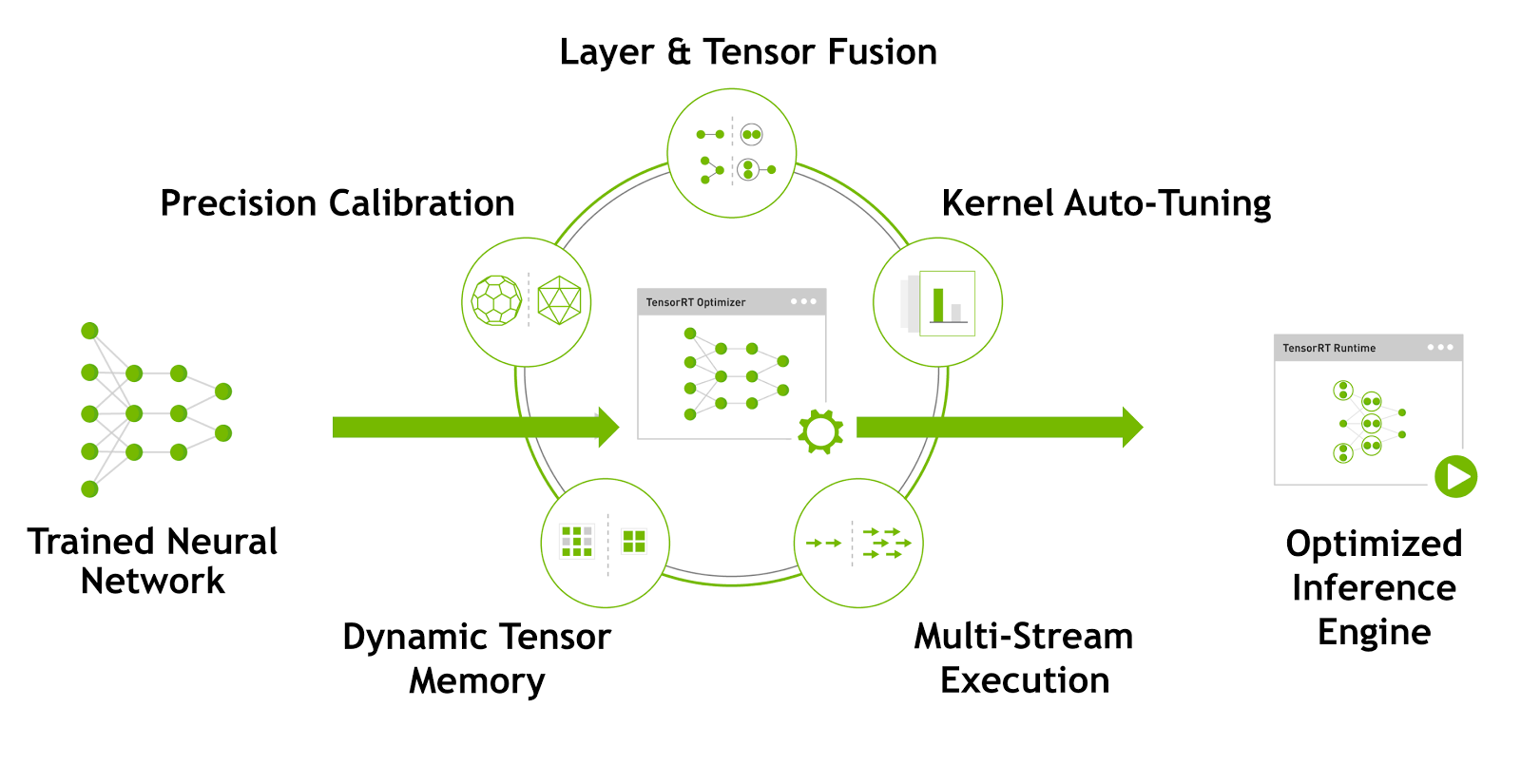

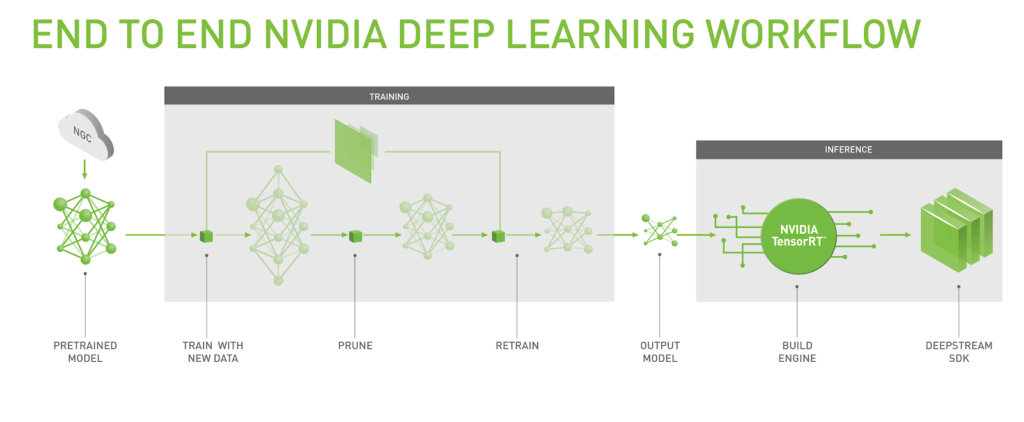

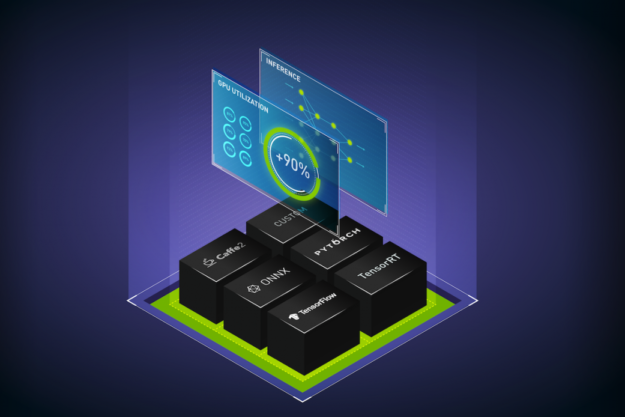

NVIDIA TensorRT is an SDK for high-performance deep learning inference. It includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for deep learning inference applications. TensorRT Open Source Software: included are the sources for TensorRT plugins and parsers (Caffe and ONNX), as well as sample applications demonstrating usage and capabilities of the TensorRT platform.

Posted by Nadeem Mohammad, Oct 16, 2019 (tagged: AI, deep learning, computer vision, machine vision, cuDNN, healthcare and life sciences, machine learning, artificial intelligence, NVIDIA Inception, TensorRT): Subtle Medical, a member of NVIDIA's startup accelerator Inception, received approval today from the U.S. Food and Drug Administration. The Developer Guide also provides step-by-step instructions for common user tasks such as creating a ... trt_pose is aimed at enabling real-time pose estimation on NVIDIA Jetson. You may find it useful for other NVIDIA platforms as well.
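Pose estimation models of this kind generally report one position per body keypoint. The sketch below shows one way to attach names to such output. It assumes the standard 17-keypoint COCO ordering and an `(x, y, score)` tuple per keypoint; both are assumptions for illustration, and trt_pose's own topology and output format may differ. The helper `name_keypoints` is hypothetical.

```python
# Standard COCO keypoint order (assumed here; a given model may use another).
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def name_keypoints(points, min_score=0.5):
    """Map (x, y, score) tuples, given in keypoint order, to {name: (x, y)}.

    Keypoints whose score is below min_score are treated as not detected.
    """
    if len(points) != len(COCO_KEYPOINTS):
        raise ValueError("expected %d keypoints, got %d"
                         % (len(COCO_KEYPOINTS), len(points)))
    return {
        name: (x, y)
        for name, (x, y, score) in zip(COCO_KEYPOINTS, points)
        if score >= min_score
    }


# Usage: only the left eye (index 1) and right ankle (index 16) are confident.
example = [(0.0, 0.0, 0.0)] * 17
example[1] = (0.42, 0.18, 0.93)
example[16] = (0.55, 0.97, 0.81)
detected = name_keypoints(example)
```

Filtering by score keeps low-confidence keypoints out of downstream logic, such as checking whether a particular joint is visible.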

TensorRT-based applications perform up to 40x faster than CPU-only platforms during inference. This makes it easy to detect features like left eye, left elbow, right ankle, etc. TensorRT docs, section 2.3.2.2.4, Supported TensorFlow Operations: Negative, Abs, Sqrt, Rsqrt, Pow, Exp, and Log are converted into a UFF Unary layer. NVIDIA rep ... TensorRT applies graph optimizations and layer fusion, among other optimizations, while also finding the fastest implementation of that model, leveraging a diverse collection of ...
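To give a feel for what layer fusion means, here is a conceptual sketch in plain Python, not TensorRT's implementation. It compares an elementwise scale-and-shift layer followed by a separate ReLU layer against a single fused pass that computes the same result without the intermediate buffer. All function names are hypothetical.

```python
def scale_shift(xs, scale, shift):
    """Layer 1: elementwise affine transform; produces an intermediate list."""
    return [scale * x + shift for x in xs]


def relu(xs):
    """Layer 2: elementwise ReLU over the intermediate list."""
    return [max(0.0, x) for x in xs]


def unfused(xs, scale, shift):
    """Two separate passes over the data, with an intermediate buffer."""
    return relu(scale_shift(xs, scale, shift))


def fused_scale_shift_relu(xs, scale, shift):
    """One pass: both operations are applied per element, no intermediate."""
    return [max(0.0, scale * x + shift) for x in xs]


xs = [-2.0, -0.5, 0.0, 1.5]
# The fused version must be numerically identical to the layer-by-layer one.
assert unfused(xs, 2.0, 1.0) == fused_scale_shift_relu(xs, 2.0, 1.0)
```

On a GPU, the benefit of this kind of fusion comes mainly from launching fewer kernels and avoiding a round trip through memory for the intermediate tensor. The Python version only demonstrates that the two formulations are equivalent.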