NVIDIA CUDA Kubernetes

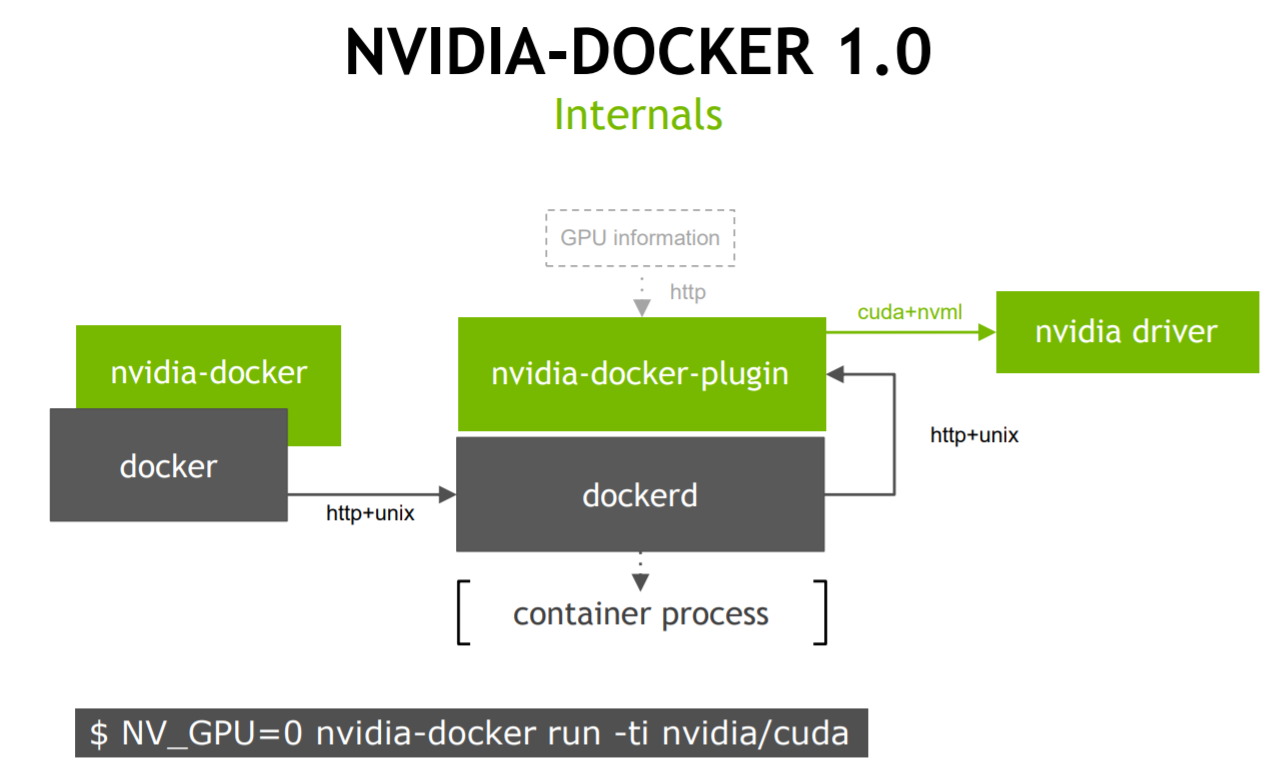

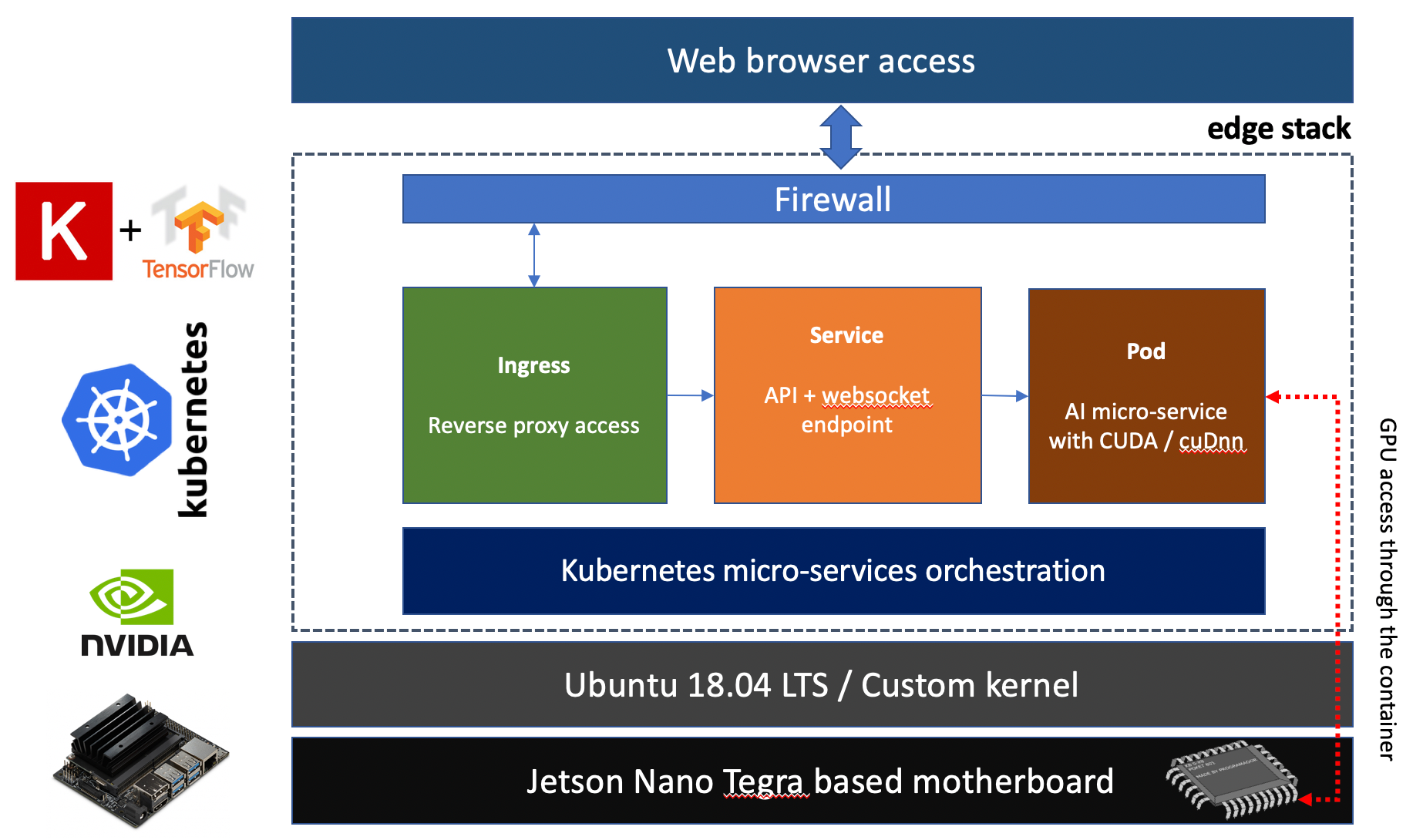

The NVIDIA container runtime must be configured as the default runtime for Docker, replacing runc.
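A minimal sketch of that configuration step, written as a small Python helper that produces the contents of a Docker daemon config. It assumes the runtime binary is installed at `/usr/bin/nvidia-container-runtime` and that the config lives in `/etc/docker/daemon.json`; both are common defaults, not something stated above. The function name `with_nvidia_default_runtime` is hypothetical.

```python
import json


def with_nvidia_default_runtime(config: dict) -> dict:
    """Return a copy of a Docker daemon config that uses nvidia as the default runtime."""
    updated = dict(config)  # shallow copy so the caller's dict is not mutated
    runtimes = dict(updated.get("runtimes", {}))
    # Assumed install path of the runtime binary; adjust for your system.
    runtimes["nvidia"] = {
        "path": "/usr/bin/nvidia-container-runtime",
        "runtimeArgs": [],
    }
    updated["runtimes"] = runtimes
    # Docker falls back to runc unless a default runtime is named explicitly.
    updated["default-runtime"] = "nvidia"
    return updated


if __name__ == "__main__":
    # Print the JSON that would be written to the daemon config file.
    print(json.dumps(with_nvidia_default_runtime({}), indent=4))
```

The helper preserves any existing keys, so it can be applied to a config that already sets other daemon options. Docker has to be restarted before a changed daemon config takes effect.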

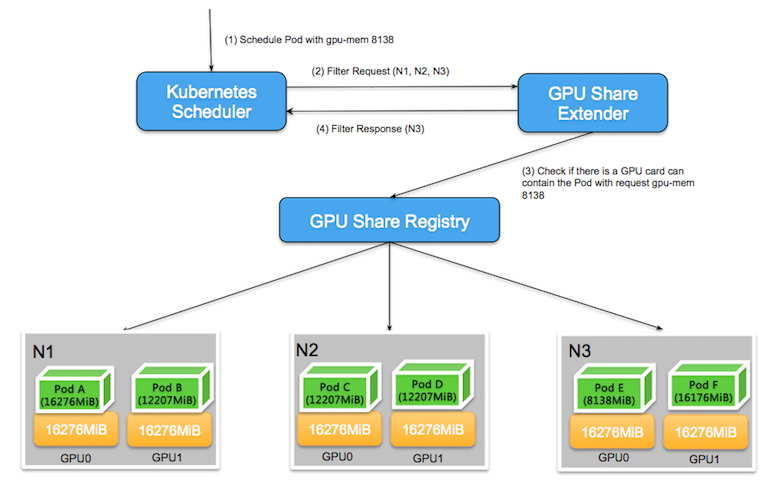

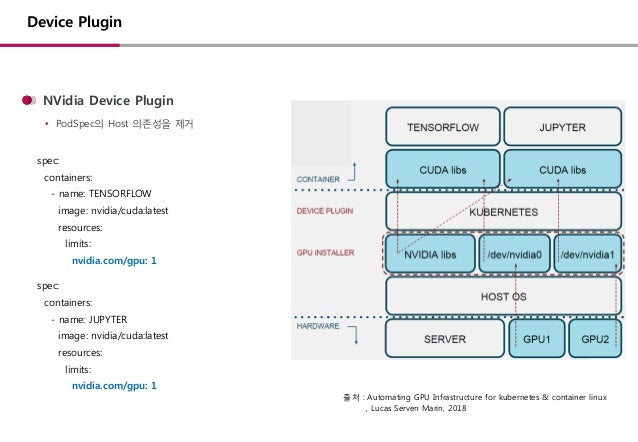

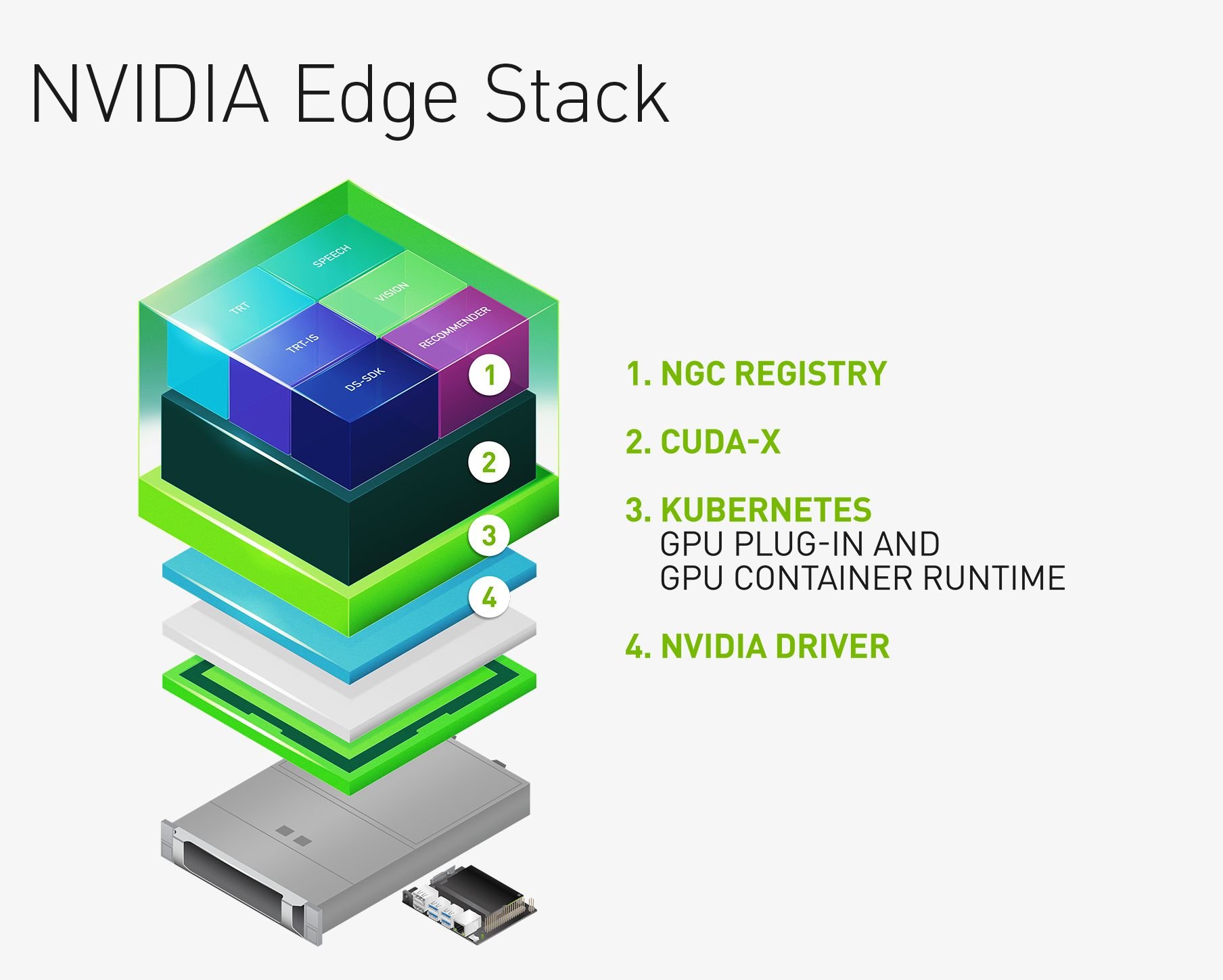

Kubernetes version 1.10, kubeadm installation. These components include the NVIDIA drivers (to enable CUDA), the Kubernetes device plugin for GPUs, the NVIDIA container runtime, automatic node labelling, DCGM-based monitoring, and others. Kubernetes nodes have to be pre-installed with nvidia-docker 2.0. Kubernetes on NVIDIA GPUs lets you automate the deployment, maintenance, scheduling, and operation of multiple GPU-accelerated application containers across clusters of nodes.

Kubernetes version 1.10. Docker configured with nvidia as the default runtime. The NVIDIA GPU device plugin used by GCE does not require nvidia-docker and should work with any container runtime that implements the Kubernetes CRI. This implementation has been tested on Container-Optimized OS, and from version 1.9 onward there will be ... for Ubuntu.

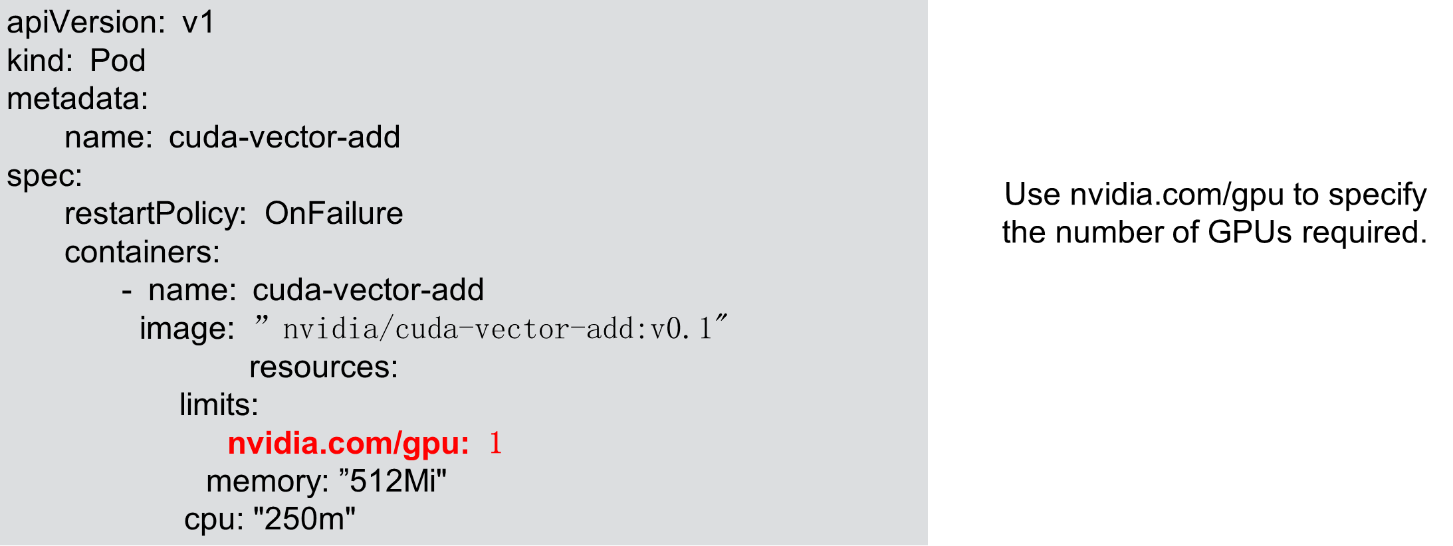

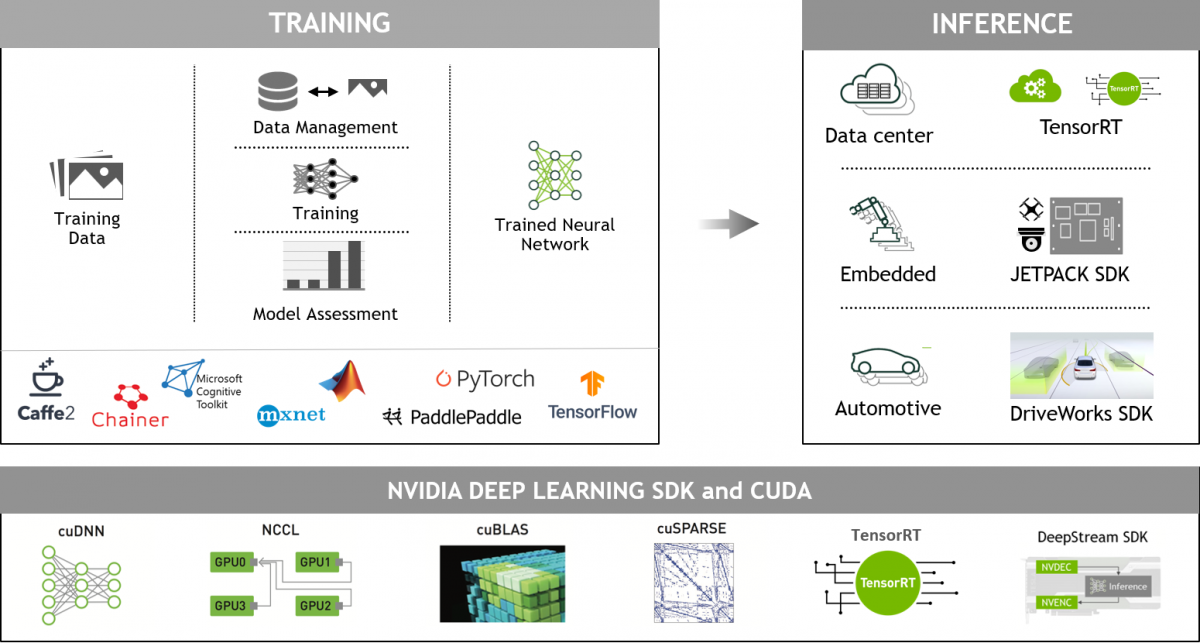

Kubernetes on NVIDIA GPUs enables enterprises to scale up training and inference deployment to multi-cloud GPU clusters seamlessly. The kubelet must use Docker as its container runtime. Quick start: preparing your GPU nodes. Kubernetes nodes have to be pre-installed with NVIDIA drivers.

The NVIDIA GPU device plugin used in GCE. Kubernetes on NVIDIA GPUs installation guide; NVIDIA device plugin for Kubernetes; upgrading to the NVIDIA Container Runtime for Docker; schedule GPUs. If you are on a DGX Station, consider updating as per the DGX OS Desktop v3.1.6 release notification; for DGX-1, refer to the DGX OS Server v2.1.3 and v3.1.6 release notification. According to the AWS G2 documentation, g2.8xlarge servers have the following resources. With the increasing number of AI-powered applications and services and the broad availability of ...
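To illustrate what scheduling a GPU looks like once the device plugin is running, here is a hedged sketch that builds a pod manifest requesting GPUs. It assumes the NVIDIA device plugin is deployed and advertises the `nvidia.com/gpu` resource. The helper name `gpu_pod_manifest`, the pod name, and the image in the usage note are placeholders chosen for illustration.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal pod manifest whose single container requests `gpus` GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested as an extended resource under limits;
                    # the resource name assumes the NVIDIA device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, for illustration only.
    print(json.dumps(gpu_pod_manifest("cuda-test", "nvidia/cuda", gpus=1), indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, which accepts JSON as well as YAML. The scheduler should then place the pod only on a node with enough unallocated GPUs.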

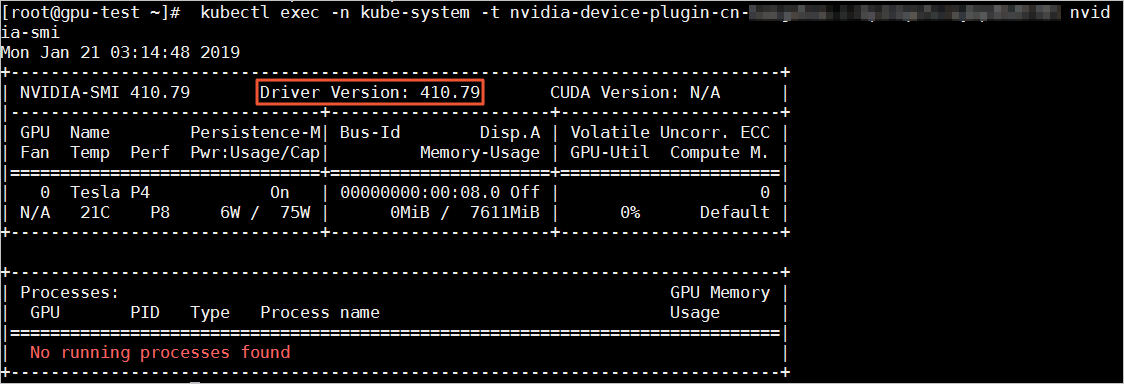

This README assumes that the NVIDIA drivers and nvidia-docker have been installed. The following steps need to be executed on all your GPU nodes. Prerequisites: nvidia-docker version 2.0 (see how to install it and its prerequisites) and Docker configured with nvidia as the default runtime. CUDA Device Query (Runtime API) version (CUDART static linking). Detected 1 CUDA Capable device(s). Device 0:

The NVIDIA GPU Operator uses the operator framework within Kubernetes to automate the management of all NVIDIA software components needed to provision the GPU. Four NVIDIA GRID GPUs, each with 1,536 CUDA cores and 4 GB of video memory, and the ability to encode either four real-time HD video streams at 1080p or eight real-time HD video streams at 720p. Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. NVIDIA Tegra X1. CUDA Driver Version / Runtime Version: 10.0 / 10.0. CUDA Capability Major ...