NVIDIA A100 Memory Bandwidth

While the main memory bandwidth has increased on paper from 900 GB/s (V100) to 1,555 GB/s (A100), the speedup factors for the STREAM benchmark routines range between 1.6 and 1.72 for large data sets.
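The gap between the on-paper figure and the measured one is easy to quantify. The following is a minimal sketch that uses only the numbers quoted above; the function names are illustrative and not taken from any benchmark suite.

```python
# Peak bandwidth figures quoted in the text, in GB/s.
V100_PEAK_GBS = 900.0
A100_PEAK_GBS = 1555.0

# Measured STREAM speedup range quoted in the text (A100 over V100).
STREAM_SPEEDUP_RANGE = (1.60, 1.72)


def paper_speedup(old_gbs: float, new_gbs: float) -> float:
    """Ratio of peak bandwidths: the best case for a purely bandwidth-bound kernel."""
    return new_gbs / old_gbs


def fraction_realized(measured: float, paper: float) -> float:
    """Share of the on-paper speedup that a measured speedup achieves."""
    return measured / paper


if __name__ == "__main__":
    paper = paper_speedup(V100_PEAK_GBS, A100_PEAK_GBS)
    print(f"paper speedup: {paper:.3f}x")
    for measured in STREAM_SPEEDUP_RANGE:
        share = fraction_realized(measured, paper)
        print(f"measured {measured:.2f}x is {share:.1%} of the paper speedup")
```

The on-paper ratio comes out at roughly 1.73x, so the measured STREAM range sits just below what the peak numbers alone would suggest.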

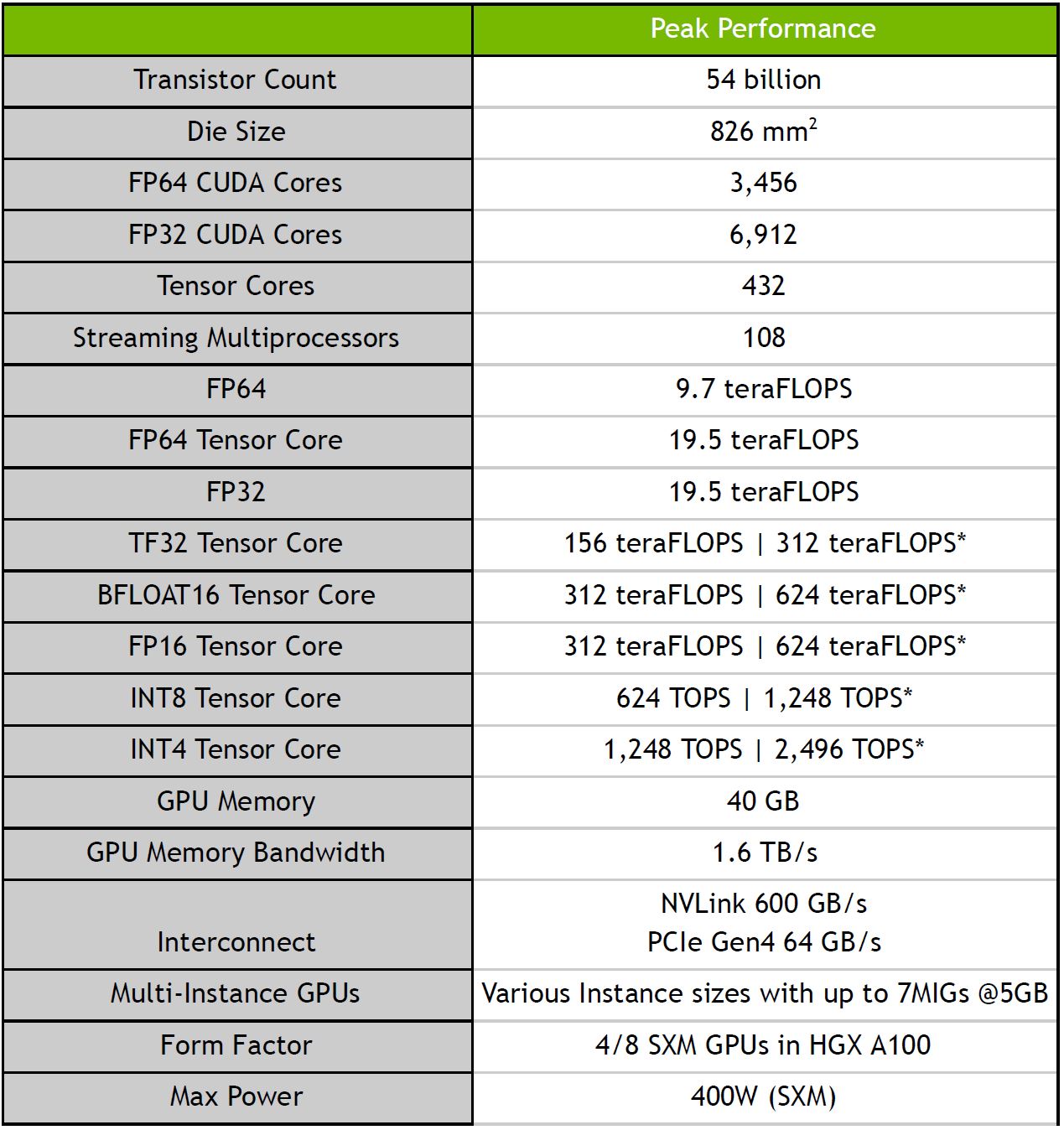

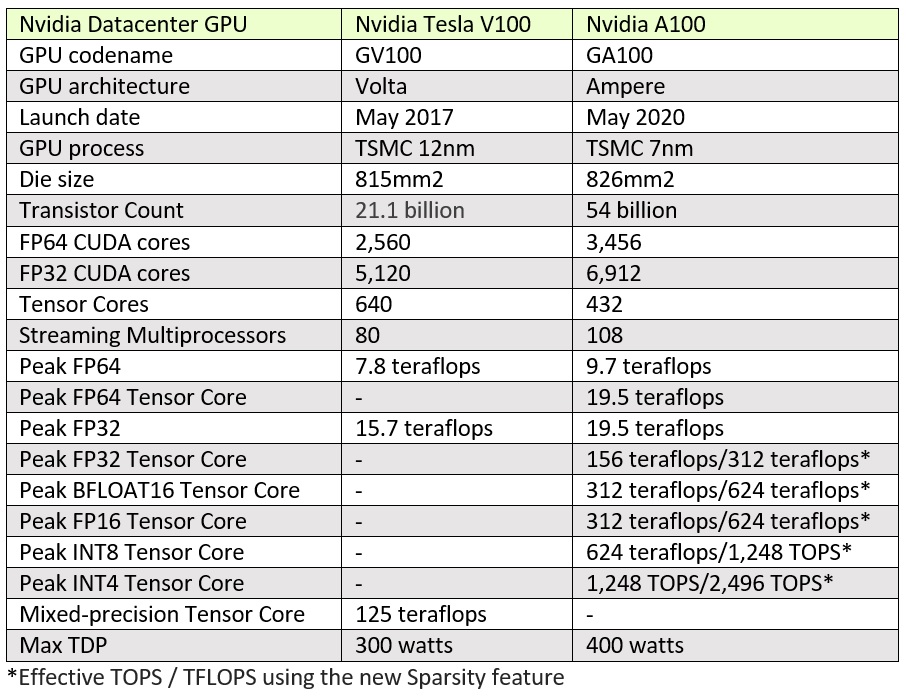

Supplied by Samsung, the HBM2 memory is now 70% faster than the previous version used on the V100 GPU. The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. A100 delivers 1.7x higher memory bandwidth over the previous generation.

MIG technology can partition the A100 GPU into individual instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores, enabling optimized computational resource provisioning and quality of service (QoS). The A100 also offers a huge 1.55 TB/s of memory bandwidth thanks to its 5120-bit memory bus. Lastly, MIG is the new form of concurrency offered by the NVIDIA A100, and it addresses some of the limitations of the other CUDA technologies for running parallel work. For comparison, the Titan V has 653 GB/s of memory bandwidth and 14.9 TFLOPS of FP32 compute.
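The link between bus width and bandwidth can be checked with simple arithmetic. The sketch below assumes that peak bandwidth equals bus width in bytes times the per-pin data rate, and that 1 GB means 10^9 bytes. The per-pin rate is derived from the quoted figures rather than taken from the text, and the helper names are hypothetical.

```python
A100_BUS_WIDTH_BITS = 5120   # quoted in the text
A100_BANDWIDTH_GBS = 1555.0  # quoted in the text
TITAN_V_BANDWIDTH_GBS = 653.0  # quoted in the text


def bytes_per_transfer(bus_width_bits: int) -> float:
    """Bytes moved across the whole bus in one transfer per pin."""
    return bus_width_bits / 8


def implied_pin_rate_gbps(bus_width_bits: int, bandwidth_gbs: float) -> float:
    """Per-pin data rate (Gbit/s) implied by a bus width and a peak bandwidth."""
    return bandwidth_gbs / bytes_per_transfer(bus_width_bits)


def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth (GB/s) from bus width and per-pin data rate (Gbit/s)."""
    return bytes_per_transfer(bus_width_bits) * pin_rate_gbps


if __name__ == "__main__":
    rate = implied_pin_rate_gbps(A100_BUS_WIDTH_BITS, A100_BANDWIDTH_GBS)
    print(f"implied per-pin rate: {rate:.2f} Gbit/s")
    ratio = A100_BANDWIDTH_GBS / TITAN_V_BANDWIDTH_GBS
    print(f"A100 vs Titan V bandwidth: {ratio:.2f}x")
```

Under these assumptions, a 5120-bit bus moves 640 bytes per transfer, so the quoted 1,555 GB/s implies a per-pin rate of a little over 2.4 Gbit/s.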

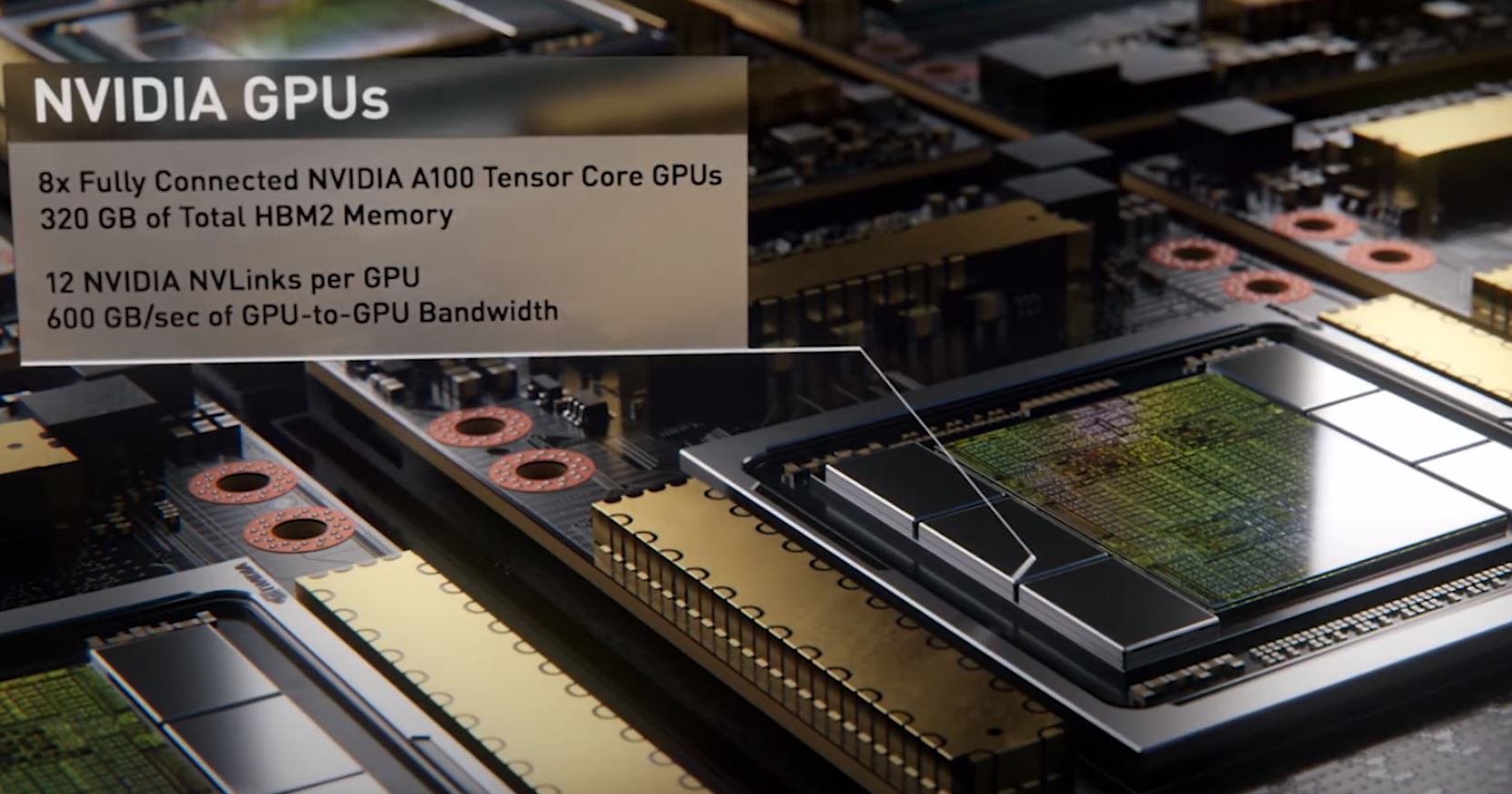

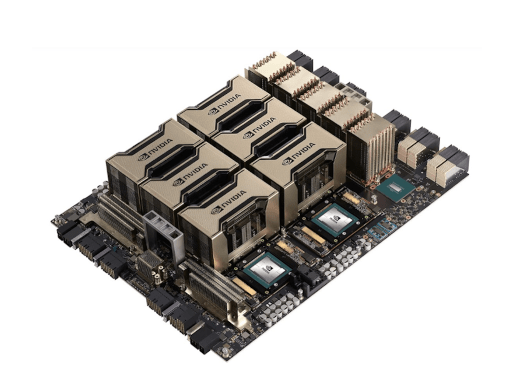

Last but not least, memory capacity has been boosted significantly to 40 GB of HBM2 memory for each A100 GPU, and peak memory bandwidth is now a staggering 1.6 TB/s. To feed its massive computational throughput, the NVIDIA A100 GPU has 40 GB of high-speed HBM2 memory with a class-leading 1,555 GB/s of memory bandwidth, a 73% increase compared to Tesla V100. High-bandwidth memory (HBM2): A100 delivers improved raw bandwidth of 1.6 TB/s as well as higher dynamic random-access memory (DRAM) utilization efficiency at 95 percent.
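To put the capacity and bandwidth figures in proportion, the sketch below estimates the bandwidth that is actually usable and the minimum time needed to read the full 40 GB once. It assumes that the 95 percent DRAM utilization figure can be read as the achievable fraction of peak bandwidth, and that 1 GB means 10^9 bytes. Both are simplifications, and the function names are illustrative.

```python
A100_CAPACITY_GB = 40.0        # quoted in the text
A100_PEAK_GBS = 1555.0         # quoted in the text
A100_DRAM_UTILIZATION = 0.95   # quoted in the text, read here as a fraction of peak


def effective_bandwidth_gbs(peak_gbs: float, utilization: float) -> float:
    """Usable bandwidth if only a fraction of the peak is achievable."""
    return peak_gbs * utilization


def sweep_time_ms(capacity_gb: float, bandwidth_gbs: float) -> float:
    """Lower bound on the time (ms) to read the whole memory once."""
    return capacity_gb / bandwidth_gbs * 1000.0


if __name__ == "__main__":
    eff = effective_bandwidth_gbs(A100_PEAK_GBS, A100_DRAM_UTILIZATION)
    print(f"effective bandwidth: {eff:.0f} GB/s")
    print(f"full sweep at peak:      {sweep_time_ms(A100_CAPACITY_GB, A100_PEAK_GBS):.1f} ms")
    print(f"full sweep at effective: {sweep_time_ms(A100_CAPACITY_GB, eff):.1f} ms")
```

Under these assumptions, a single pass over the whole 40 GB takes on the order of 26 to 27 milliseconds, which is a useful lower bound for any kernel that must touch all of device memory.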

Multi-Instance GPU (MIG): an A100 GPU can be partitioned into as many as seven GPU instances, each fully isolated. With MPS, by contrast, memory bandwidth, caches, and capacity are all shared between MPS clients. As the engine of the NVIDIA data center platform, A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances.

In addition, the A100 GPU has significantly more on-chip memory, including a 40 MB Level 2 (L2) cache, nearly 7x larger than that of the V100, to maximize compute performance. Figure 1 visualizes the speedups obtained when replacing an NVIDIA V100 GPU with an NVIDIA A100 GPU without code modification.
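The "nearly 7x" figure and its relevance to STREAM-style measurements can be sketched as follows. The V100 L2 size of 6 MB is not stated in the text and is taken here from NVIDIA's published V100 specifications. The sketch also treats 1 MB as 2^20 bytes and models the STREAM triad as three double-precision arrays. These are assumptions, and the helper names are hypothetical.

```python
MIB = 1024 * 1024

A100_L2_BYTES = 40 * MIB  # quoted in the text
V100_L2_BYTES = 6 * MIB   # assumption: not in the text, from NVIDIA's V100 specifications


def triad_working_set_bytes(n_elements: int, bytes_per_element: int = 8) -> int:
    """Bytes touched by a STREAM-style triad a[i] = b[i] + s * c[i] (three arrays)."""
    return 3 * n_elements * bytes_per_element


def fits_in_l2(n_elements: int, l2_bytes: int, bytes_per_element: int = 8) -> bool:
    """True if the whole triad working set could reside in an L2 of the given size."""
    return triad_working_set_bytes(n_elements, bytes_per_element) <= l2_bytes


if __name__ == "__main__":
    print(f"L2 ratio A100/V100: {A100_L2_BYTES / V100_L2_BYTES:.2f}x")
    for n in (100_000, 1_000_000, 10_000_000):
        print(n, "elements:",
              "fits A100 L2" if fits_in_l2(n, A100_L2_BYTES) else "exceeds A100 L2",
              "/",
              "fits V100 L2" if fits_in_l2(n, V100_L2_BYTES) else "exceeds V100 L2")
```

A working set that fits in the larger L2 can be served partly from cache, which is one reason bandwidth comparisons such as the STREAM results are quoted for large data sets that exceed the cache on both GPUs.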