NVIDIA A100 and IBM

David Wolford, marketing manager for IBM's global cloud storage portfolio, wrote about the announcement in a company blog post last week.

For the first time, scale-up and scale-out workloads can be handled on a single platform. NVIDIA also introduced a PCIe form factor of the Ampere-based A100 GPU. IBM's Storage for Data and AI portfolio now supports the recently announced NVIDIA DGX A100, which is designed for analytics and AI workloads. To power AI workloads of all sizes, Microsoft Azure's new ND A100 v4 VM series can scale from a single partition of one A100 up to an instance of thousands of A100s networked with NVIDIA Mellanox interconnects, NVIDIA added. The announcement comes on the heels of top server makers unveiling plans for more than 50 A100-powered systems, and of Google Cloud's own A100 announcement.

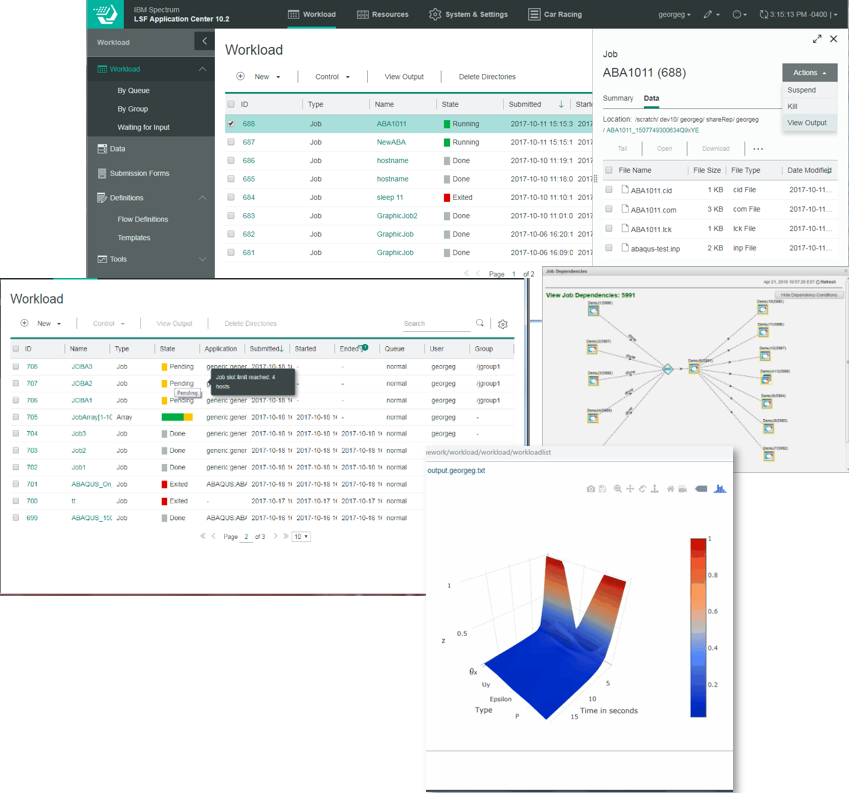

IBM brings together its file and object storage infrastructure with the NVIDIA DGX A100 to create an end-to-end solution. NVIDIA unveiled its Selene AI supercomputer today, in tandem with the updated listing of the world's fastest computers. Earlier, Cliff took us through how NVIDIA built Selene, currently No. 7 in the world, during the pandemic in "0 to Supercomputer: NVIDIA Selene Deployment." Now we are going to look more closely at what goes into that type of machine.

NVIDIA's new internal AI supercomputer, Selene, joins the upper echelon of the 55th TOP500 list and breaks an energy-efficiency barrier. NVIDIA describes the A100 GPU as delivering up to a 20x leap in AI performance over the previous generation, and as an end-to-end machine learning accelerator spanning data analytics, training, and inference. Using IBM Storage for Data and AI with NVIDIA DGX systems makes data simple and accessible across a hybrid infrastructure, delivering AI infrastructure solutions that fit your business model.

Storage for AI and big data simplifies deployment with optimized efficiency, driving faster insights that are massively scalable and globally available from the data center to the edge. The solution can catalog and discover, in real time, all the data for the NVIDIA AI solution held in both IBM Cloud Object Storage and IBM Spectrum Scale storage. Kicking off our Hot Chips 32 coverage, we are going to take a detailed look at the NVIDIA DGX A100 SuperPOD design. As the engine of the NVIDIA data center platform, the A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances.

The DGX A100 support builds on the existing collaboration between IBM and NVIDIA.