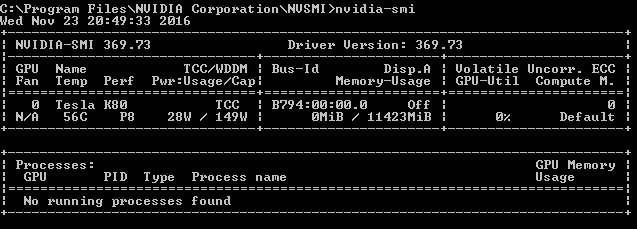

NVIDIA K80 Compute Capability

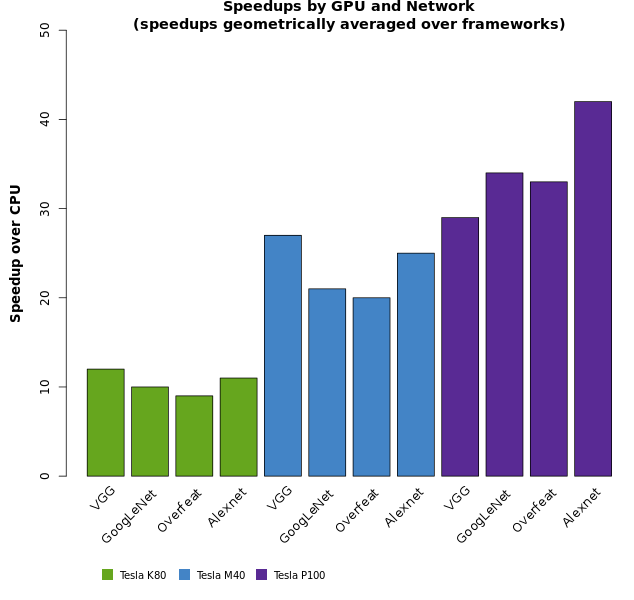

V100 (16 GB or 32 GB), P100, P40, K80, and/or K40.

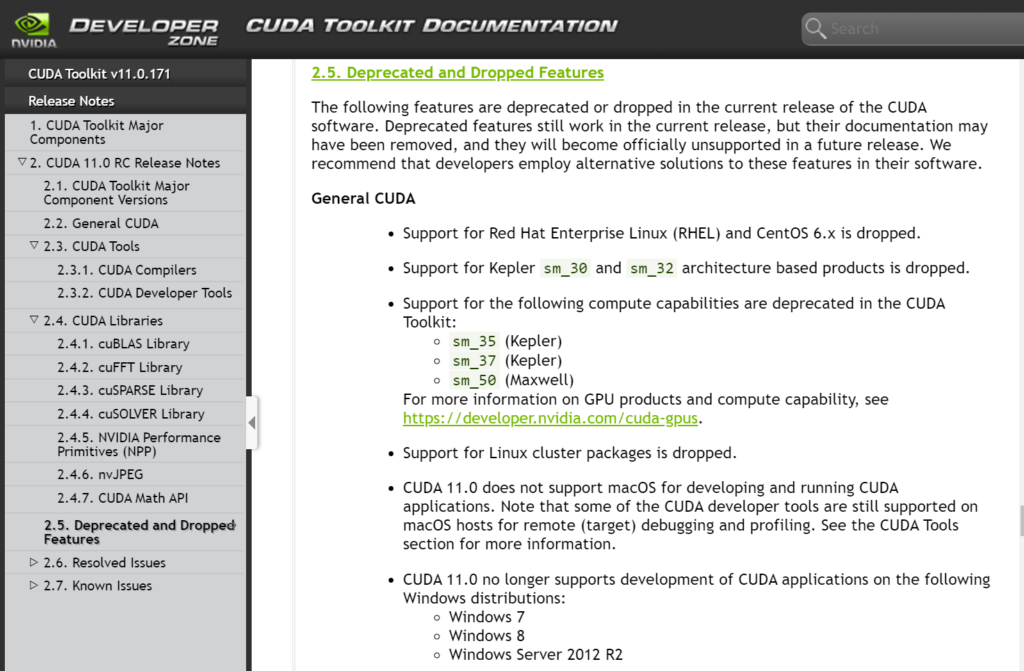

Now you need to know the correct value to replace XX; NVIDIA helps us with the useful CUDA GPUs webpage. CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA. Built on the Turing architecture, the NVIDIA TITAN RTX features 4608 CUDA cores, 576 full-speed mixed-precision Tensor Cores for accelerating AI, and 72 RT cores for accelerating ray tracing. With the introduction of CUDA compatible upgrade.
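As a minimal sketch of that lookup step, the snippet below hard-codes the compute capabilities of the GPUs named on this page, as listed on NVIDIA's CUDA GPUs page, and formats the value that would replace XX. The names `COMPUTE_CAPABILITY` and `arch_value` are hypothetical helpers, and the exact format XX expects (for example `37` versus `3.7`) depends on the build tool, which this page does not specify.

```python
# Compute capabilities (major, minor) for the GPUs mentioned on this page,
# copied by hand from NVIDIA's CUDA GPUs page. This table is illustrative
# and not exhaustive.
COMPUTE_CAPABILITY = {
    "Tesla K40": (3, 5),
    "Tesla K80": (3, 7),
    "Tesla P100": (6, 0),
    "Tesla P40": (6, 1),
    "Tesla V100": (7, 0),
    "TITAN Xp": (6, 1),
    "TITAN RTX": (7, 5),
}


def arch_value(gpu_name: str, sep: str = "") -> str:
    """Return the compute capability as a string, e.g. '37' or '3.7'.

    `sep` is an assumption about what the placeholder XX expects:
    use "" for forms like sm_37 and "." for forms like 3.7.
    """
    major, minor = COMPUTE_CAPABILITY[gpu_name]
    return f"{major}{sep}{minor}"


if __name__ == "__main__":
    print(arch_value("Tesla K80"))          # compact form
    print(arch_value("TITAN Xp", sep="."))  # dotted form
```

For a GPU that is not in the table, the value has to be read from the CUDA GPUs page itself.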

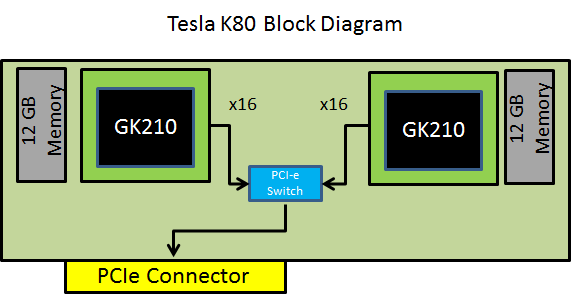

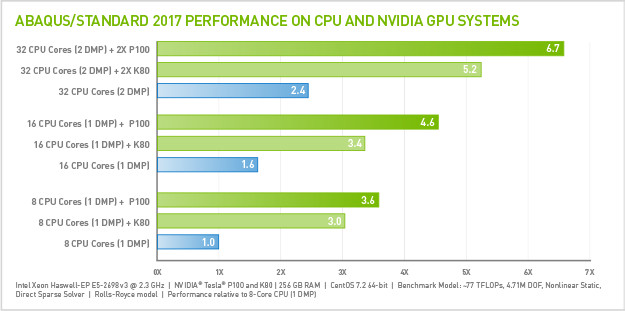

It also lists the availability of the Deep Learning Accelerator (DLA) on this hardware. NVIDIA Tesla was the name of NVIDIA's line of products targeted at stream processing or general-purpose graphics processing units (GPGPU), named after pioneering electrical engineer Nikola Tesla. Its products began using GPUs from the G80 series and have continued to accompany the release of new chips. Compute performance of NVIDIA Tesla K80. For example, if your GPU is an NVIDIA TITAN Xp.

NVIDIA kernel mode driver: 418.40.04 (CUDA 10.1), 440.33.01. TensorRT supports all NVIDIA hardware with capability SM 5.0 or higher. Recommended GPU for developers: NVIDIA TITAN RTX. NVIDIA TITAN RTX is built for data science, AI research, content creation, and general GPU development.
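The SM 5.0 threshold can be checked with a simple tuple comparison. This is only a sketch: `MIN_TENSORRT_SM` and `meets_minimum_sm` are hypothetical names, and the capability values are the ones published on NVIDIA's CUDA GPUs page (3.7 for the Tesla K80, 7.5 for the TITAN RTX).

```python
# Minimum capability stated in the text above: SM 5.0 or higher.
MIN_TENSORRT_SM = (5, 0)


def meets_minimum_sm(capability, minimum=MIN_TENSORRT_SM):
    """Return True if a (major, minor) capability is at least `minimum`.

    Python compares tuples element by element, so (6, 1) >= (5, 0)
    and (3, 7) < (5, 0).
    """
    return tuple(capability) >= tuple(minimum)


if __name__ == "__main__":
    print(meets_minimum_sm((3, 7)))  # Tesla K80
    print(meets_minimum_sm((7, 5)))  # TITAN RTX
```

Under this rule the K80, at compute capability 3.7, falls below the stated minimum.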

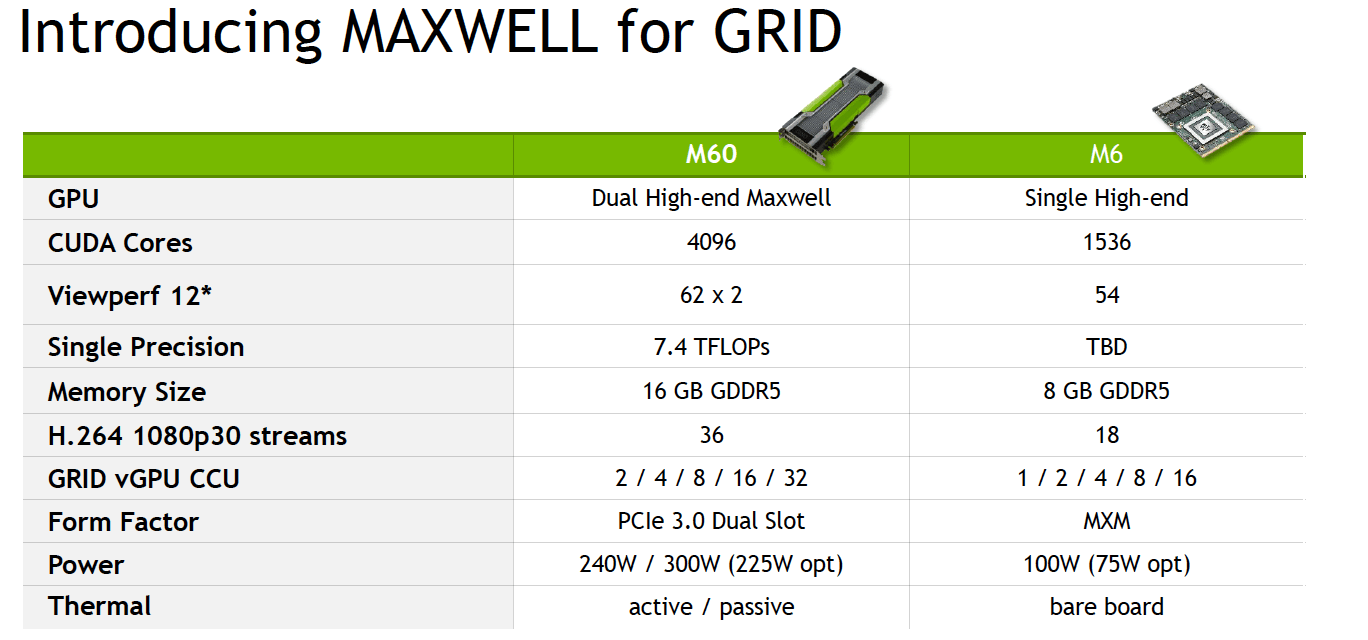

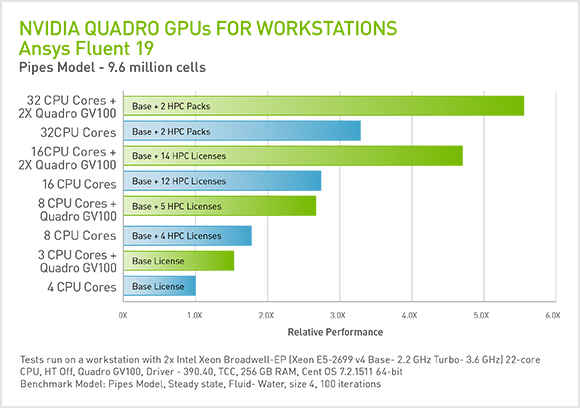

Results from other configurations are available. Binary compatibility for cubins is guaranteed from one compute capability minor revision to the next one. The following table lists NVIDIA hardware and which precision modes each hardware supports. Various capabilities fall under the GPUDirect umbrella, but the RDMA capability promises the largest performance gain.
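The cubin rule can be written as a small predicate. The sketch below assumes the fuller statement in the CUDA programming guide: a cubin built for compute capability X.y runs only on devices of capability X.z with z ≥ y, so compatibility does not extend backward or across major revisions. The function name `cubin_runs_on` is hypothetical, and PTX code, which can be JIT-compiled for newer devices, is not modeled.

```python
def cubin_runs_on(built_for, device):
    """Return True if a cubin built for `built_for` can run on `device`.

    Both arguments are (major, minor) compute capabilities. The rule
    modeled here: same major revision, and the device's minor revision
    is greater than or equal to the one the cubin was built for.
    """
    built_major, built_minor = built_for
    dev_major, dev_minor = device
    return built_major == dev_major and dev_minor >= built_minor


if __name__ == "__main__":
    print(cubin_runs_on((3, 5), (3, 7)))  # built for K40, run on K80
    print(cubin_runs_on((3, 7), (3, 5)))  # built for K80, run on K40
    print(cubin_runs_on((3, 7), (6, 1)))  # built for K80, run on P40
```

In practice this is why builds that target several GPU generations usually embed one cubin per major architecture.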

It also includes 24 GB of GPU memory for training neural networks. CUDA allows software developers and software engineers to use a CUDA-enabled graphics processing unit (GPU) for general-purpose processing, an approach termed GPGPU (general-purpose computing on graphics processing units).