NVIDIA K80 on AWS

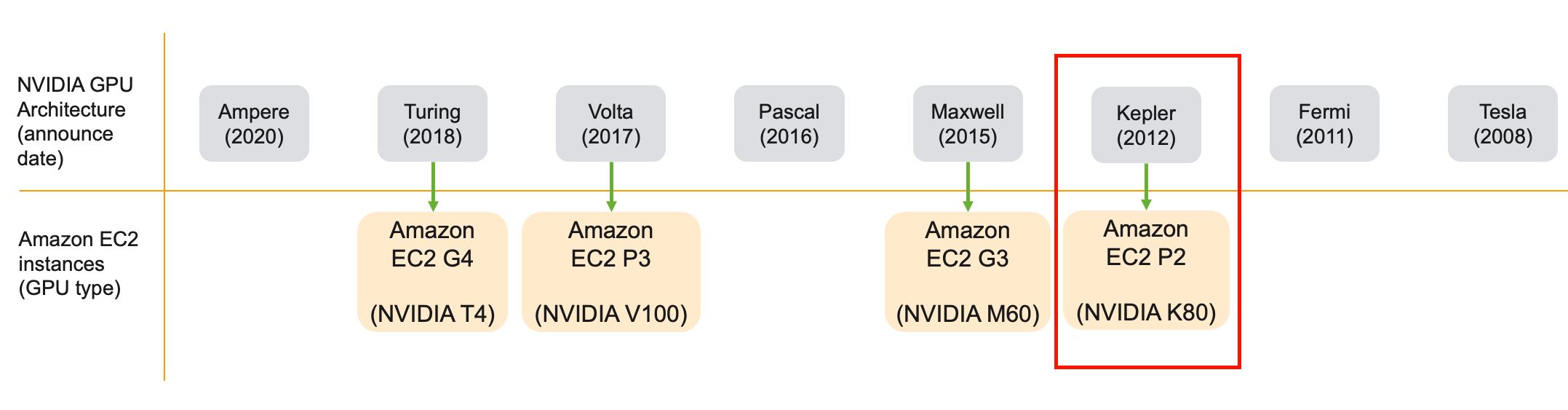

Prior to the launch of Amazon EC2 G4 instances, single-GPU G3 instances were a cost-effective option for development, testing, and prototyping.

The GPUs support CUDA 7.5 and above, OpenCL 1.2, and the GPU Compute APIs. The p2.16xlarge gives you control over C-states and P-states and can turbo boost up to 3.0 GHz when running on 1 or 2 cores. The P2 instance family is a welcome addition for compute- and data-focused users who were growing frustrated with the performance limitations of Amazon's G2 instances, which are backed by three-year-old NVIDIA GRID K520 GPUs.

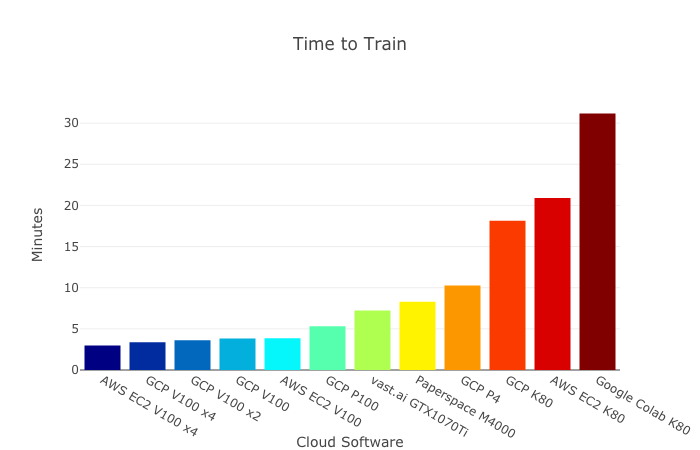

The GPUs on the p2.8xlarge and the p2.16xlarge are connected via a common PCI fabric. All of the instances are powered by an AWS-specific version of Intel's Broadwell processor running at 2.7 GHz. Separately, a performance issue related to slower H.265 video encode and decode on AWS P3 instances has been fixed. Although the Maxwell architecture is more recent than the Kepler architecture of the NVIDIA K80 found in P2 instances, you should still consider P2 instances before G3 for deep learning.

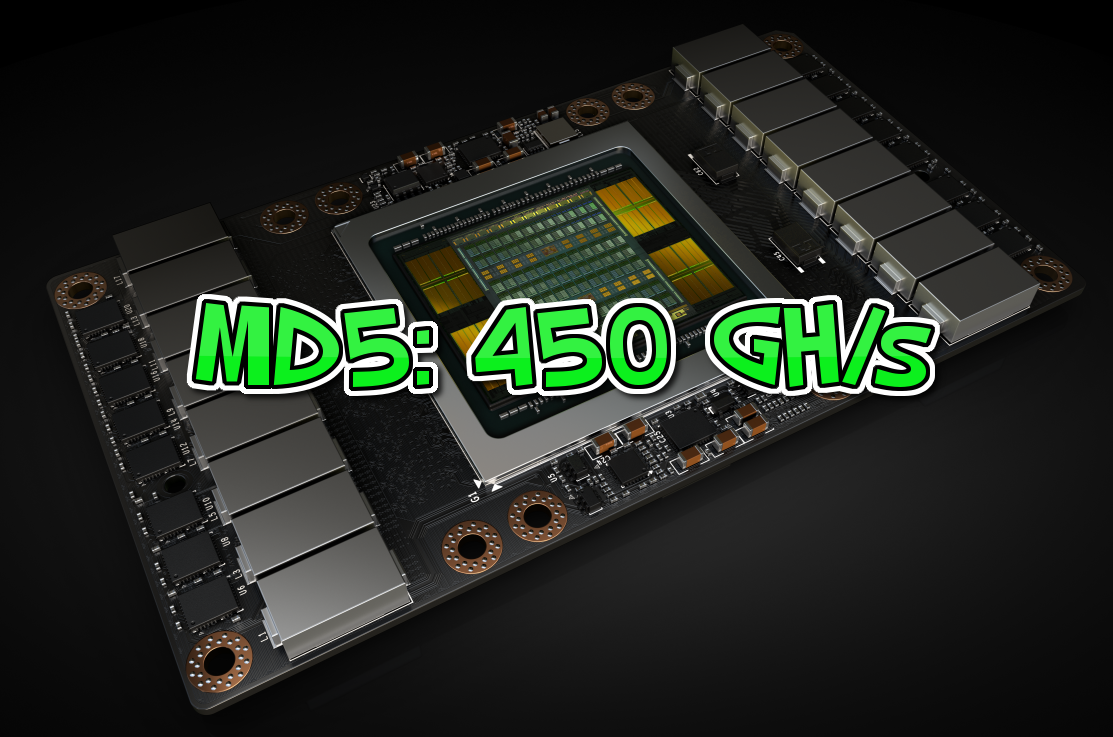

The NVIDIA Tesla K80 accelerator dramatically lowers data center costs by delivering exceptional performance with fewer, more powerful servers. Depending on the instance type, you can either download a public NVIDIA driver, download a driver from Amazon S3 that is available only to AWS customers, or use an AMI with the driver pre-installed.
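Whichever of these routes is used, it can help to confirm afterwards that the driver is actually loaded and sees the GPUs. The following is a minimal sketch, assuming the `nvidia-smi` utility that ships with the NVIDIA driver is on the `PATH`; the helper names `parse_gpu_csv` and `list_gpus` are made up for this illustration and are not part of any AWS or NVIDIA tooling.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi; the choice of fields is an assumption
# made for this sketch, not something prescribed by the text.
QUERY_FIELDS = "name,driver_version,memory.total"


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader` output into a list of dicts."""
    gpus = []
    for line in text.splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        name, driver_version, memory_total = parts
        gpus.append(
            {
                "name": name,
                "driver_version": driver_version,
                "memory_total": memory_total,
            }
        )
    return gpus


def list_gpus():
    """Return the GPUs reported by the driver, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools not installed (or not on PATH)
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []  # tool present, but the driver could not be reached
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No NVIDIA GPUs visible; the driver may not be installed.")
    for index, gpu in enumerate(gpus):
        print(index, gpu["name"], gpu["driver_version"], gpu["memory_total"])
```

An empty result here does not say which of the three installation routes went wrong; it only indicates that the driver is not yet usable on the instance.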

The K80 is engineered to boost throughput in real-world applications by 5-10x while also saving customers up to 50% for an accelerated data center compared to a CPU-only system. P2 instances provide up to 16 NVIDIA K80 GPUs, 64 vCPUs, and 732 GiB of host memory, with a combined 192 GB of GPU memory, 40 thousand parallel processing cores, 70 teraflops of single-precision floating-point performance, and over 23 teraflops of double-precision floating-point performance.
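The aggregate figures are easier to compare against other GPUs once they are divided across the 16 GPUs. The short calculation below is a sketch that uses only the totals quoted above; the variable names are illustrative, and the core and double-precision figures are rounded in the source ("40 thousand", "over 23"), so the per-GPU results are approximate.

```python
# Aggregate figures for the largest P2 configuration, as quoted in the text.
TOTAL_GPUS = 16
TOTAL_GPU_MEMORY_GB = 192
TOTAL_CORES = 40_000       # "40 thousand" is a rounded figure
TOTAL_FP32_TFLOPS = 70
TOTAL_FP64_TFLOPS = 23     # "over 23", so treat this as a lower bound


def per_gpu(total, gpus=TOTAL_GPUS):
    """Split an aggregate figure evenly across the GPUs."""
    return total / gpus


per_gpu_summary = {
    "memory_gb": per_gpu(TOTAL_GPU_MEMORY_GB),
    "cores": per_gpu(TOTAL_CORES),
    "fp32_tflops": per_gpu(TOTAL_FP32_TFLOPS),
    "fp64_tflops": per_gpu(TOTAL_FP64_TFLOPS),
}

if __name__ == "__main__":
    for name, value in per_gpu_summary.items():
        print(f"{name}: {value}")
```

This works out to 12 GB of memory, roughly 2,500 cores, about 4.4 single-precision teraflops, and at least 1.4 double-precision teraflops per GPU. The core count is consistent with the 2,496 CUDA cores per GPU in NVIDIA's published K80 specification (16 × 2,496 = 39,936).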

An instance with an attached GPU, such as a P3 or G4 instance, must have the appropriate NVIDIA driver installed. Amazon Web Services (AWS) has announced the availability of a new GPU computing instance based on NVIDIA's Tesla K80 devices.