NVIDIA Jetson Xavier TensorRT

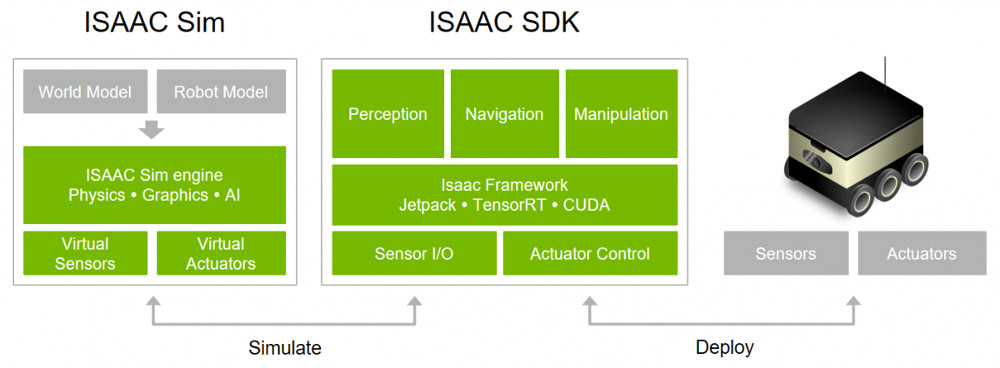

jetson-inference is a training guide for inference on the NVIDIA Jetson TX1 and TX2 using NVIDIA DIGITS. Key takeaways for MATLAB users: optimized CUDA and TensorRT code generation; Jetson Xavier and DRIVE Xavier targeting; processor-in-the-loop (PIL) testing and system integration; and platform productivity through workflow automation, ease of use, and framework interoperability. Hi, I serialized an engine from PyTorch using pytorch2trt.

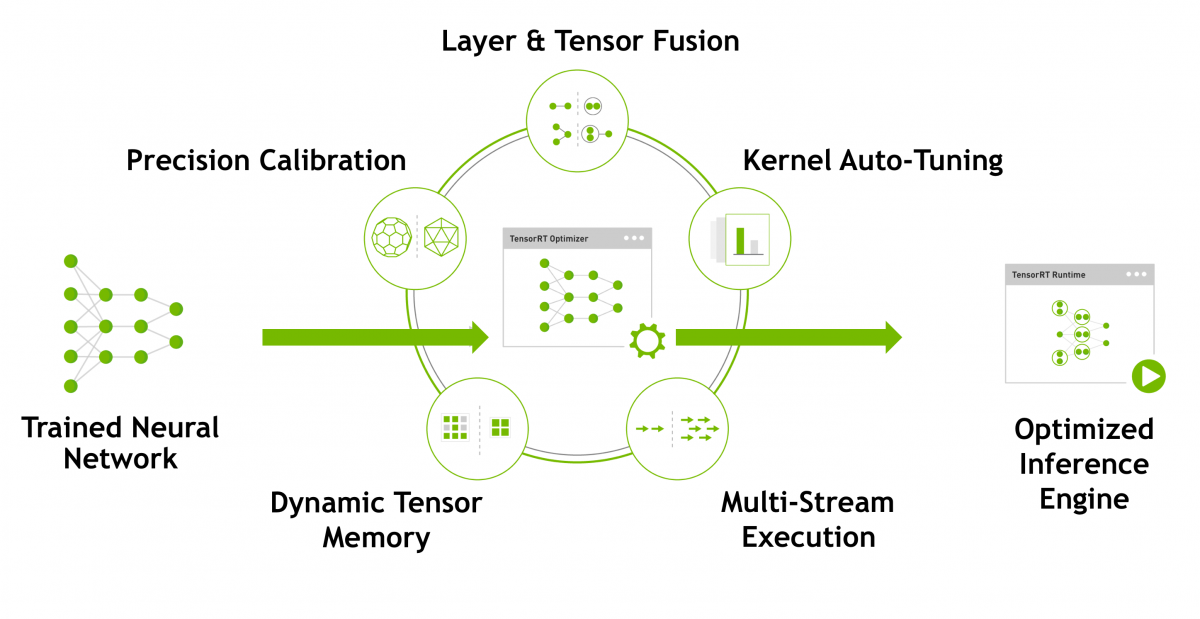

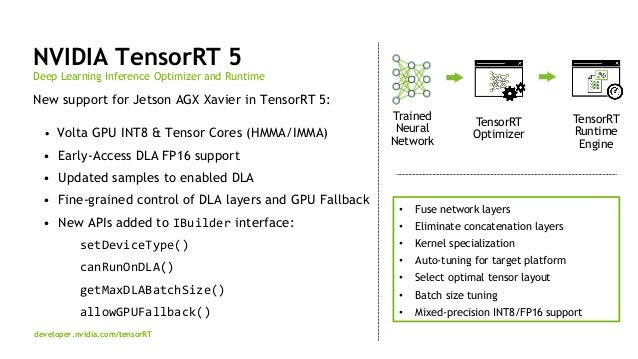

The engine outputs wrong results. Users can configure operating modes for their applications at 10 W or 20 W with the lower-power, lower-price Jetson AGX Xavier 8GB module, or at 10 W, 15 W, or 30 W with the Jetson AGX Xavier module. Jetson Xavier is a powerful platform from NVIDIA, supported by RidgeRun Engineering. TensorRT provides APIs in C++ and Python that let you express deep learning models through the Network Definition API, or load a predefined model through the parsers, so that TensorRT can optimize and run them on an NVIDIA GPU.
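As one illustration of the parser route described above, the sketch below loads a model through the ONNX parser with the Python API. It assumes a TensorRT 8.x-style Python API (the TensorRT 5.0 release mentioned elsewhere on this page exposes a different builder interface) and an ONNX file exported from the training framework. The function name `build_engine_from_onnx` is hypothetical. Treat it as a starting point rather than a reference implementation.

```python
from pathlib import Path


def build_engine_from_onnx(onnx_path, fp16=True):
    """Parse an ONNX file and return a serialized TensorRT engine.

    Assumption: TensorRT 8.x Python bindings are installed (for example
    through JetPack). The import is done lazily so that this module can
    still be loaded on a machine without TensorRT.
    """
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)

    # Explicit-batch networks are what the ONNX parser expects.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    # Populate the network definition from the ONNX file.
    if not parser.parse(Path(onnx_path).read_bytes()):
        errors = [parser.get_error(i).desc() for i in range(parser.num_errors)]
        raise RuntimeError("ONNX parse failed:\n" + "\n".join(errors))

    config = builder.create_builder_config()
    if fp16:
        # Allow reduced-precision kernels where the hardware supports them.
        config.set_flag(trt.BuilderFlag.FP16)

    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")
    return serialized
```

On a Jetson with TensorRT installed, the returned buffer could be written to disk and later deserialized with a `trt.Runtime`. The path passed in (for example `model.onnx`) is a placeholder.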

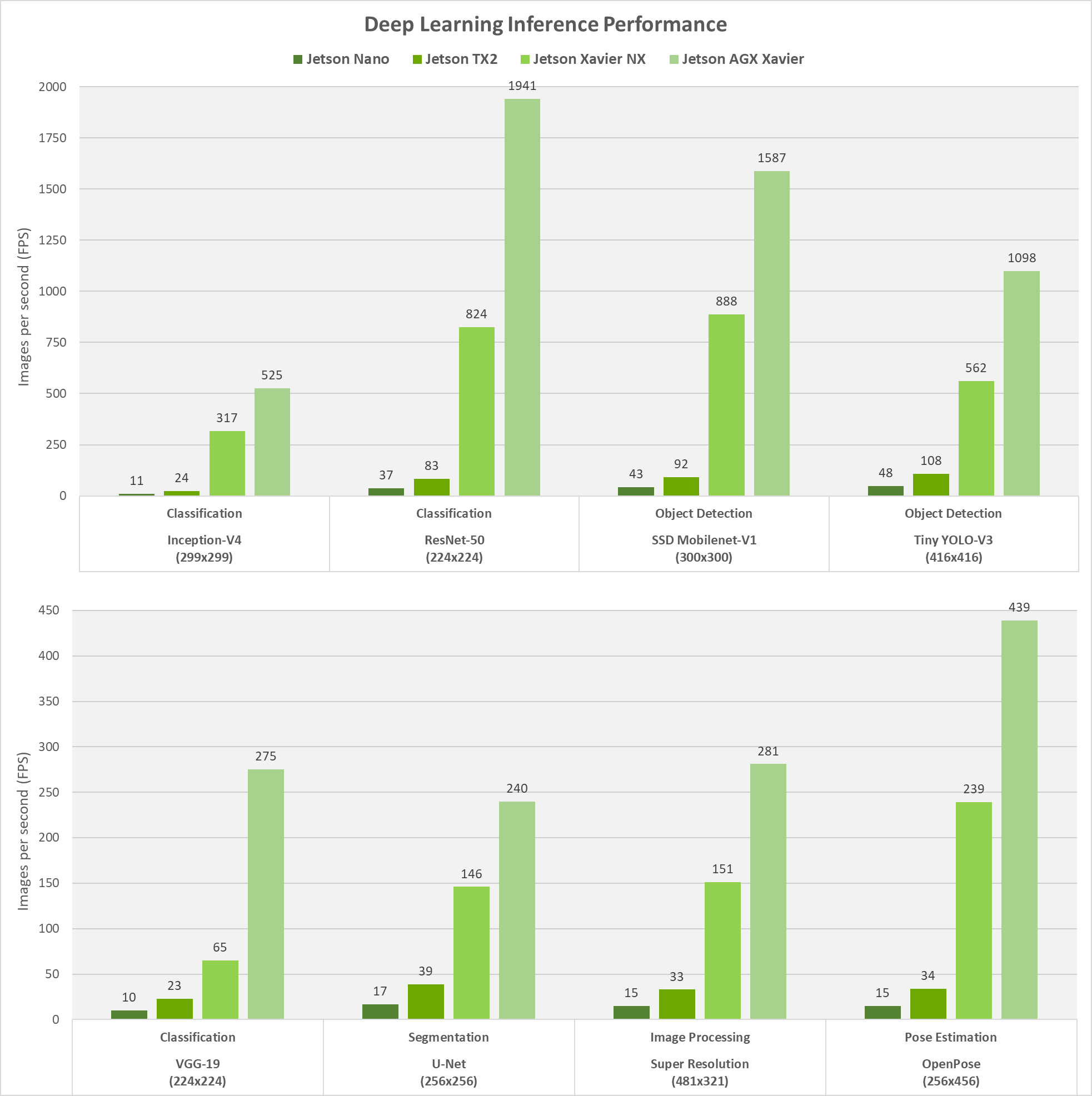

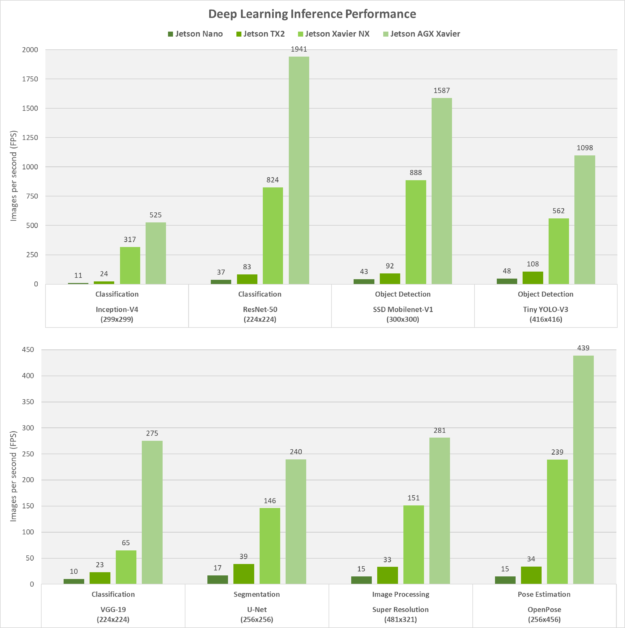

The Jetson platform includes modules such as Jetson Nano, Jetson AGX Xavier, and Jetson TX2. Accelerating inference in frameworks with TensorRT. All output values are zeros, both for a real input image and for random input. Finally, to quickly prototype designs on GPUs, MATLAB users can compile the complete algorithm to run on any modern NVIDIA GPU, from NVIDIA Tesla to DRIVE to Jetson AGX Xavier platforms.

Labels: Jetson, question (Sep 17, 2020). The following section demonstrates how to build and use NVIDIA samples for the TensorRT C++ API and Python API. The output of running on one DLA … Define the CUDA device by …

In this post, you'll learn how you can use MATLAB's new capabilities to compile MATLAB applications, including deep learning networks and any pre- or postprocessing logic, into CUDA and run them on modern … These release notes describe the key features, software enhancements, and known issues when installing TensorFlow for Jetson Platform. How can I debug the intermediate values to debug the interface to the engine?
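For the all-zero output reported in the question above, one possible first step is to summarize each buffer and compare the engine output against the original framework's output for the same input. The sketch below is a minimal, framework-independent version of that idea. It assumes the tensors have already been copied back to the host and flattened into plain Python sequences. The helper names `summarize` and `max_abs_diff` are hypothetical and not part of TensorRT or PyTorch.

```python
import math


def summarize(name, values):
    """Return basic statistics that make all-zero or NaN buffers obvious."""
    values = [float(v) for v in values]
    finite = [v for v in values if math.isfinite(v)]
    return {
        "name": name,
        "count": len(values),
        "zeros": sum(1 for v in values if v == 0.0),
        "non_finite": len(values) - len(finite),
        "min": min(finite) if finite else None,
        "max": max(finite) if finite else None,
    }


def max_abs_diff(reference, candidate):
    """Largest element-wise absolute difference between two flat buffers."""
    if len(reference) != len(candidate):
        raise ValueError("buffers have different lengths")
    return max((abs(r - c) for r, c in zip(reference, candidate)), default=0.0)


# Example with made-up numbers: a reference output and an all-zero engine output.
reference_out = [0.1, 0.7, 0.2]
engine_out = [0.0, 0.0, 0.0]
print(summarize("engine_out", engine_out))
print("max abs diff:", max_abs_diff(reference_out, engine_out))
```

Running the same summary on the input buffer as well as the output can help separate two cases: the input never reaching the engine (for instance through a wrong binding or a missing host-to-device copy) and the engine itself producing zeros. This is a debugging heuristic, not a confirmed diagnosis of the problem in the original post.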

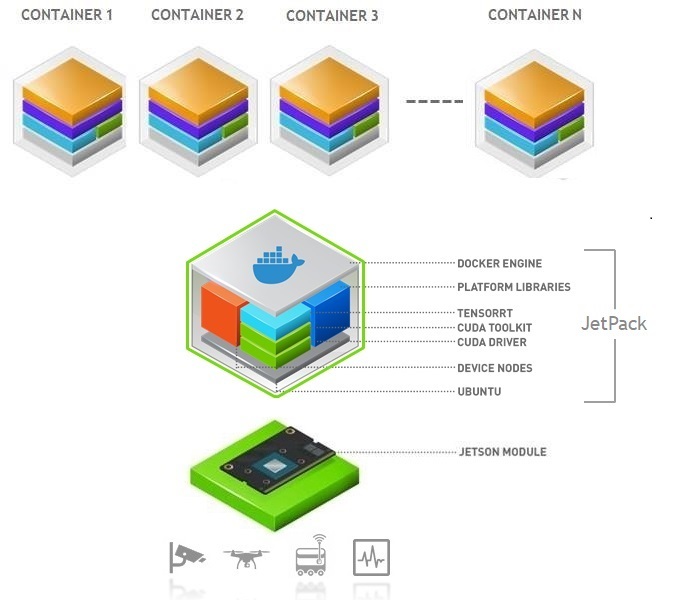

The dev branch of the repository is specifically oriented toward NVIDIA Jetson Xavier, since it uses the Deep Learning Accelerator (DLA) integration with TensorRT 5. Jetson AGX Xavier enables new levels of compute density, power efficiency, and AI inferencing capabilities at the edge. TensorRT applies graph optimizations and layer fusion, among other optimizations, while also finding the fastest implementation of that model leveraging a diverse collection of … I am using the Jetson AGX Xavier with the latest JetPack 4.1.1 and TensorRT 5.0. Why did NVIDIA add two DLAs to the Xavier rather than just increasing the number of CUDA cores and Tensor Cores?
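To make the DLA discussion above more concrete, the sketch below shows how a TensorRT builder configuration might be pointed at one of the two DLA cores. It assumes the builder-config style Python API of later TensorRT releases (7.x/8.x), not the TensorRT 5 API referred to in the text. The function name `enable_dla` and its `trt` parameter are hypothetical, and the parameter exists only so the function can be exercised on a machine without TensorRT.

```python
def enable_dla(config, core=0, trt=None):
    """Configure a TensorRT builder config to prefer a DLA core.

    config: an object like the one returned by builder.create_builder_config()
    core:   DLA core index (the text above mentions two DLAs, so 0 or 1)
    trt:    the tensorrt module; imported lazily when not supplied
    """
    if trt is None:
        import tensorrt as trt  # assumption: TensorRT Python bindings installed

    # Run layers on the DLA by default instead of the GPU.
    config.default_device_type = trt.DeviceType.DLA
    config.DLA_core = core

    # Layers the DLA cannot run fall back to the GPU instead of failing the build.
    config.set_flag(trt.BuilderFlag.GPU_FALLBACK)

    # The DLA runs reduced-precision (FP16 or INT8) layers; FP16 is chosen here.
    config.set_flag(trt.BuilderFlag.FP16)
    return config
```

With a real builder, this would be called as `enable_dla(builder.create_builder_config(), core=0)` before building the engine. Building one engine per DLA core is one way to keep the GPU free for other work, though whether that is faster for a given model has to be measured.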