NVIDIA Jetson TX2 TensorRT

TensorRT provides APIs via C++ and Python that help to express deep learning models via the Network Definition API, or to load a pre-defined model via the parsers, which allows TensorRT to optimize and run them on an NVIDIA GPU.
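As a rough illustration of the parser route described above, the following is a minimal sketch that assumes the TensorRT 8.x Python API and an ONNX model file. The function name `build_engine_from_onnx` and its parameters are hypothetical, and the older TensorRT 3 and 4 releases mentioned elsewhere on this page exposed a different Python API, so treat this as a starting point rather than a reference implementation.

```python
def build_engine_from_onnx(onnx_path, use_fp16=True):
    """Parse an ONNX file and return a serialized TensorRT engine, or None on parse failure.

    Hypothetical helper; assumes the TensorRT 8.x Python bindings are installed.
    """
    # Imported lazily so this module can be loaded on machines without TensorRT.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)

    # The ONNX parser in TensorRT 7/8 expects an explicit-batch network.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    # Load the pre-defined model through the parser.
    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            for i in range(parser.num_errors):
                print(parser.get_error(i))
            return None

    # Ask the optimizer to consider FP16 kernels when the GPU supports them.
    config = builder.create_builder_config()
    if use_fp16 and builder.platform_has_fast_fp16:
        config.set_flag(trt.BuilderFlag.FP16)

    return builder.build_serialized_network(network, config)
```

Calling `build_engine_from_onnx("model.onnx")` on a device with TensorRT installed would return a serialized engine that can be written to disk and later deserialized by the runtime; the file name here is only a placeholder.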

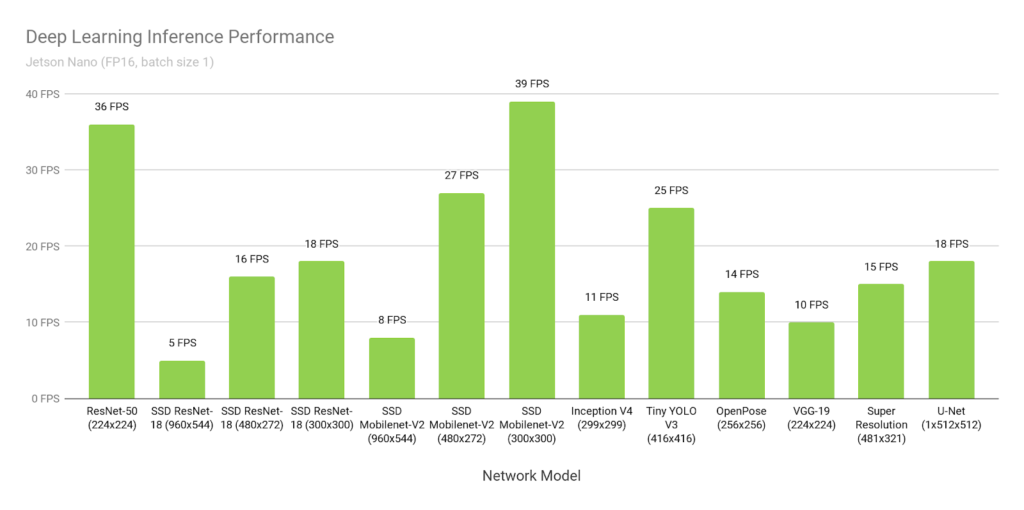

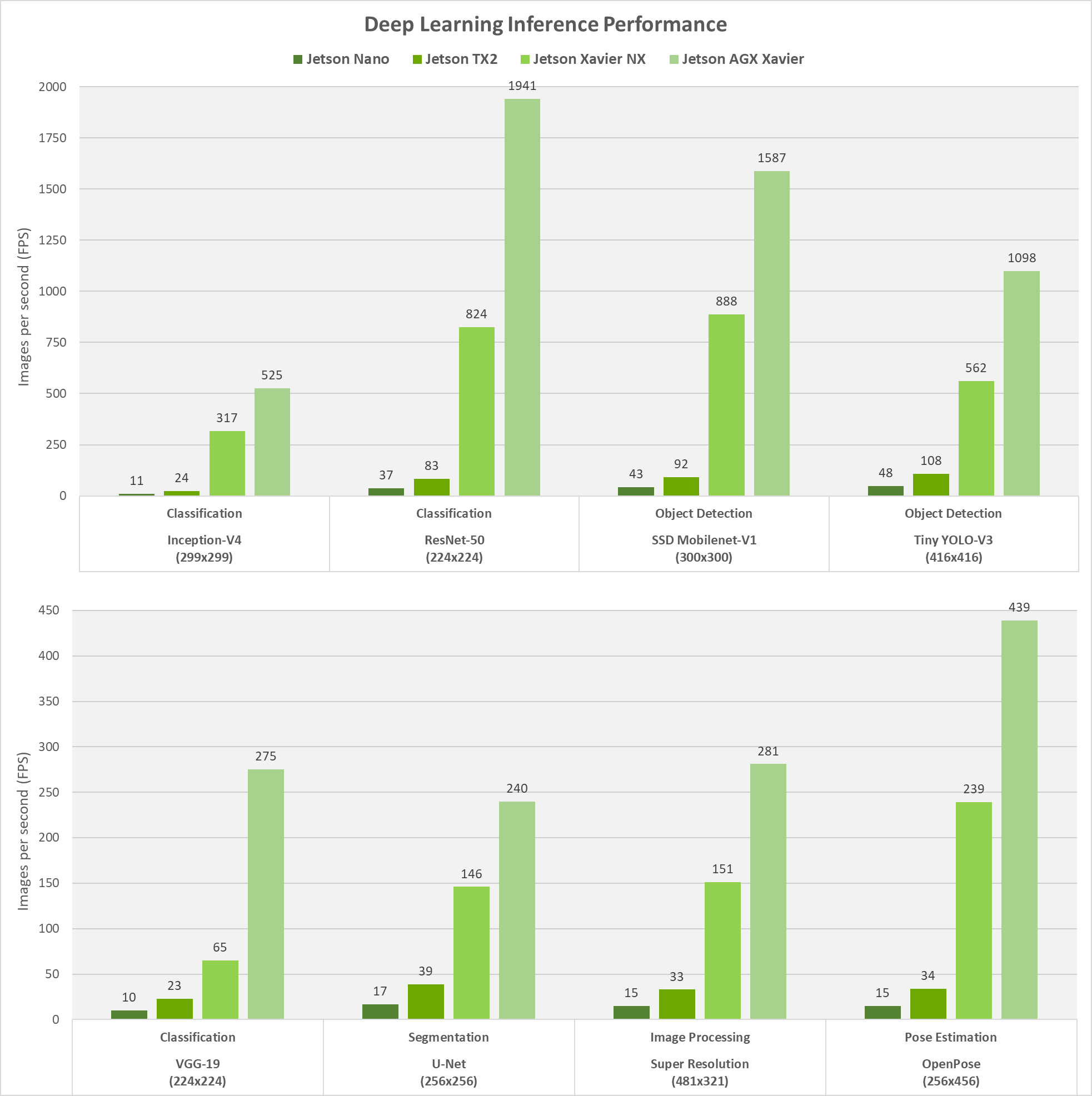

I've shared the pip wheel file in the "How to set up Jetson TX2 environment" section above. TensorRT applies graph optimizations and layer fusion, among other optimizations, while also finding the fastest implementation of that model, leveraging a diverse collection of ... TensorRT-based applications perform up to 40x faster than CPU-only platforms during inference. Deep learning inference nodes for ROS, with support for NVIDIA Jetson TX1/TX2/Xavier and TensorRT, are available in dusty-nv/ros_deep_learning.
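To make the idea of layer fusion more concrete, here is a small pure-Python sketch that folds a batch-normalization step into the preceding dense layer, so that two operations become one. This is only an illustration of the general technique: the layer sizes and values are made up, and the fusions TensorRT actually performs happen inside its optimizer and are not exposed this way.

```python
import math

def dense(weights, bias, x):
    """Compute y = W x + b for a small dense layer given as nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Apply inference-time batch normalization element-wise."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]

def fuse_dense_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold batch-norm parameters into the dense layer's weights and bias."""
    scale = [g / math.sqrt(s + eps) for g, s in zip(gamma, var)]
    fused_w = [[w * k for w in row] for row, k in zip(weights, scale)]
    fused_b = [(b - m) * k + be
               for b, m, k, be in zip(bias, mean, scale, beta)]
    return fused_w, fused_b

# Illustrative (made-up) parameters for a 2x2 layer.
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.3]
mean, var = [0.2, -0.1], [1.0, 4.0]
x = [1.0, 2.0]

# Two separate layers versus one fused layer.
unfused = batch_norm(dense(W, b, x), gamma, beta, mean, var)
fused_W, fused_b = fuse_dense_bn(W, b, gamma, beta, mean, var)
fused = dense(fused_W, fused_b, x)
```

Both paths produce the same outputs up to floating-point rounding, but the fused version needs a single pass over the data, which is the kind of saving a fusing optimizer aims for.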

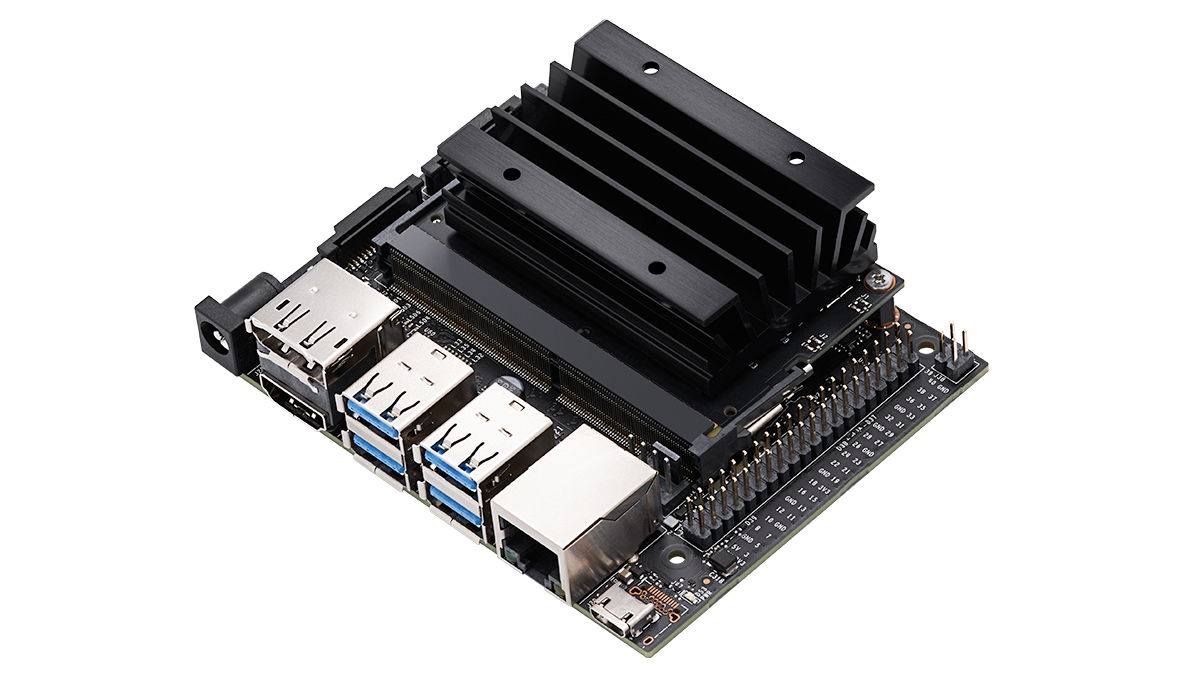

I have NumPy version 1.11 on Ubuntu 16.04 (x86) when trying to import tensorrt with ... NVIDIA Jetson TX2 gives you exceptional speed and power efficiency in an embedded AI computing device. With TensorRT, you can optimize neural network models trained ... It includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for deep learning inference applications.

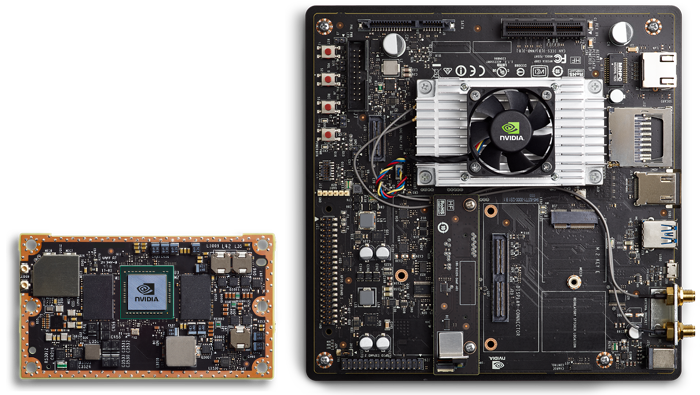

Since NVIDIA only officially provided TensorFlow 1.8.0 pip wheel files for JetPack 3.2.1 (TensorRT 3.0 GA), I ended up compiling TensorFlow 1.8.0 against JetPack 3.3 (TensorRT 4.0 GA) by myself. This supercomputer-on-a-module brings true AI computing at the edge with an NVIDIA Pascal GPU, up to 8 GB of memory, 59.7 GB/s of memory bandwidth, and a wide range of standard hardware interfaces that offer the perfect fit for a variety of products and form factors. TF-TRT (TensorRT-optimized graph, FP16): the above benchmark timings were gathered after placing the Jetson TX2 in MAX-N mode. TensorRT runs with minimal dependencies on every GPU platform, from datacenter GPUs such as the P4 and V100 to autonomous driving and embedded platforms such as the DRIVE PX 2 and Jetson TX2.
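Because the FP16 mode mentioned above trades numeric precision for speed, it can help to see how much rounding half precision introduces. The sketch below uses only the Python standard library (the `struct` module's `"e"` format) to round values to IEEE 754 half precision; the helper name `to_fp16` and the sample values are illustrative assumptions and are unrelated to the benchmark itself.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

# Compare a few sample values with their half-precision roundings.
for v in (0.1, 1.0, 1000.123, 65504.0):
    r = to_fp16(v)
    print(f"{v} -> {r} (absolute error {abs(v - r):.6g})")
```

Values that are exactly representable, such as 1.0, survive unchanged, while others pick up a small error that grows with magnitude. Whether that error matters depends on the model, so accuracy is usually re-checked after switching to FP16.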

Hi there AastaLLL, I'm having sort of the same issue, but with CUDA 9.2 and TensorRT 4. To do this, run the following commands in a terminal. For more information on how TensorRT and NVIDIA GPUs deliver high performance and efficient inference, resulting in dramatic cost savings in the data center and ... The Jetson TX2 Developer Kit enables a fast and easy way to develop hardware and software for the Jetson TX2 AI supercomputer-on-a-module.