Nvidia Docker Benchmark

For more information, see the Installing Docker and NVIDIA Docker section of the NVIDIA Docker documentation.

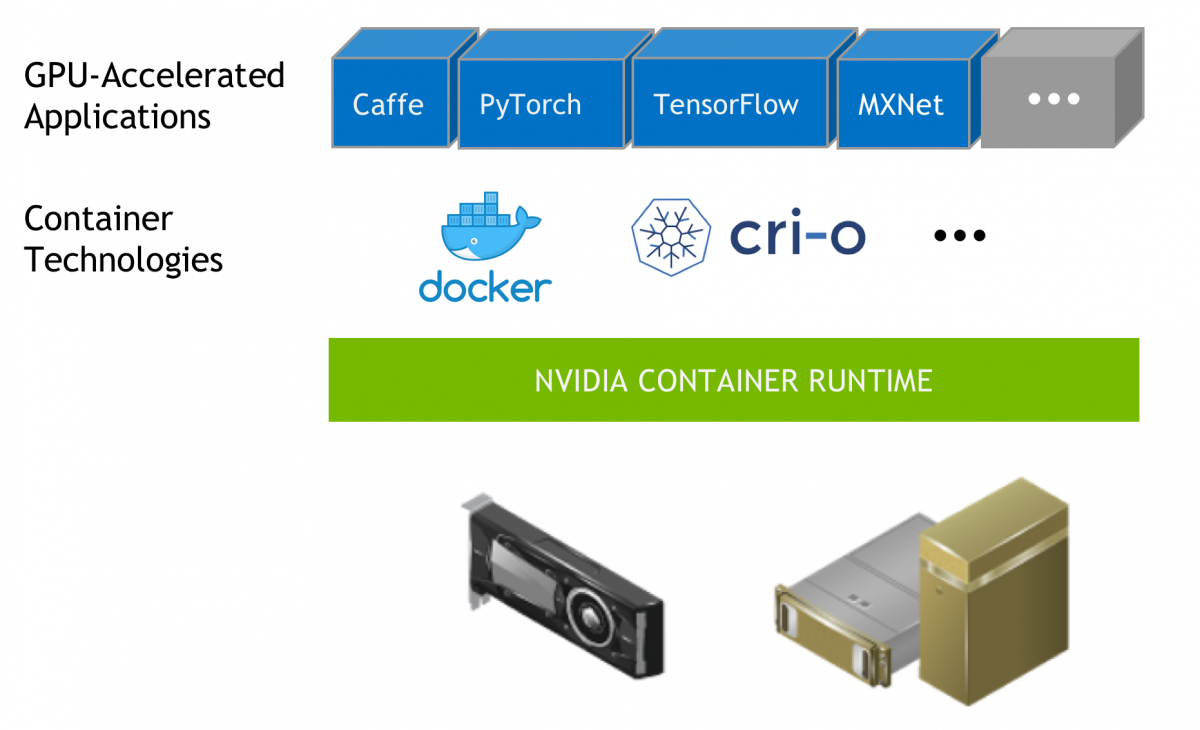

NVIDIA designed NVIDIA Docker in 2016 to make Docker images that use NVIDIA GPUs portable. The NVIDIA Container Toolkit includes a container runtime library and utilities that automatically configure containers to use NVIDIA GPUs. Note that the NVIDIA Container Toolkit does not yet support the Docker Desktop WSL 2 backend.
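
One way the toolkit configures a container is through the `nvidia` runtime plus a pair of environment variables that say which GPUs and which driver components to inject. The sketch below only builds that `docker run` command line; it assumes a runtime named `nvidia` has been registered with the Docker daemon, and the image and command you pass in are placeholders.

```python
from typing import Sequence


def runtime_run_command(
    image: str,
    command: Sequence[str],
    visible_devices: str = "all",
    capabilities: str = "compute,utility",
) -> list[str]:
    """Build a `docker run` argv that relies on the nvidia runtime.

    Assumes the Docker daemon knows a runtime named "nvidia"; its hook
    reads the two environment variables below when the container starts.
    """
    return [
        "docker", "run", "--rm",
        "--runtime=nvidia",
        # Which GPUs to expose: "all", "none", or a comma-separated list of indices or UUIDs.
        "-e", f"NVIDIA_VISIBLE_DEVICES={visible_devices}",
        # Which driver pieces to mount: "compute" for CUDA, "utility" for nvidia-smi/NVML.
        "-e", f"NVIDIA_DRIVER_CAPABILITIES={capabilities}",
        image,
        *command,
    ]


print(" ".join(runtime_run_command("ubuntu", ["nvidia-smi"])))
```

Keeping the command as a list (rather than one shell string) lets it go straight to `subprocess.run` without any shell quoting concerns.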

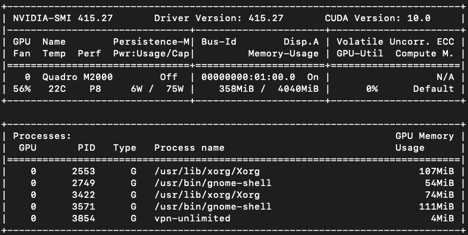

Let's make sure everything works as expected by running nvidia-smi, an NVIDIA utility for monitoring and managing GPUs, inside a Docker container. Beyond that, you may want to build a new image from scratch or augment an existing image with custom code, libraries, data, or settings for your corporate infrastructure. Note that NVIDIA Management Library (NVML) APIs are not supported. The NVIDIA Docker plugin enables deployment of GPU-accelerated applications across any Linux GPU server with NVIDIA Docker support.
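
The nvidia-smi check can be scripted. The following is a minimal sketch, assuming Docker 19.03 or later (for the `--gpus` flag) and an image name of your choosing; the helper names `gpus_arg`, `nvidia_smi_command`, and `run_smoke_test` are hypothetical and not part of any NVIDIA tool.

```python
import shutil
import subprocess
from typing import Optional, Sequence


def gpus_arg(devices: Optional[Sequence[int]] = None) -> str:
    """Value for Docker's --gpus flag: every GPU, or only the listed indices."""
    if not devices:
        return "all"
    # Docker parses the --gpus value as CSV, so a multi-device list is wrapped
    # in literal double quotes (the shell form is '"device=0,1"').
    return '"device=' + ",".join(str(d) for d in devices) + '"'


def nvidia_smi_command(image: str, devices: Optional[Sequence[int]] = None) -> list[str]:
    """argv for a throwaway container (--rm) that only runs nvidia-smi."""
    return ["docker", "run", "--rm", "--gpus", gpus_arg(devices), image, "nvidia-smi"]


def run_smoke_test(image: str, devices: Optional[Sequence[int]] = None) -> bool:
    """Run nvidia-smi in a container and report whether it exited cleanly."""
    if shutil.which("docker") is None:
        return False  # no Docker CLI on PATH, so there is nothing to test
    result = subprocess.run(
        nvidia_smi_command(image, devices), capture_output=True, text=True
    )
    print(result.stdout if result.returncode == 0 else result.stderr)
    return result.returncode == 0
```

For example, `run_smoke_test("ubuntu")` (the image is only an example) should print the usual nvidia-smi table when the toolkit is set up correctly, and `run_smoke_test("ubuntu", [0])` restricts the container to the first GPU.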

The end result will be an up-to-date configuration suitable for a personal workstation, and deploying GPU applications to servers becomes straightforward. On WSL 2, use Docker CE for Linux inside your WSL 2 Linux distribution instead of Docker Desktop. As of Docker 19.03, NVIDIA GPUs are natively supported as devices in the Docker runtime.
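
Because native GPU support depends on the Docker version, a setup script might check for 19.03 or newer before relying on it. This is a sketch under that assumption; `supports_gpus_flag` is a hypothetical helper, and `docker_server_version` needs a running daemon.

```python
import re
import subprocess


def docker_server_version() -> str:
    """Ask the local Docker daemon for its version string."""
    result = subprocess.run(
        ["docker", "version", "--format", "{{.Server.Version}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def supports_gpus_flag(version: str) -> bool:
    """True if a Docker version string is 19.03 or newer."""
    # Only major.minor matter; patch levels and suffixes such as "-ce" are ignored.
    match = re.match(r"\s*(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized Docker version: {version!r}")
    return (int(match.group(1)), int(match.group(2))) >= (19, 3)


for v in ["18.09.1-ce", "19.03.8", "24.0.7"]:
    print(v, supports_gpus_flag(v))
```

Comparing `(major, minor)` tuples avoids the string-comparison trap where `"9.0" > "19.03"`.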

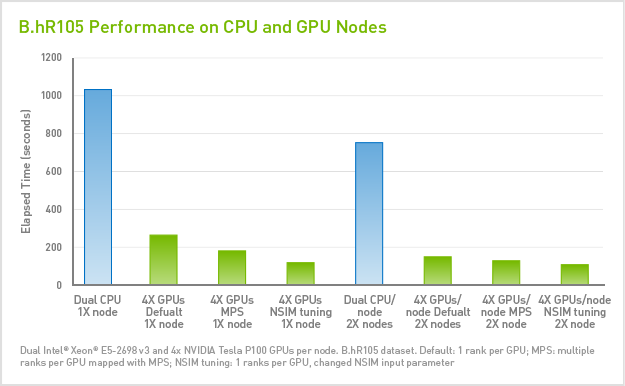

Performance has not yet been tuned on the preview driver. Model Analyzer runs as a Helm chart, a Docker container, or a standalone command-line interface. At NVIDIA, we use containers in a variety of ways, including development, testing, benchmarking, and, of course, in production as the mechanism for deploying deep learning frameworks through the NVIDIA DGX-1's cloud-managed software. The original NVIDIA Docker allowed driver-agnostic CUDA images and provided a Docker command-line wrapper that mounted the user-mode components of the driver and the GPU device files into the container at launch.
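
To make the wrapper's job concrete, here is a rough sketch of the kind of extra `docker run` flags such a wrapper adds. It assumes the common Linux device node names (`/dev/nvidiactl`, `/dev/nvidia-uvm`, `/dev/nvidiaN`); `driver_lib_dir` and `mount_point` are hypothetical placeholders for where the user-mode driver libraries live and where they appear in the container. It is an illustration, not the wrapper's actual code.

```python
from typing import Optional, Sequence


def legacy_device_flags(
    gpu_indices: Sequence[int],
    driver_lib_dir: Optional[str] = None,
    mount_point: str = "/usr/local/nvidia",
) -> list[str]:
    """Sketch of device and volume flags a GPU-aware docker wrapper might inject."""
    # Control and unified-memory nodes are shared by all GPUs;
    # each GPU then has its own /dev/nvidiaN node.
    nodes = ["/dev/nvidiactl", "/dev/nvidia-uvm"]
    nodes += [f"/dev/nvidia{i}" for i in gpu_indices]
    flags: list[str] = []
    for node in nodes:
        flags += ["--device", node]
    if driver_lib_dir is not None:
        # Read-only bind mount of the host's user-mode driver libraries (hypothetical paths).
        flags += ["-v", f"{driver_lib_dir}:{mount_point}:ro"]
    return flags


print(legacy_device_flags([0]))
```

Keeping the driver on the host and mounting it at launch is what lets the image itself stay driver-agnostic.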

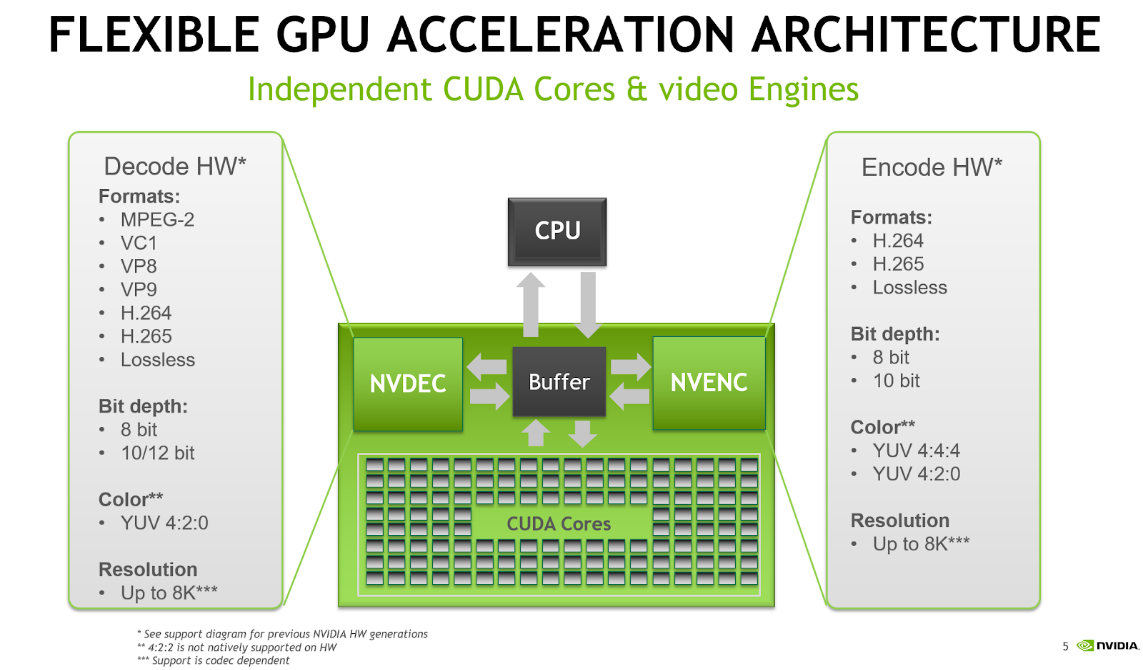

Product documentation, including an architecture overview, platform support, and installation and usage guides, can be found in the … I can use it with any Docker container. The NVIDIA Container Toolkit allows users to build and run GPU-accelerated Docker containers. The NVIDIA Docker container for machine learning includes the application and the machine learning framework, for example TensorFlow [5], but importantly it does not include the GPU driver or the CUDA toolkit.

This section guides you through exercises that show how to create a container from scratch, customize a container, and extend a deep learning framework. NVIDIA supports Docker containers with its own Docker engine utility, nvidia-docker [7], which is specialized to run applications that use NVIDIA GPUs. Now it's time to look at GPUs inside a Docker container. I'll go through the Docker NVIDIA GPU setup in a series of steps.

I now have access to a Docker NVIDIA runtime, which exposes my GPU inside a container.
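
A quick way to confirm which GPUs a container actually sees is nvidia-smi's CSV query mode. This sketch assumes you run the `QUERY` command inside the container and feed its output to `parse_gpu_query` (a hypothetical helper); the sample string is illustrative, not captured from a real machine.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class GpuInfo:
    index: int
    name: str
    memory_total: str  # kept as reported, e.g. "16160 MiB"


# Command to run inside the container, e.g. as the command of a `docker run --gpus all` call.
QUERY = ["nvidia-smi", "--query-gpu=index,name,memory.total", "--format=csv,noheader"]


def parse_gpu_query(output: str) -> list[GpuInfo]:
    """Turn rows like "0, <name>, <memory>" into GpuInfo records, skipping blank lines."""
    reader = csv.reader(io.StringIO(output), skipinitialspace=True)
    return [GpuInfo(int(row[0]), row[1], row[2]) for row in reader if row]


# Illustrative sample output only.
sample = "0, Tesla V100-SXM2-16GB, 16160 MiB\n1, Tesla V100-SXM2-16GB, 16160 MiB\n"
for gpu in parse_gpu_query(sample):
    print(gpu)
```

Using the `csv` module with `skipinitialspace=True` handles the space nvidia-smi places after each comma without hand-written string trimming.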