NVIDIA DGX A100 TFLOPS

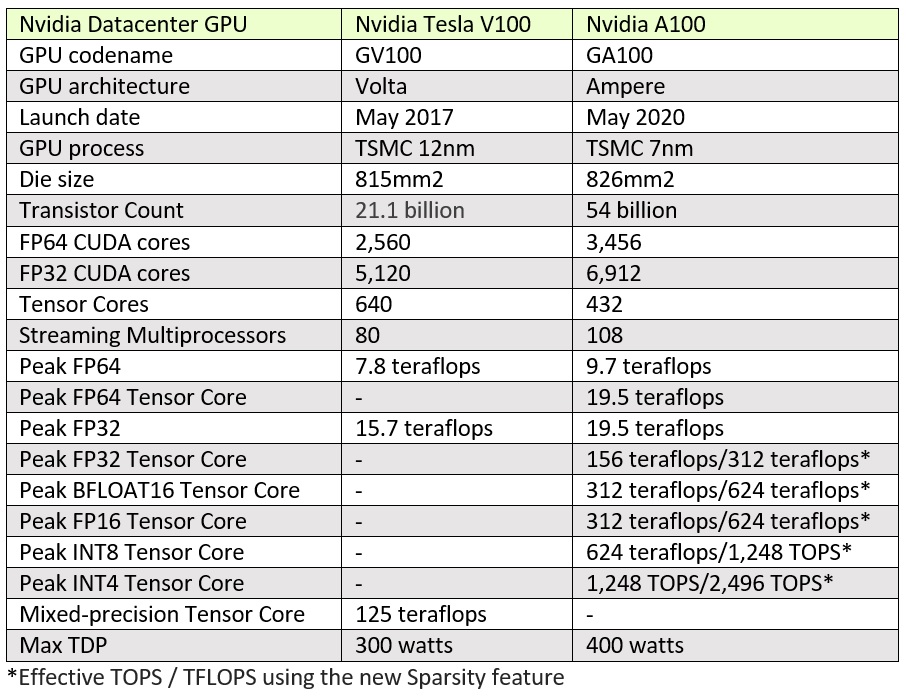

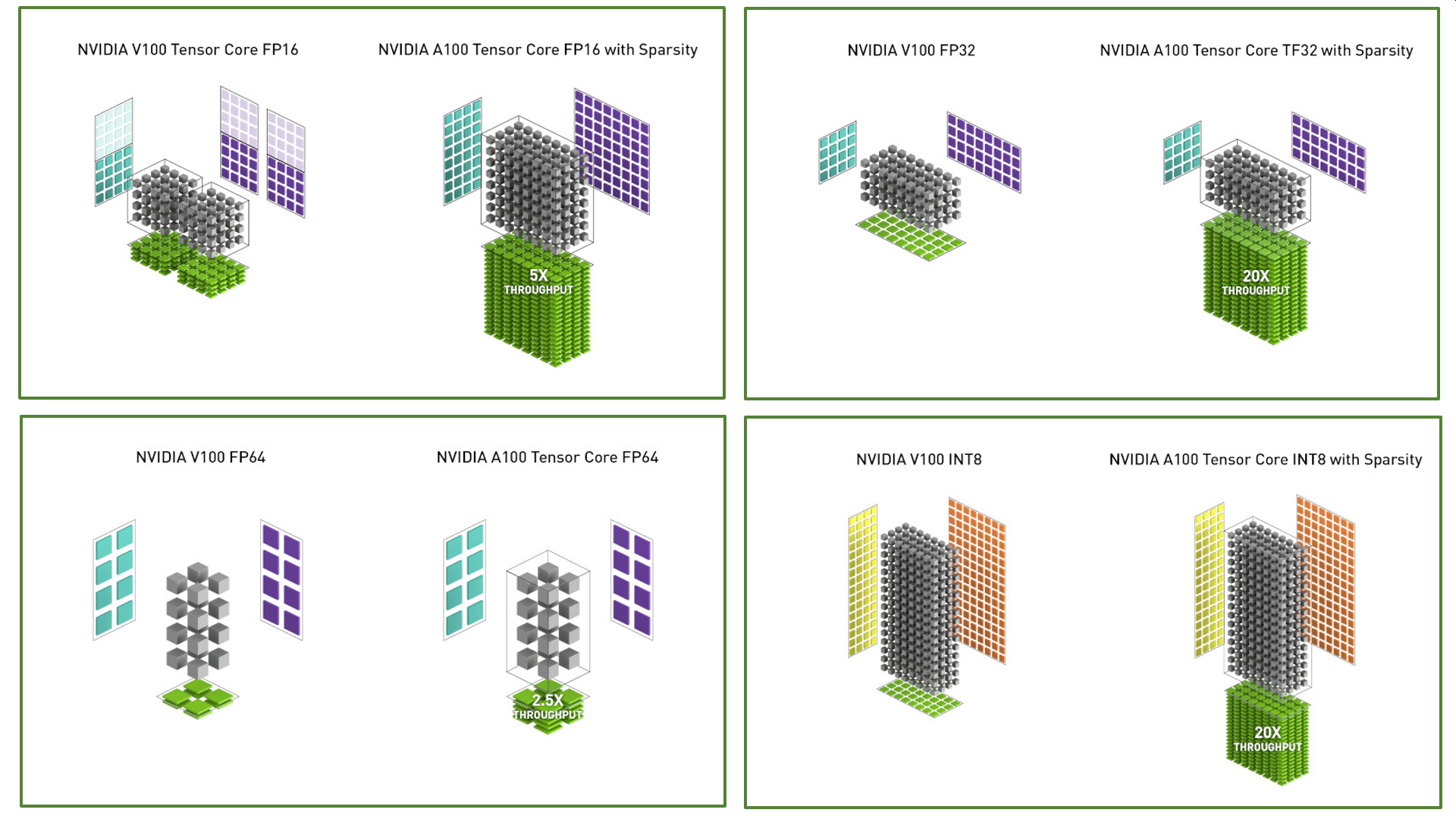

The A100 delivers 19.5 TFLOPS of double-precision performance (the FP64 Tensor Core figure), compared to only 7.8 TFLOPS in the V100, and it has more CUDA cores: 6,912 versus 5,120.
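To put the comparison in relative terms, here is a minimal sketch of the ratios implied by the figures quoted above. The variable names are illustrative, and the numbers are taken from the text rather than measured.

```python
# Figures as quoted in the text (peak values, not measurements).
a100_fp64_tflops = 19.5
v100_fp64_tflops = 7.8
a100_cuda_cores = 6912
v100_cuda_cores = 5120

# Relative improvement of the A100 over the V100.
fp64_speedup = a100_fp64_tflops / v100_fp64_tflops
core_ratio = a100_cuda_cores / v100_cuda_cores

print(f"Double-precision TFLOPS ratio: {fp64_speedup:.2f}x")  # 2.50x
print(f"CUDA core ratio: {core_ratio:.2f}x")                  # 1.35x
```

The throughput ratio is larger than the core-count ratio, so the quoted gain is not explained by the extra CUDA cores alone.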

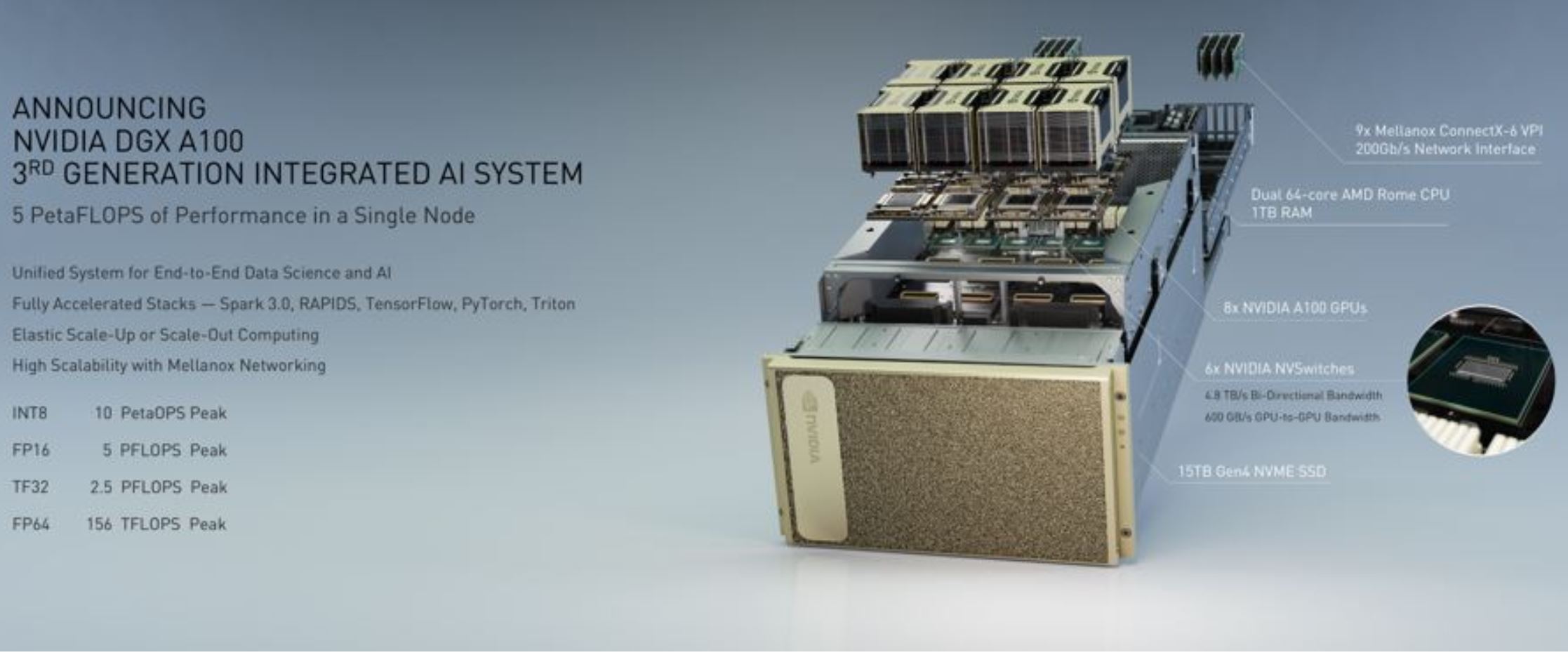

NVIDIA DGX A100 features the world's most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure. The server is the first generation of the DGX series to use AMD CPUs. Built on the A100 Tensor Core GPU, DGX A100 unifies data center AI infrastructure, adapting flexibly to training, inference, and analytics workloads.

The benchmark figures show up to 6x higher BERT-Large training performance for the A100 with TF32 compared to the V100 with FP32 (1x), and up to 7x higher BERT-Large inference throughput (in sequences per second) for the A100 with Multi-Instance GPU (MIG), compared to the V100 (1x) and the T4 (0.6x).

Alongside the NVIDIA Ampere architecture and the A100 GPU, NVIDIA also announced the new DGX A100 server. NVIDIA describes the DGX A100 as the universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility in the world's first 5 petaFLOPS AI system. Say hello to the NVIDIA DGX A100 SuperPOD. Each DGX A100 system combines eight A100 GPUs, two AMD EPYC CPUs, and PCIe Gen 4.

Each A100 GPU in the DGX A100 also has 40 GB of GPU memory, compared to 32 GB for the V100, a significant increase for training and inference. The A100 SuperPOD houses 140 DGX A100 systems, each with eight A100 GPUs (1,120 A100 GPUs in total). It was built in under three weeks and delivers 700 PFLOPS.
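The SuperPOD totals follow directly from the per-system figures. The sketch below reproduces that arithmetic, assuming the 700 PFLOPS total is simply the 5 PFLOPS per-system figure multiplied by the number of systems. The constant names are illustrative.

```python
# Per-system figures as quoted in the text.
DGX_SYSTEMS = 140
GPUS_PER_DGX = 8
PFLOPS_PER_DGX = 5      # "5 petaFLOPS AI system" (assumed basis for the total)
A100_MEMORY_GB = 40

# Aggregate figures for the whole SuperPOD.
total_gpus = DGX_SYSTEMS * GPUS_PER_DGX
total_pflops = DGX_SYSTEMS * PFLOPS_PER_DGX
gpu_memory_per_dgx_gb = GPUS_PER_DGX * A100_MEMORY_GB

print(total_gpus)             # 1120
print(total_pflops)           # 700
print(gpu_memory_per_dgx_gb)  # 320
```

Under the same assumption, each DGX A100 carries 320 GB of GPU memory across its eight A100s.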