NVIDIA DGX A100 Price

Also, the modularity enables the system to be installed and operational in as…

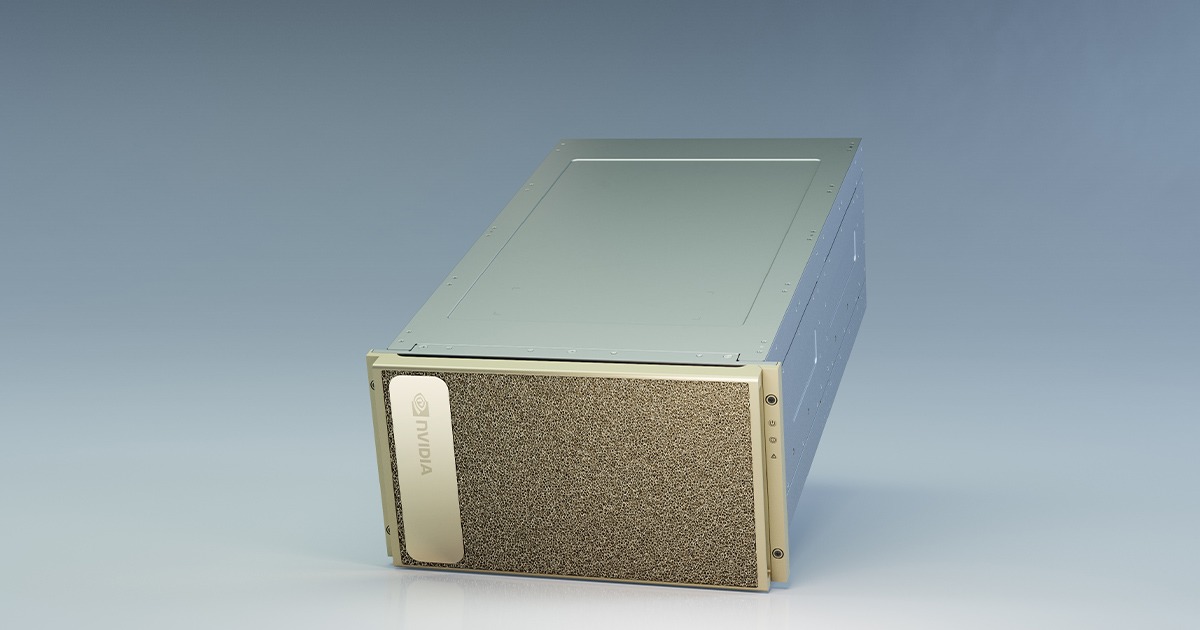

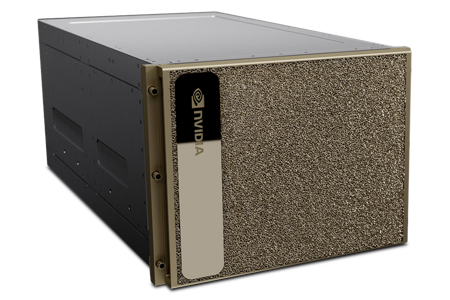

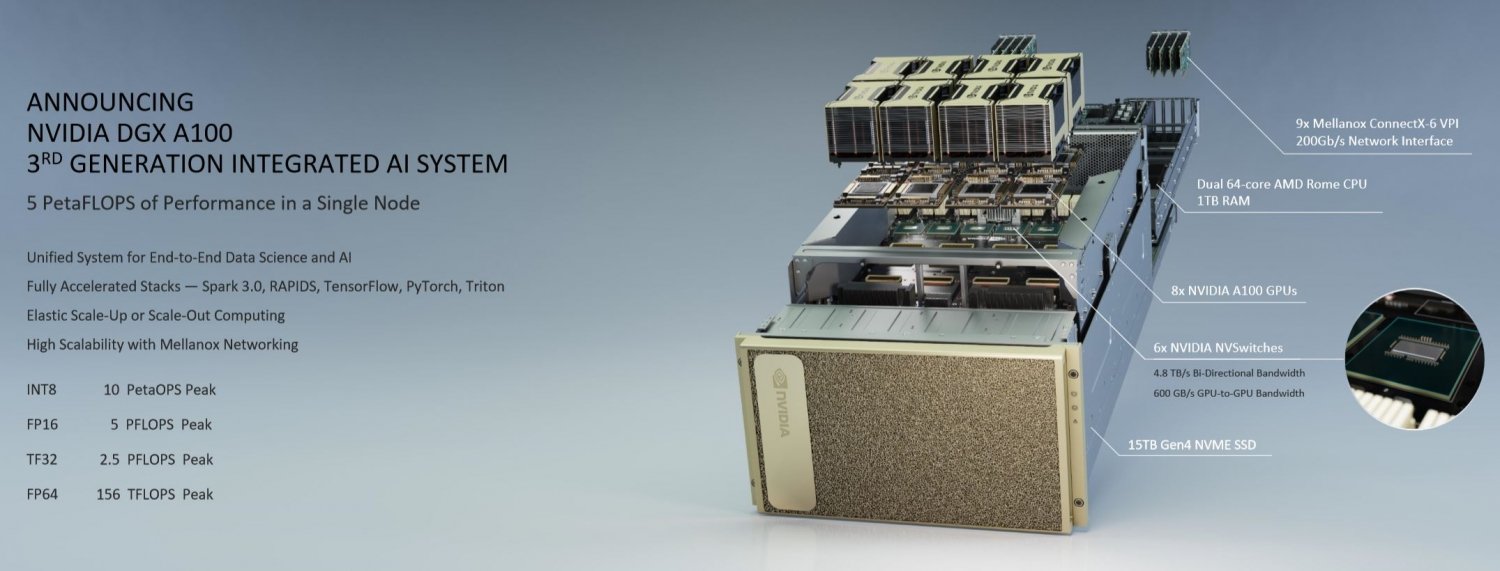

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. Costing $199,000, this isn't a system made for gamers. NVIDIA DGX A100 offers unprecedented compute density, performance, and flexibility.

As for the price, each DGX A100 will cost about $200,000. The system features the world's most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to NVIDIA AI experts and multi-layered built-in security. NVIDIA unveiled its Ampere graphics architecture and its most powerful computer yet. If the new Ampere-based A100 Tensor Core data center GPU is the component responsible for re-architecting the data center, NVIDIA's new DGX A100 AI supercomputer is the ideal enabler to revitalize data centers.

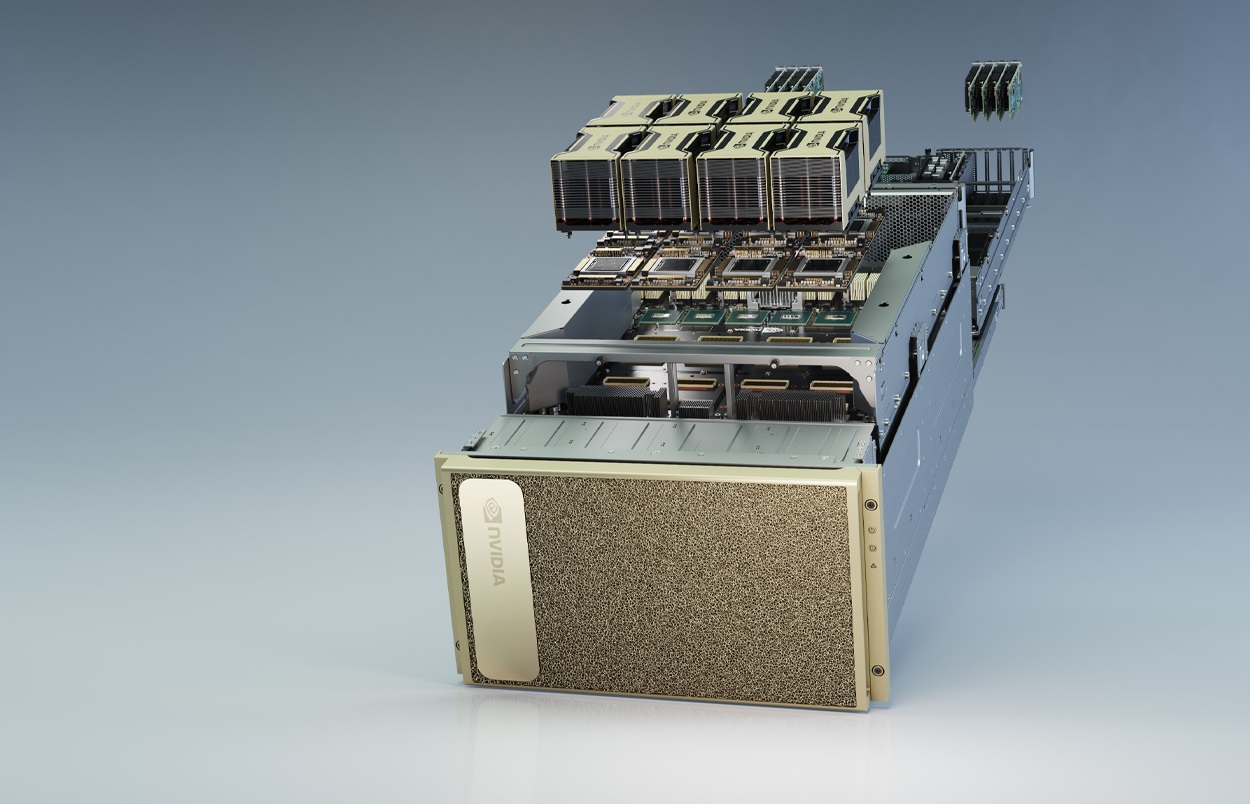

The A100 is being sold packaged in the DGX A100, a system with 8 A100 GPUs. Compared to the older V100, the pricing seems compelling for the computing power NVIDIA is giving its customers. For comparison, NVIDIA unveiled the 350 lb DGX-2 supercomputer, geared towards machine learning, with a $399,000 price tag in March 2018. The DGX A100's $199,000 price equates to a 33 percent generational price hike, but NVIDIA claims the A100 is 20 times faster at AI inference.
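The price comparison is easier to follow with the arithmetic written out. The sketch below uses only the two prices quoted in this article ($199,000 and $399,000) and the 33 percent figure. The function names are illustrative, and the "implied baseline" is a derived number, not a price the article states.

```python
def percent_change(old_price: float, new_price: float) -> float:
    """Return the relative change from old_price to new_price, in percent."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (new_price - old_price) / old_price * 100.0


def implied_baseline(new_price: float, hike_percent: float) -> float:
    """Return the earlier price implied by a given percentage hike."""
    return new_price / (1.0 + hike_percent / 100.0)


DGX_A100_PRICE = 199_000  # USD, as quoted in the article
DGX_2_PRICE = 399_000     # USD, as quoted in the article

# Change relative to the DGX-2 price tag (a cut, not a hike).
change_vs_dgx2 = percent_change(DGX_2_PRICE, DGX_A100_PRICE)

# Predecessor price that a 33 percent hike to $199,000 would imply.
baseline = implied_baseline(DGX_A100_PRICE, 33)

print(f"Change vs DGX-2: {change_vs_dgx2:.1f}%")
print(f"Implied baseline for a 33% hike: ${baseline:,.0f}")
```

Against the DGX-2 the new price is roughly half, so the 33 percent hike has to refer to a cheaper predecessor at about $149,600. The article does not name that system.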

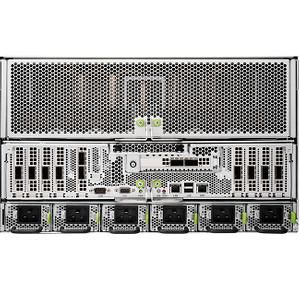

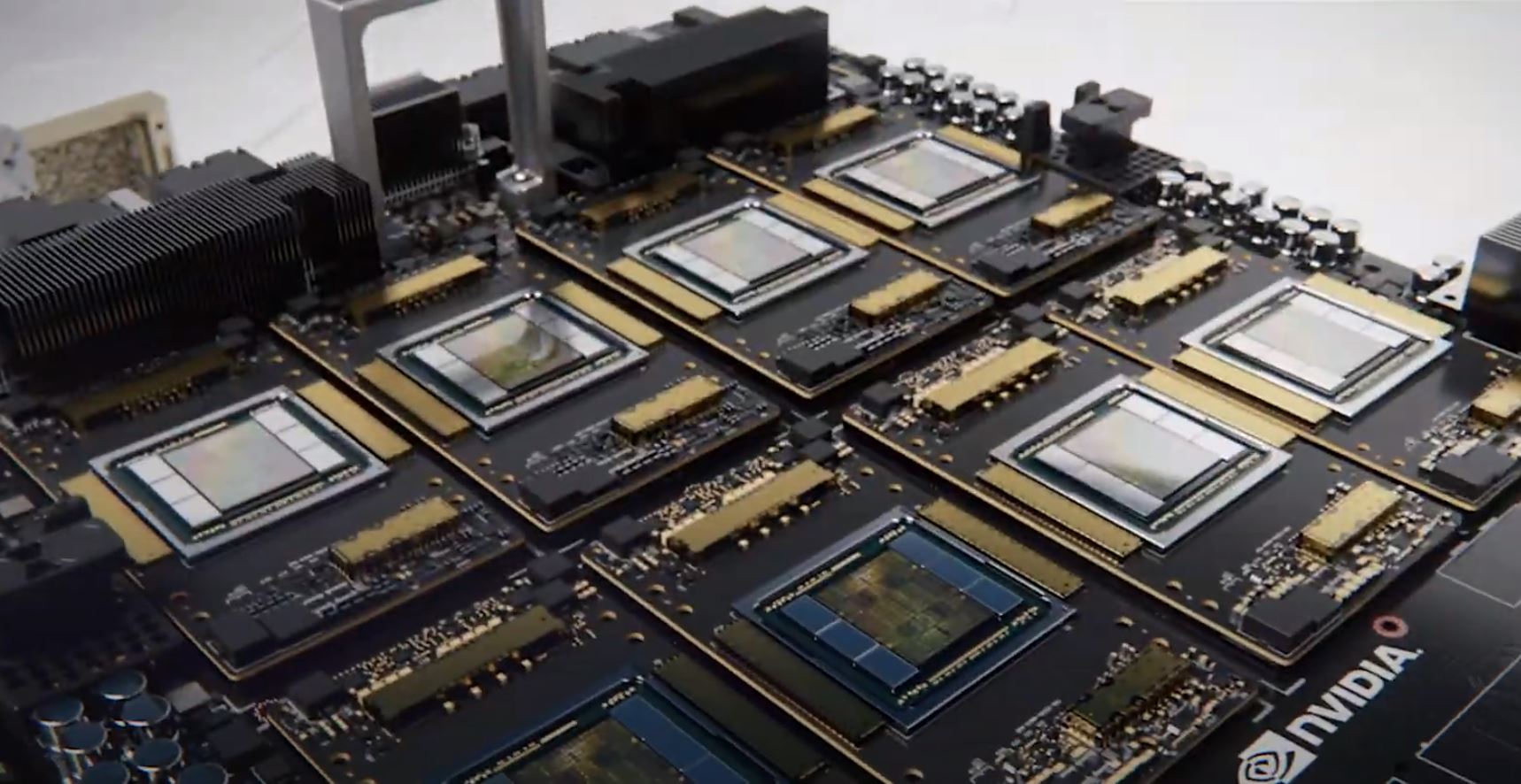

NVIDIA DGX A100 is the universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility in the world's first 5 petaflops AI system. The slide presented a bigger buyer's remorse. Each GPU now supports 12 NVIDIA NVLink bricks for up to 600 GB/s of total bandwidth. The quoted gains are up to 10x the training and 56x the inference performance per system. As the engine of the NVIDIA data center platform, the A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances.
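The per-GPU figures above can be broken down with a few lines of arithmetic. This is a rough sketch: the 12 links, 600 GB/s, and seven MIG instances come from the text, while the eight GPUs per system and all names in the code are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class A100Figures:
    nvlink_links: int = 12            # NVLink bricks per GPU (from the text)
    total_bandwidth_gb_s: int = 600   # total NVLink bandwidth per GPU (from the text)
    mig_instances: int = 7            # MIG partitions per GPU (from the text)


def per_link_bandwidth(gpu: A100Figures) -> float:
    """Bandwidth per NVLink brick, assuming the total is split evenly."""
    return gpu.total_bandwidth_gb_s / gpu.nvlink_links


def mig_instances_per_system(gpu: A100Figures, gpus_per_system: int = 8) -> int:
    """Maximum MIG instances in one system (8 GPUs per system is an assumption)."""
    return gpu.mig_instances * gpus_per_system


a100 = A100Figures()
print(f"Per-link bandwidth: {per_link_bandwidth(a100):.0f} GB/s")
print(f"MIG instances per system: {mig_instances_per_system(a100)}")
```

Under these assumptions each link carries 50 GB/s, and a fully partitioned eight-GPU system exposes 56 GPU instances.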

This article was first published on 15 May 2020. NVLink bandwidth has increased to 600 GB/s per NVIDIA A100 GPU. Inside, it will feature 80 modular NVIDIA DGX A100 systems connected by NVIDIA Mellanox InfiniBand networking.
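To get a feel for the scale of an 80-system installation, the totals can be estimated from figures quoted in this article. The sketch assumes eight GPUs per DGX A100, 5 petaflops of AI performance per system, and the $199,000 list price. It ignores networking, storage, and any volume discounts, so the cost is only a rough lower bound on hardware spend.

```python
SYSTEMS = 80                  # DGX A100 systems (from the text)
GPUS_PER_SYSTEM = 8           # assumption: eight A100 GPUs per DGX A100
PFLOPS_PER_SYSTEM = 5         # AI petaflops per system (from the text)
PRICE_PER_SYSTEM = 199_000    # USD list price (from the text)


def cluster_totals(systems: int, gpus_per_system: int,
                   pflops_per_system: float, price_per_system: int) -> dict:
    """Aggregate GPU count, AI petaflops, and list-price cost for a cluster."""
    return {
        "gpus": systems * gpus_per_system,
        "ai_petaflops": systems * pflops_per_system,
        "list_price_usd": systems * price_per_system,
    }


totals = cluster_totals(SYSTEMS, GPUS_PER_SYSTEM,
                        PFLOPS_PER_SYSTEM, PRICE_PER_SYSTEM)
print(f"GPUs: {totals['gpus']}")
print(f"AI petaflops: {totals['ai_petaflops']}")
print(f"List-price cost: ${totals['list_price_usd']:,}")
```

With these inputs the cluster holds 640 GPUs, reaches 400 AI petaflops on paper, and costs $15,920,000 in DGX systems alone.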