NVIDIA DGX A100 Datasheet

NVIDIA DGX A100 is the universal system for all AI workloads, from analytics to training to inference.

The system is built on eight NVIDIA A100 Tensor Core GPUs. It features the world's most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to NVIDIA AI experts and multi-layered, built-in security. As for NVIDIA DGX A100 spare parts, these are offered at the discretion of each NVIDIA distributor or NPN solution provider.

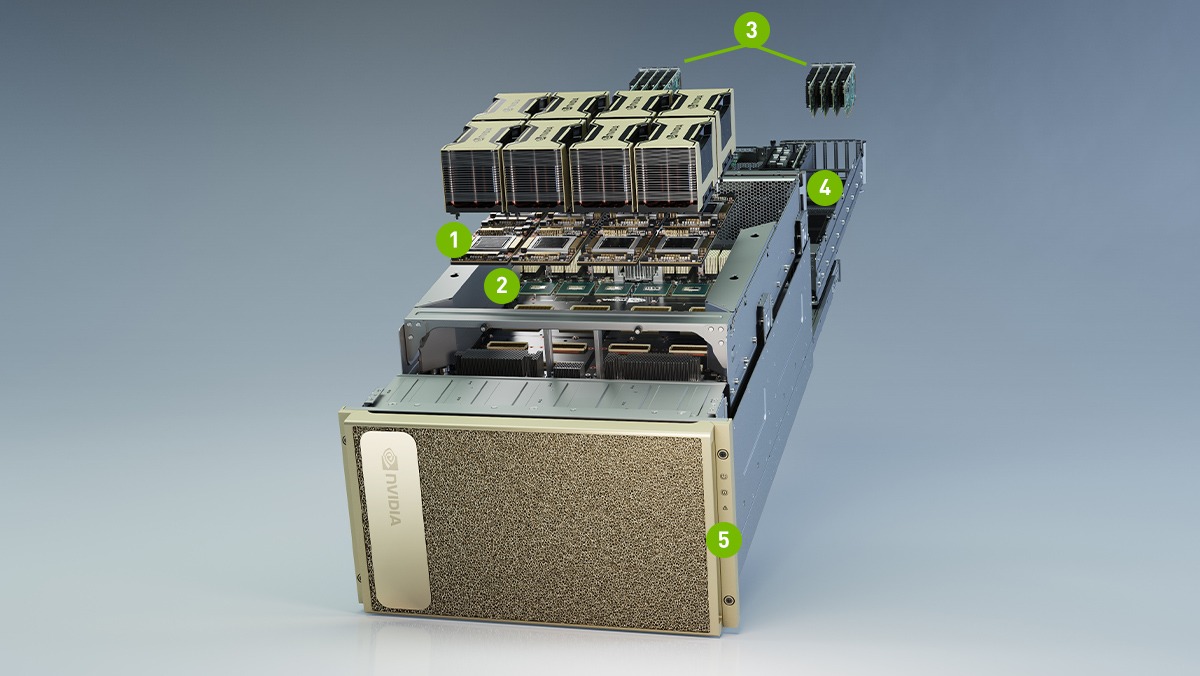

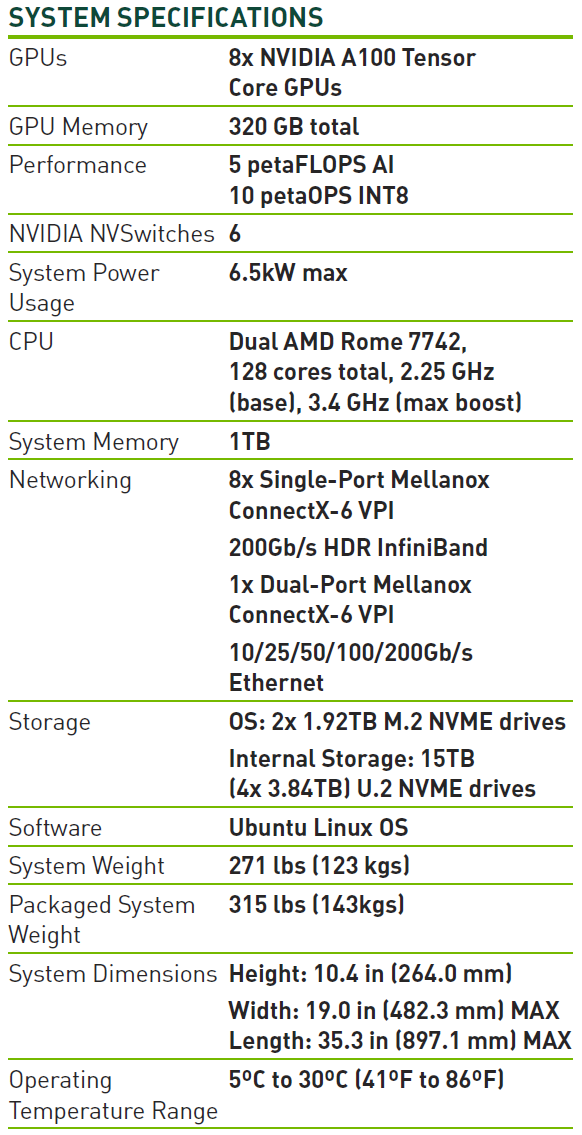

NVIDIA DGX A100 Data Sheet (May 2020): System Specifications

| Specification | Value |
|---|---|
| GPUs | 8x NVIDIA A100 Tensor Core GPUs |
| GPU memory | 320 GB total |
| Performance | 5 petaFLOPS AI; 10 petaOPS INT8 |
| NVIDIA NVSwitches | 6 |
| System power usage | 6.5 kW max |
| CPU | Dual AMD Rome 7742, 128 cores total, 2.25 GHz (base), 3.4 GHz (max boost) |
| System memory | 1 TB |
| Networking | 8x single-port Mellanox |

The NVIDIA DGX A100 system is the universal system purpose-built for all AI infrastructure and workloads, from analytics to training to inference. It features eight NVIDIA A100 Tensor Core GPUs, which deliver unmatched acceleration, and is fully optimized for NVIDIA CUDA-X software and the end-to-end NVIDIA data center solution stack. If the new Ampere-architecture-based A100 Tensor Core data center GPU is the component responsible for re-architecting the data center, NVIDIA's new DGX A100 AI supercomputer is the ideal enabler to revitalize data centers.
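Several per-component figures follow directly from the system totals in the specifications above. The sketch below is a hypothetical Python dataclass (`DgxA100Spec` and its property names are illustrative, not an NVIDIA API). It assumes the totals split evenly across the eight GPUs and the two CPU sockets, and its performance-per-kilowatt ratio uses the 6.5 kW maximum rating, so treat that number as a rough ratio rather than a measured efficiency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxA100Spec:
    """Headline totals from the DGX A100 data sheet (May 2020)."""
    gpus: int = 8
    gpu_memory_gb: int = 320      # total GPU memory across all GPUs
    ai_petaflops: float = 5.0     # total AI performance
    int8_petaops: float = 10.0    # total INT8 performance
    max_power_kw: float = 6.5     # maximum system power usage
    cpu_sockets: int = 2          # dual AMD Rome 7742
    cpu_cores: int = 128          # total across both sockets

    # Assumption: totals are divided evenly across GPUs / sockets.
    @property
    def memory_per_gpu_gb(self) -> float:
        return self.gpu_memory_gb / self.gpus

    @property
    def ai_petaflops_per_gpu(self) -> float:
        return self.ai_petaflops / self.gpus

    @property
    def cores_per_socket(self) -> int:
        return self.cpu_cores // self.cpu_sockets

    # Crude ratio against the *maximum* power rating, not measured draw.
    @property
    def ai_petaflops_per_kw(self) -> float:
        return self.ai_petaflops / self.max_power_kw


spec = DgxA100Spec()
print(spec.memory_per_gpu_gb)               # 40.0
print(spec.ai_petaflops_per_gpu)            # 0.625
print(spec.cores_per_socket)                # 64
print(round(spec.ai_petaflops_per_kw, 2))   # 0.77
```

A frozen dataclass keeps the quoted totals immutable while letting derived values be computed on demand instead of being stored and kept in sync by hand.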

NVIDIA DGX A100 customers can purchase DGX A100 media retention services from DGX A100 distributors and authorized NVIDIA Partner Network (NPN) solution providers. DGX A100 sets a new bar for compute density, packing 5 petaFLOPS of AI performance into a 6U form factor and replacing legacy compute infrastructure with a single, unified system. This article was first published on 15 May 2020. NVIDIA's DGX A100 supercomputer is the ultimate instrument to advance AI and fight COVID-19.
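The compute-density claim reduces to a single division of AI performance by chassis height. A minimal Python sketch, where the helper name `petaflops_per_rack_unit` is chosen here for illustration; the resulting per-U figure is a derived ratio, not one NVIDIA quotes.

```python
def petaflops_per_rack_unit(petaflops: float, rack_units: int) -> float:
    """Return AI performance per rack unit (U) of chassis height."""
    if rack_units <= 0:
        raise ValueError("rack_units must be positive")
    return petaflops / rack_units


# DGX A100 figures from the text: 5 petaFLOPS of AI performance in a 6U chassis.
density = petaflops_per_rack_unit(5.0, 6)
print(f"{density:.3f} PF/U")  # 0.833 PF/U
```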

NVIDIA DGX A100 offers unprecedented compute density, performance, and flexibility. However, please note that NVIDIA media retention services are offered at the discretion of each NVIDIA distributor or NPN solution provider. A100 is part of the complete NVIDIA data center solution, which incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale.

Figure: BERT-Large training, NVIDIA A100 (TF32) 6x vs. NVIDIA V100 (FP32) 1x.

Figure: Up to 7x higher performance with Multi-Instance GPU (MIG) for AI inference. BERT-Large inference (sequences/second): NVIDIA A100 7x, NVIDIA V100 1x, NVIDIA T4 0.6x.
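Because the benchmark figures above are normalized to NVIDIA V100 = 1x, any two entries can be compared by dividing their factors. The Python sketch below does that; the dictionary and function names are illustrative, and the results are only as meaningful as NVIDIA's published relative figures (they are not independent measurements). It also assumes, from NVIDIA's A100 specifications rather than this excerpt, that each A100 can be partitioned into up to seven MIG instances.

```python
from typing import Mapping

# Relative BERT-Large throughput, normalized to NVIDIA V100 = 1x (from the figures above).
INFERENCE_SPEEDUP = {"NVIDIA A100 (MIG)": 7.0, "NVIDIA V100": 1.0, "NVIDIA T4": 0.6}
TRAINING_SPEEDUP = {"NVIDIA A100 (TF32)": 6.0, "NVIDIA V100 (FP32)": 1.0}


def relative(speedups: Mapping[str, float], target: str, baseline: str) -> float:
    """How many times faster `target` is than `baseline` on the same normalized scale."""
    return speedups[target] / speedups[baseline]


a100_vs_t4 = relative(INFERENCE_SPEEDUP, "NVIDIA A100 (MIG)", "NVIDIA T4")
print(f"A100 vs. T4 inference: {a100_vs_t4:.1f}x")  # A100 vs. T4 inference: 11.7x

# Assumption: up to seven MIG instances per A100 (NVIDIA A100 spec, not stated above),
# so an 8-GPU DGX A100 could expose up to 8 * 7 independent GPU instances.
MIG_INSTANCES_PER_A100 = 7
print(8 * MIG_INSTANCES_PER_A100)  # 56
```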