NVIDIA DGX A100 CUDA Cores

The NVIDIA DGX A100 system is the universal system purpose-built for all AI infrastructure and workloads, from analytics to training to inference.

The GPU in the Tesla A100 is clearly not the full chip. For the complete documentation, see the PDF NVIDIA DGX A100 System User Guide. The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. For more information about the new CUDA features, see the upcoming NVIDIA A100 Tensor Core GPU Architecture whitepaper.

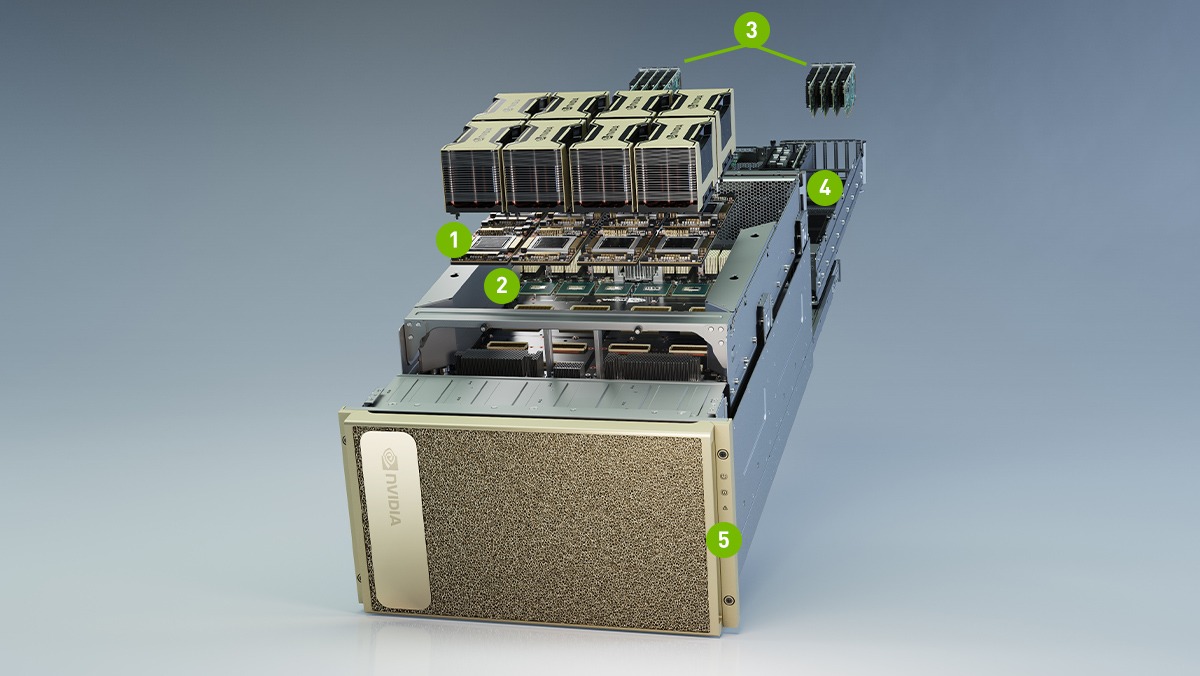

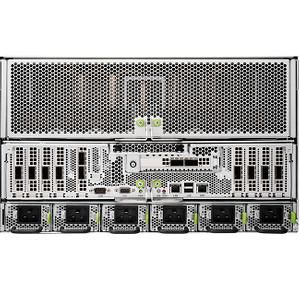

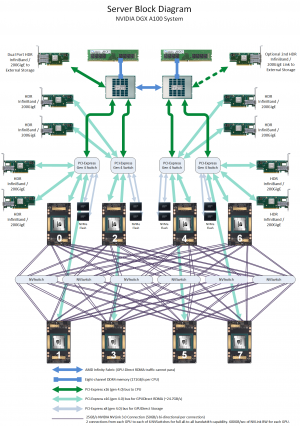

NVIDIA has revealed its Tesla A100 graphics accelerator, and it is a monster. Integrating eight A100 GPUs, the DGX A100 system provides unprecedented acceleration and is fully optimized for NVIDIA CUDA-X software and the end-to-end NVIDIA data center solution stack. CUTLASS, the CUDA C++ template abstractions for high-performance GEMM, supports all the various precision modes offered by the A100. The Tesla A100 card is built on the 7 nm Ampere GA100 GPU, with 6,912 CUDA cores and 432 Tensor Cores.

NVIDIA DGX A100 is the world's first AI system built on the NVIDIA A100 Tensor Core GPU. NVIDIA is boosting its Tensor Cores to make them easier for developers to use, and the A100 will also include 19.5 teraflops of FP32 performance, 6,912 CUDA cores, 40 GB of memory, and 1.6 TB/s of … Right now we know that the Tesla A100's 6,912 CUDA cores can perform FP64 calculations at half the FP32 rate. For more information about the new DGX A100 system, see "Defining AI Innovation with NVIDIA DGX A100".
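The FP32 figure above can be sanity-checked from the core count. The following is a minimal sketch, assuming each CUDA core completes one fused multiply-add (counted as 2 floating-point operations) per clock and a boost clock of roughly 1.41 GHz. The clock value is an assumption that does not come from this article, and the function name `peak_tflops` is illustrative.

```python
# Rough peak-throughput estimate from core count and clock speed.
CUDA_CORES = 6912                 # quoted in the article
ASSUMED_BOOST_CLOCK_HZ = 1.41e9   # assumption: not stated in the article
FLOPS_PER_CORE_PER_CLOCK = 2      # assumption: one fused multiply-add = 2 FLOPs


def peak_tflops(cores, clock_hz, flops_per_clock=FLOPS_PER_CORE_PER_CLOCK):
    """Theoretical peak in TFLOPS; real workloads will land below this."""
    return cores * clock_hz * flops_per_clock / 1e12


fp32_tflops = peak_tflops(CUDA_CORES, ASSUMED_BOOST_CLOCK_HZ)
fp64_tflops = fp32_tflops / 2     # "half rate" FP64, as described above

print(f"FP32 peak: {fp32_tflops:.1f} TFLOPS")              # FP32 peak: 19.5 TFLOPS
print(f"FP64 peak (half rate): {fp64_tflops:.1f} TFLOPS")  # FP64 peak (half rate): 9.7 TFLOPS
```

Under these assumptions the estimate lands on the quoted 19.5 TFLOPS, which suggests the FP32 number is a theoretical peak rather than a measured result.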

The GPU is divided into 108 streaming multiprocessors (SMs). With CUDA 11, CUTLASS now achieves more than 95% performance parity with cuBLAS. The GPU has a die size of 826 mm² and 54 billion transistors. More than a server, DGX A100 is the foundational building block of AI infrastructure and part of the NVIDIA end-to-end data center solution, created from over a decade of AI leadership by NVIDIA.
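The SM count, core counts, and die figures quoted in this article imply a per-SM layout and a transistor density. A small sketch of that arithmetic, using only numbers from the text (the variable names are illustrative):

```python
# Per-SM breakdown and transistor density from the figures quoted in the article.
CUDA_CORES = 6912
TENSOR_CORES = 432
STREAMING_MULTIPROCESSORS = 108
DIE_AREA_MM2 = 826
TRANSISTORS = 54e9

# divmod returns (quotient, remainder); a zero remainder means an even split.
cuda_cores_per_sm, cuda_rem = divmod(CUDA_CORES, STREAMING_MULTIPROCESSORS)
tensor_cores_per_sm, tensor_rem = divmod(TENSOR_CORES, STREAMING_MULTIPROCESSORS)

density_millions_per_mm2 = TRANSISTORS / DIE_AREA_MM2 / 1e6

print(f"CUDA cores per SM:   {cuda_cores_per_sm} (remainder {cuda_rem})")
print(f"Tensor cores per SM: {tensor_cores_per_sm} (remainder {tensor_rem})")
print(f"Transistor density:  {density_millions_per_mm2:.1f} million per mm^2")
```

Both core counts divide evenly across the 108 SMs, giving 64 CUDA cores and 4 Tensor Cores per SM.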

As the engine of the NVIDIA data center platform, the A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances to … Each A100 in the DGX A100 also has 40 GB of GPU memory, compared to 32 GB for the V100, a significant increase for training and inference. Thanks to CRN, we have detailed specifications for NVIDIA's Tesla A100 silicon, complete with CUDA core counts, die size, and more. The A100 packs 19.5 TFLOPS of double-precision performance when using its FP64 Tensor Cores (half that on the standard FP64 units), compared to only 7.8 TFLOPS in the V100, as well as more CUDA cores: 6,912 versus 5,120.
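To put the A100 and V100 numbers side by side, the generation-over-generation ratios can be computed directly. This is a sketch that uses only the figures quoted in the paragraph above; the dictionary keys are illustrative names, and the double-precision value is taken as quoted.

```python
# A100 vs. V100, using only the numbers quoted in the paragraph above.
a100 = {"cuda_cores": 6912, "memory_gb": 40, "fp64_tflops": 19.5}
v100 = {"cuda_cores": 5120, "memory_gb": 32, "fp64_tflops": 7.8}

# Ratio of each A100 figure to the matching V100 figure.
ratios = {key: a100[key] / v100[key] for key in a100}

for key, ratio in ratios.items():
    print(f"{key}: {ratio:.2f}x")
```

On these figures the core count grows by about a third and memory by a quarter, while the quoted double-precision throughput grows 2.5 times, so the FP64 gain comes from more than the extra cores alone.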

The system is built on eight NVIDIA A100 Tensor Core GPUs.
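Because the system combines eight GPUs, the per-GPU figures quoted in this article can be multiplied out into system-level totals. A minimal sketch with illustrative names follows. It is simple multiplication and says nothing about how well a given workload scales across the GPUs.

```python
# System-level totals for a DGX A100, from per-GPU figures quoted in the article.
GPUS_PER_SYSTEM = 8
MIG_INSTANCES_PER_GPU = 7   # "partitioned into seven GPU instances"

per_gpu = {"cuda_cores": 6912, "tensor_cores": 432, "memory_gb": 40}

system_totals = {name: value * GPUS_PER_SYSTEM for name, value in per_gpu.items()}
max_mig_instances = GPUS_PER_SYSTEM * MIG_INSTANCES_PER_GPU

for name, total in system_totals.items():
    print(f"{name}: {total}")
print(f"max MIG instances: {max_mig_instances}")
```

This gives 55,296 CUDA cores, 3,456 Tensor Cores, and 320 GB of GPU memory per system, with up to 56 MIG instances if every GPU is fully partitioned.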