Nvidia Ampere Inference

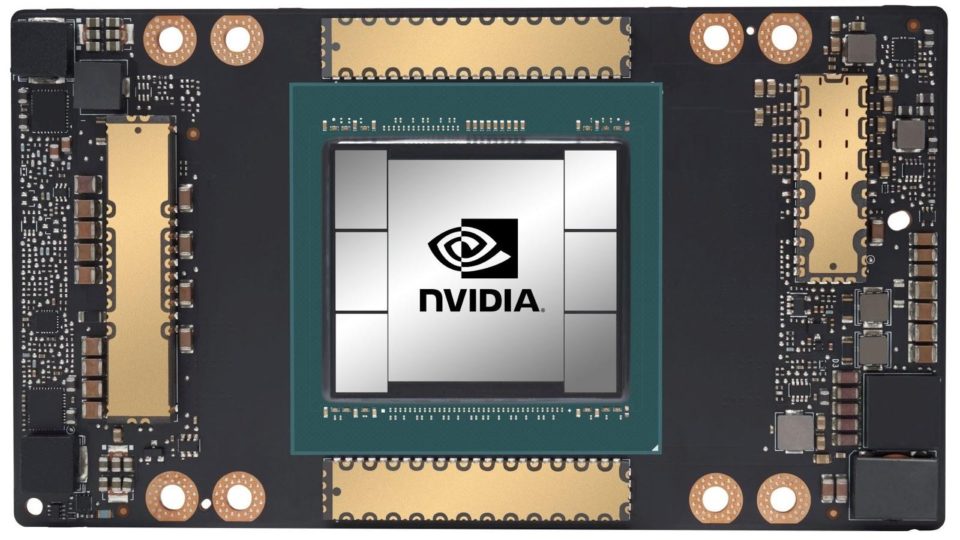

Here we see the full 200 TOPS of inference performance that Nvidia had.

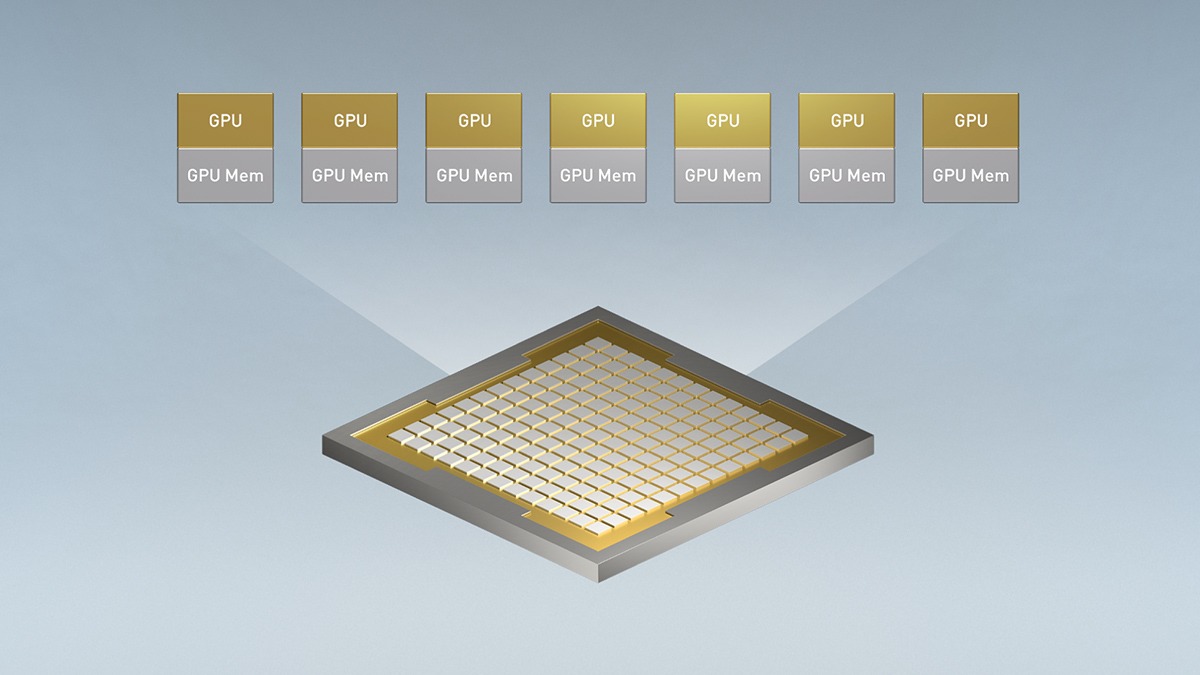

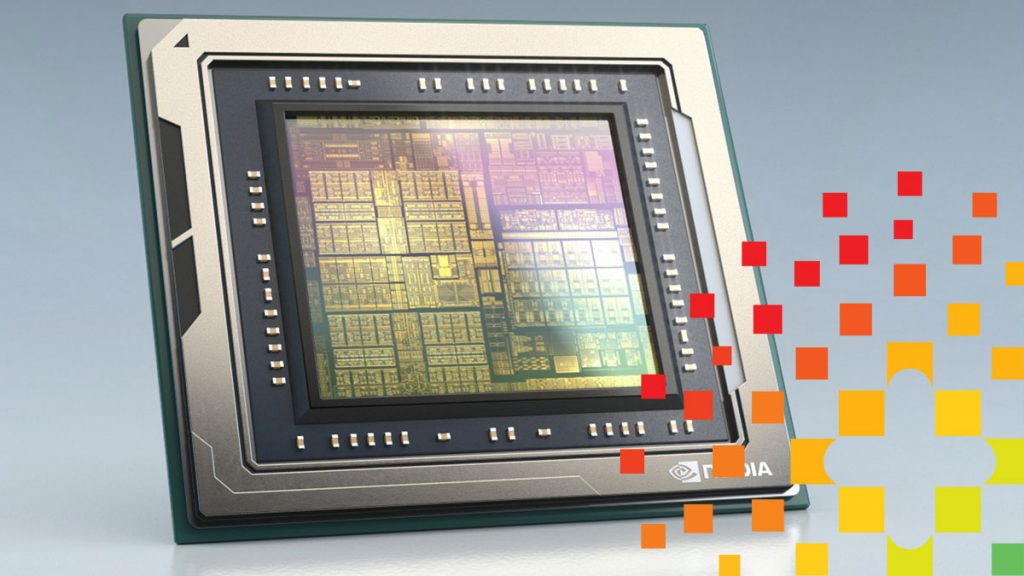

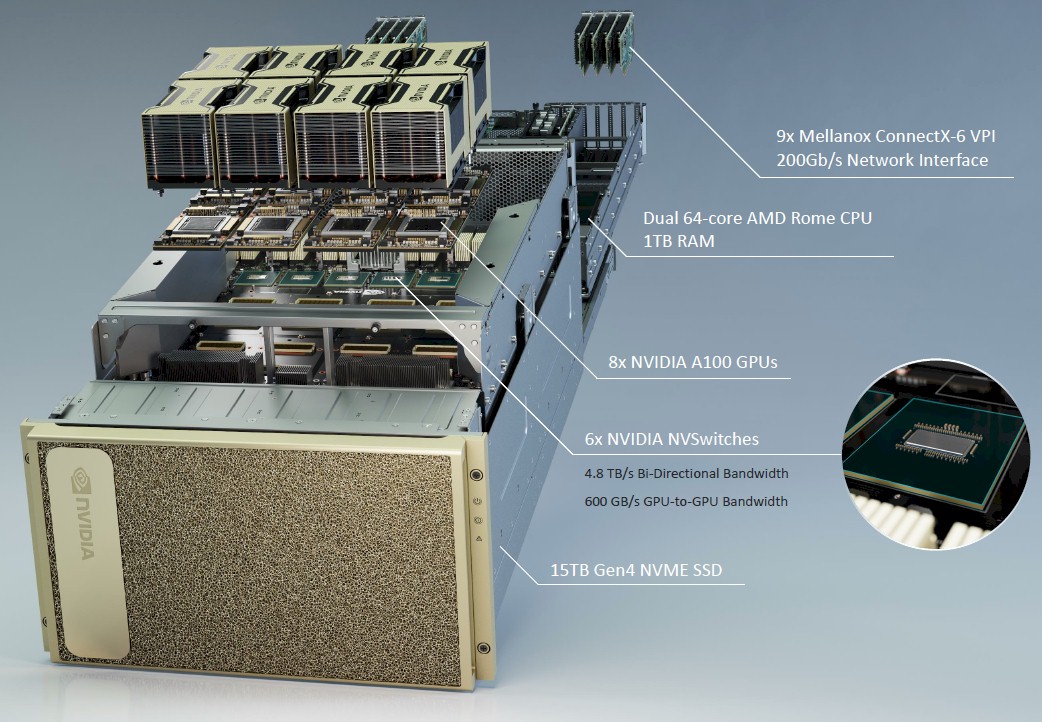

Nvidia Ampere architecture GPUs and the advances in the CUDA programming model accelerate program execution and lower the latency and overhead of many operations. First introduced in the Nvidia Volta architecture, Nvidia Tensor Core technology has brought dramatic speedups to AI, bringing training times down from weeks to hours and providing massive acceleration to inference. Ampere architecture GPUs are designed to improve GPU programmability and performance while also reducing software complexity. Nvidia's new Ampere data center GPU, the A100, unifies training and inference in one architecture that can outperform the chipmaker's V100 and T4 several times over.

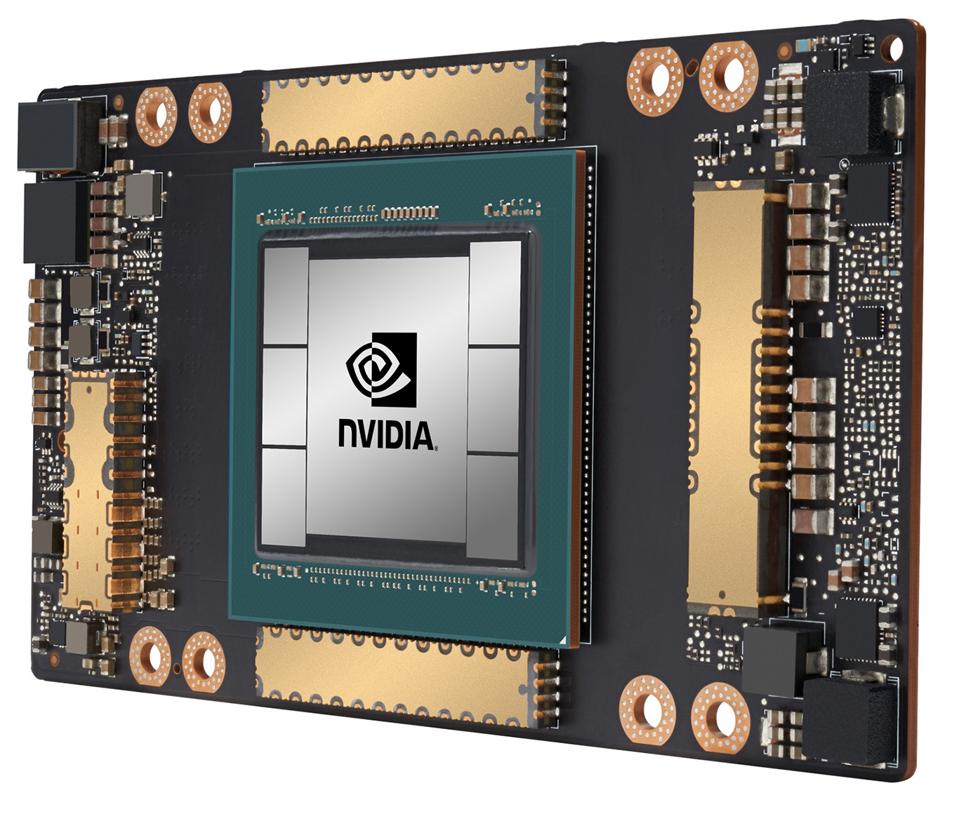

Specifically, it defines a method for training a neural network with half its weights removed, known as 50 percent sparsity. The T4 will live on. Judging by its size and form factor, with HBM memory, this is seemingly the newest GA100 Ampere GPU. The Nvidia Ampere architecture takes advantage of the prevalence of small values in neural networks in a way that benefits the widest possible swath of AI applications.
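To make the 50 percent sparsity idea concrete, here is a minimal sketch of magnitude-based pruning in plain Python. It assumes the 2:4 structured pattern (keep the two largest-magnitude values in every group of four), which goes beyond what the text above states. The function names `prune_2_of_4` and `sparsity` are hypothetical, and the sketch covers only the pruning step, not the retraining that would normally follow.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four.

    Assumption: a 2:4 structured pattern; the text only says "50 percent".
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def sparsity(weights):
    """Fraction of entries that are exactly zero."""
    return sum(1 for w in weights if w == 0) / len(weights)


# Illustrative values only, not taken from any real model.
weights = [0.9, -0.05, 0.02, -0.7, 0.01, 0.3, -0.4, 0.003]
pruned = prune_2_of_4(weights)
print(pruned)
print(sparsity(pruned))
```

Because small values are common in trained networks, dropping the two smallest entries per group tends to remove weights that contribute least, which is the property the paragraph above refers to.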

The most drastic change is the increase in inference performance of the A100 compared to the V100. Nvidia is helping professionals address these challenges and tackle enterprise workloads from the desktop to the data center with the new Nvidia RTX A6000 and Nvidia A40. The performance boost is largely for inference. Nvidia disclosed that this.