Nvidia A100 Wiki

Launch: the date of release for the processor.

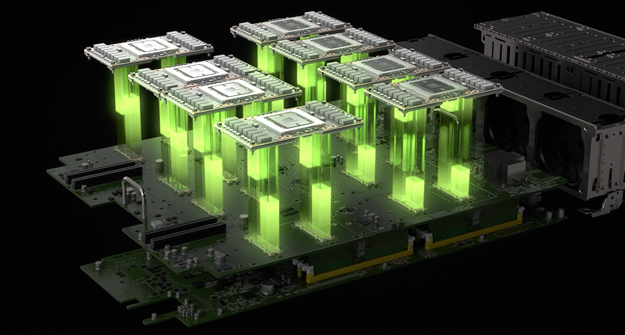

The new 4U GPU system features the Nvidia HGX A100 8-GPU baseboard, up to six NVMe U.2 and two NVMe M.2 drives, and 10 PCIe 4.0 x16 I/O slots, with Supermicro's unique AIOM support invigorating the 8-GPU communication and data flow between systems through the latest technology stacks. The V100 offered 6 NVLinks for a total bandwidth of 300 GB/s. Nvidia was founded on April 5, 1993, by Jensen Huang (CEO as of 2020), a Taiwanese American who was previously director of CoreWare at LSI Logic and a microprocessor designer at Advanced Micro Devices (AMD); Chris Malachowsky, an electrical engineer who worked at Sun Microsystems; and Curtis Priem, previously a senior staff engineer and graphics chip designer at Sun Microsystems.

The A100's die size is 826 square millimeters, which is larger than the V100's 815 mm². The Nvidia AI platform is delivered using the power of the Nvidia A100 Tensor Core GPU, the Nvidia T4 Tensor Core GPU, and the scalability and flexibility of Nvidia interconnect technologies: NVLink, NVSwitch, and the Mellanox ConnectX-6 VPI. Nvidia EGX is a cloud-native platform. Nvidia was a little hazy on the finer details of Ampere, but what we do know is that the A100 GPU is huge.

Nvidia DGX-2 is a 2-petaFLOPS system that integrates 16 Nvidia V100 Tensor Core GPUs, while Nvidia DGX A100 is equipped with 8 Nvidia A100 Tensor Core GPUs. Code name: the internal engineering codename for the processor, typically designated by an NVXY name and later GXY, where X is the series number and Y is the schedule of the project. For A100 in particular, Nvidia has used the gains from these smaller NVLinks to double the number of NVLinks available on the GPU. The A100 accelerator was initially available only in the 3rd generation of DGX server, which includes 8 A100s.
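The NVLink figures mentioned in this article lend themselves to a quick back-of-the-envelope check. The sketch below derives the per-link bandwidth from the V100 numbers (6 links, 300 GB/s in total) and then estimates the A100 total after the link count is doubled. It assumes that per-link bandwidth is unchanged between the two generations, which the text does not state, and the function names are illustrative only.

```python
def per_link_bandwidth(total_gb_s: float, links: int) -> float:
    """Return the bandwidth of one link in GB/s, given the total and link count."""
    if links <= 0:
        raise ValueError("links must be positive")
    return total_gb_s / links


def total_bandwidth(per_link_gb_s: float, links: int) -> float:
    """Return the aggregate bandwidth in GB/s across all links."""
    return per_link_gb_s * links


# Figures taken from the article text.
V100_LINKS = 6
V100_TOTAL_GB_S = 300.0

v100_per_link = per_link_bandwidth(V100_TOTAL_GB_S, V100_LINKS)

# The article says the A100 doubles the number of NVLinks.
a100_links = 2 * V100_LINKS

# ASSUMPTION: each A100 link carries the same bandwidth as a V100 link.
a100_total_estimate = total_bandwidth(v100_per_link, a100_links)

print(f"V100 per-link bandwidth: {v100_per_link:.0f} GB/s")
print(f"A100 links: {a100_links}")
print(f"A100 estimated total: {a100_total_estimate:.0f} GB/s")
```

Under that assumption, doubling the link count doubles the aggregate bandwidth. If the per-link rate differs on A100, only the `v100_per_link` argument passed to `total_bandwidth` needs to change.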

Model: the marketing name for the processor, assigned by Nvidia. The A100 is ideal for large-scale deep learning training and neural network model applications. "Nvidia A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference," said Nvidia founder and CEO Jensen Huang. The Ampere-based A100 accelerator was announced and released on May 14, 2020.

NGC is a hub for GPU-optimized deep learning, machine learning, and high-performance computing (HPC) software. Nvidia Jetson is an embedded system used in the field of autonomous machines. These are at the heart of the Nvidia DGX A100, the engine behind our benchmark performance. The Nvidia A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. The A100 features 19.5 teraflops of FP32 performance, 6912 CUDA cores, 40 GB of graphics memory, and 1.6 TB/s of graphics memory bandwidth.
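As a rough sanity check on the headline specifications, the sketch below derives two figures from them: the clock speed implied by the FP32 throughput and core count, and the ratio of compute throughput to memory bandwidth. It assumes each CUDA core retires one fused multiply-add (counted as 2 floating-point operations) per cycle; that factor and the function names are assumptions for illustration, not something stated in the text.

```python
# Figures taken from the article text.
FP32_TFLOPS = 19.5          # peak FP32 throughput, in teraflops
CUDA_CORES = 6912           # number of CUDA cores
MEMORY_BW_TB_S = 1.6        # memory bandwidth, in TB/s

# ASSUMPTION: one fused multiply-add per core per cycle, counted as 2 FLOPs.
FLOPS_PER_CORE_PER_CYCLE = 2


def implied_clock_ghz(tflops: float, cores: int, flops_per_cycle: int) -> float:
    """Return the clock speed (GHz) needed to reach the given peak throughput."""
    flops = tflops * 1e12
    cycles_per_second = flops / (cores * flops_per_cycle)
    return cycles_per_second / 1e9


def flops_per_byte(tflops: float, bandwidth_tb_s: float) -> float:
    """Return peak FLOPs available per byte of memory traffic."""
    # Both values share the tera prefix, so it cancels out.
    return tflops / bandwidth_tb_s


clock_ghz = implied_clock_ghz(FP32_TFLOPS, CUDA_CORES, FLOPS_PER_CORE_PER_CYCLE)
intensity = flops_per_byte(FP32_TFLOPS, MEMORY_BW_TB_S)

print(f"Implied clock: {clock_ghz:.2f} GHz")
print(f"Peak FP32 FLOPs per byte of memory bandwidth: {intensity:.1f}")
```

With these inputs the implied clock comes out at roughly 1.41 GHz, and the GPU can perform about 12 FP32 operations for every byte it reads from memory. A kernel that does fewer operations per byte than that is likely limited by memory bandwidth rather than by compute.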