Nvidia A100 Reddit

The third generation of NVIDIA NVLink in the A100 doubles the GPU-to-GPU direct bandwidth to 600 gigabytes per second (GB/s), almost 10x higher than PCIe Gen4.
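The "almost 10x" figure can be checked with back-of-the-envelope arithmetic. The Python sketch below assumes 12 NVLink links at 50 GB/s each (both directions combined) and a PCIe Gen4 x16 slot running at 16 GT/s per lane with 128b/130b encoding, also counting both directions. It ignores protocol overhead beyond line encoding, so treat the ratio as an approximation rather than a measured result.

```python
# Rough comparison of A100 NVLink bandwidth with a PCIe Gen4 x16 link.
# Assumptions: 12 NVLink links at 50 GB/s each (bidirectional total),
# PCIe Gen4 at 16 GT/s per lane, 16 lanes, 128b/130b line encoding.

NVLINK_LINKS = 12
NVLINK_GBPS_PER_LINK = 50.0  # GB/s per link, both directions combined


def nvlink_total_gbps(links: int = NVLINK_LINKS,
                      per_link: float = NVLINK_GBPS_PER_LINK) -> float:
    """Total NVLink bandwidth in GB/s across all links."""
    return links * per_link


def pcie_gen4_x16_gbps(lanes: int = 16, gt_per_s: float = 16.0) -> float:
    """Bidirectional PCIe Gen4 bandwidth in GB/s after 128b/130b encoding."""
    per_direction = gt_per_s * lanes * (128 / 130) / 8  # Gb/s -> GB/s
    return 2 * per_direction


nv = nvlink_total_gbps()
pcie = pcie_gen4_x16_gbps()
print(f"NVLink: {nv:.0f} GB/s, PCIe Gen4 x16: {pcie:.1f} GB/s, "
      f"ratio: {nv / pcie:.1f}x")
```

Under these assumptions the ratio comes out at roughly 9.5x, which matches the "almost 10x" wording.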

With this new flagship NVIDIA chip now on the market, domain scientists relying on GPU-accelerated scientific simulation codes wonder whether it is time to upgrade their hardware. There is no DLSS 3.0. The full GA100 GPU has 128 SMs and up to 8192 CUDA cores, but the NVIDIA A100 enables only 108 SMs for now. The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges.
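The gap between the full die and the shipping product is easy to quantify. This Python sketch assumes 64 FP32 CUDA cores per SM (the per-SM figure given for the A100 elsewhere in this article) and simply multiplies it out for 128 and 108 SMs; the helper name `fp32_cores` is hypothetical.

```python
# Core-count arithmetic for the full GA100 die versus the A100 product.
# Assumes 64 FP32 CUDA cores per SM, as listed for the A100 configuration.
FP32_CORES_PER_SM = 64

FULL_GA100_SMS = 128    # full die
A100_ENABLED_SMS = 108  # enabled on the shipping A100


def fp32_cores(sms: int, cores_per_sm: int = FP32_CORES_PER_SM) -> int:
    """Total FP32 CUDA cores for a given number of SMs."""
    return sms * cores_per_sm


full_cores = fp32_cores(FULL_GA100_SMS)    # 8192
a100_cores = fp32_cores(A100_ENABLED_SMS)  # 6912
enabled_share = A100_ENABLED_SMS / FULL_GA100_SMS

print(f"Full GA100: {full_cores} cores; A100: {a100_cores} cores "
      f"({enabled_share:.1%} of SMs enabled)")
```

In other words, roughly 84% of the die's SMs are active on the A100 as shipped.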

NVIDIA strongly pushed the still relatively new DLSS 2.0 as its upscaling technology. Gaming was also strong, with revenue up 27% year over year. The latest in NVIDIA's line of DGX servers, the DGX A100, is a complete system that incorporates 8 A100 accelerators as well as 15 TB of storage, dual AMD Rome 7742 CPUs (64 cores each), and 1 TB of RAM. When paired with the latest generation of NVIDIA NVSwitch, all GPUs in the server can talk to each other at full NVLink speed for incredibly fast data transfers.
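To see what those per-component figures add up to, here is a small Python sketch that totals them for one DGX A100 node. The `DgxA100` class and its field names are hypothetical; the 40 GB of memory per GPU is an assumption (the A100 capacity quoted in this article), and the aggregate NVLink number is a naive product of 8 GPUs × 600 GB/s, not a measured bandwidth.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxA100:
    """Hypothetical summary of one DGX A100 node, using figures from this article."""
    gpus: int = 8
    gpu_memory_gb: int = 40         # assumption: the 40 GB A100 variant
    cpus: int = 2
    cores_per_cpu: int = 64         # AMD Rome 7742
    nvlink_gbps_per_gpu: int = 600  # third-generation NVLink, per GPU

    @property
    def total_gpu_memory_gb(self) -> int:
        return self.gpus * self.gpu_memory_gb

    @property
    def total_cpu_cores(self) -> int:
        return self.cpus * self.cores_per_cpu

    @property
    def aggregate_nvlink_gbps(self) -> int:
        # Naive sum over GPUs; assumes NVSwitch lets every GPU run
        # at full NVLink speed simultaneously.
        return self.gpus * self.nvlink_gbps_per_gpu


node = DgxA100()
print(node.total_gpu_memory_gb, node.total_cpu_cores, node.aggregate_nvlink_gbps)
```

A frozen dataclass keeps the inputs fixed and derives the totals on demand, so changing one assumption (for example, a different per-GPU memory size) updates every total consistently.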

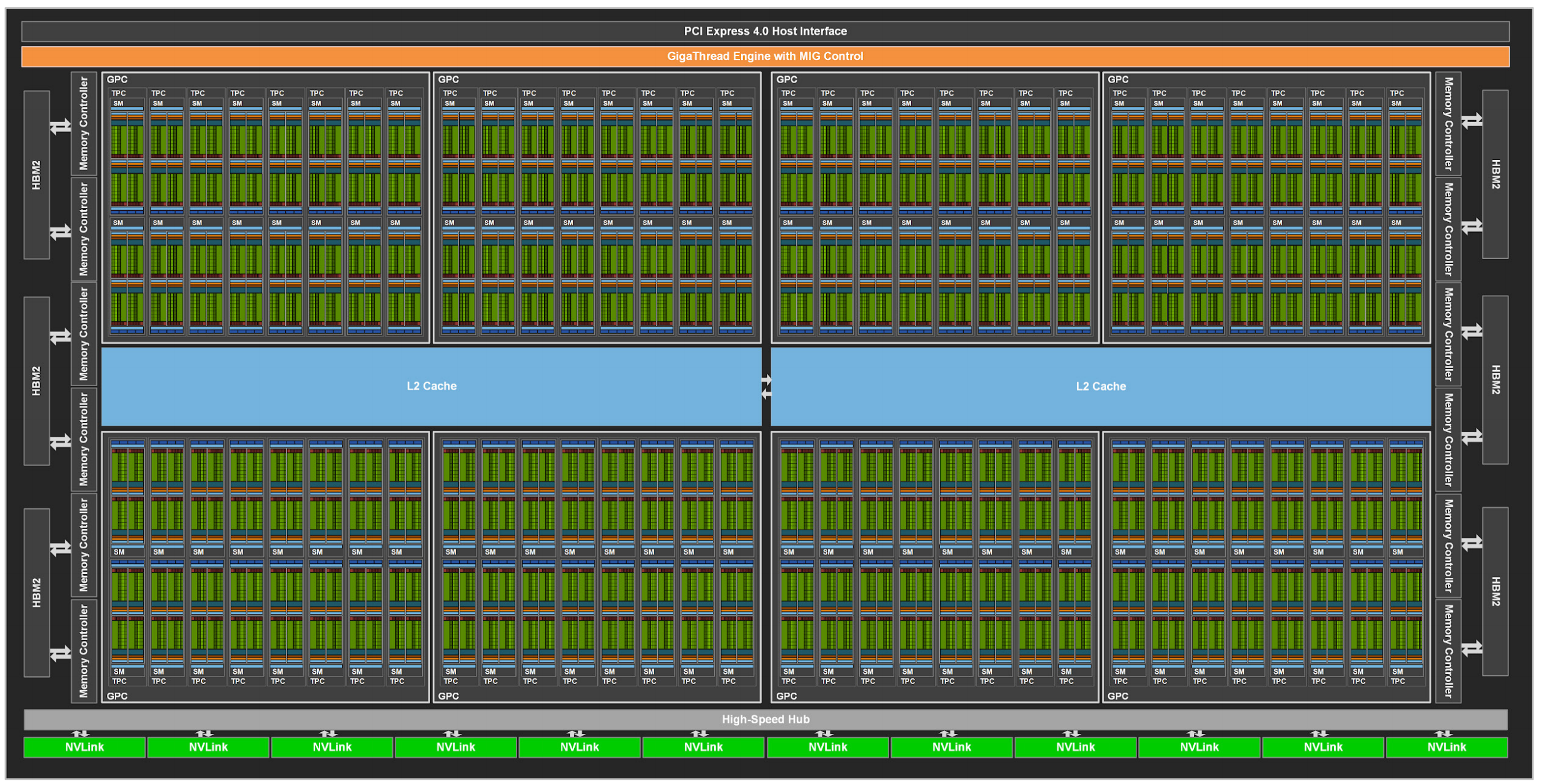

For the first time, scale-up and scale-out workloads. As the engine of the NVIDIA data center platform, A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances. NVIDIA's new GA100 SM has an uber Tensor Core plus FP64 cores, but no RT cores. Because the A100 does not contain RT cores, it tells us nothing about RTX 3000 ray tracing performance.
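One rough way to see why MIG tops out at seven instances is to model the GPU as a pool of compute and memory slices. The sketch below assumes a 40 GB A100 with 7 compute slices and 8 memory slices of 5 GB each, and uses MIG profile names in the `Ng.Mgb` style (`1g.5gb`, `3g.20gb`, and so on). The `fits` helper is hypothetical and only checks resource totals; it does not model NVIDIA's actual placement rules.

```python
# Simplified, hypothetical model of MIG slicing on a 40 GB A100.
# Assumes 7 compute slices and 8 memory slices of 5 GB each; real MIG
# placement rules are stricter than this total-only check.
COMPUTE_SLICES = 7
MEMORY_SLICES = 8

# profile name -> (compute slices, memory slices)
PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}


def fits(requested: list[str]) -> bool:
    """Return True if the requested instances fit within the slice totals."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits(["1g.5gb"] * 7))  # True: seven smallest instances
print(fits(["1g.5gb"] * 8))  # False: an eighth needs a compute slice that is not there
```

In this model the seven-instance ceiling comes from the compute slices: there are eight memory slices, but an eighth `1g.5gb` instance has no compute slice left.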

The NVIDIA A100 Tensor Core GPU implementation of the GA100 GPU includes the following units:

- 7 GPCs, 7 or 8 TPCs/GPC, 2 SMs/TPC, up to 16 SMs/GPC, 108 SMs
- 64 FP32 CUDA cores/SM, 6912 FP32 CUDA cores per GPU
- 4 third-generation Tensor Cores/SM, 432 third-generation Tensor Cores per GPU
- 5 HBM2 stacks, 10 512-bit memory controllers

NVIDIA's new A100 PCIe accelerator. It also has faster NVLink. The NVIDIA A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference. But it has only 8 GB more memory than the V100 (40 GB versus 32 GB). As expected, the A100 supports PCIe 4.0. Scaling applications across multiple GPUs requires extremely fast movement of data.
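The unit counts listed for the A100 are internally consistent, which a few lines of Python can confirm. The sketch takes the article's figures as inputs. Given 54 enabled TPCs and 7 GPCs of 7 or 8 TPCs each, exactly five GPCs must hold 8 TPCs, but the article does not say which ones, so the derived count is arithmetic only, not a documented per-GPC layout.

```python
# Consistency check on the A100 configuration listed in this article.
GPCS = 7
SMS_PER_TPC = 2
TENSOR_CORES_PER_SM = 4
ENABLED_SMS = 108
MEMORY_CONTROLLERS = 10
CONTROLLER_WIDTH_BITS = 512

enabled_tpcs = ENABLED_SMS // SMS_PER_TPC  # 54 TPCs

# Every GPC holds 7 or 8 TPCs: find how many need 8 to reach 54 in total.
gpcs_with_8_tpcs = next(
    n for n in range(GPCS + 1) if 8 * n + 7 * (GPCS - n) == enabled_tpcs
)

tensor_cores = ENABLED_SMS * TENSOR_CORES_PER_SM             # 432
bus_width_bits = MEMORY_CONTROLLERS * CONTROLLER_WIDTH_BITS  # 5120

print(enabled_tpcs, gpcs_with_8_tpcs, tensor_cores, bus_width_bits)
```

The last figure shows what "10 512-bit memory controllers" amounts to: a 5120-bit memory interface feeding the five HBM2 stacks.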

40 GB of HBM2e memory, PCIe 4.0 tech. This new GeForce RTX 3090 leak has it at 26% faster than the RTX 2080 Ti.