Nvidia A100 Memory


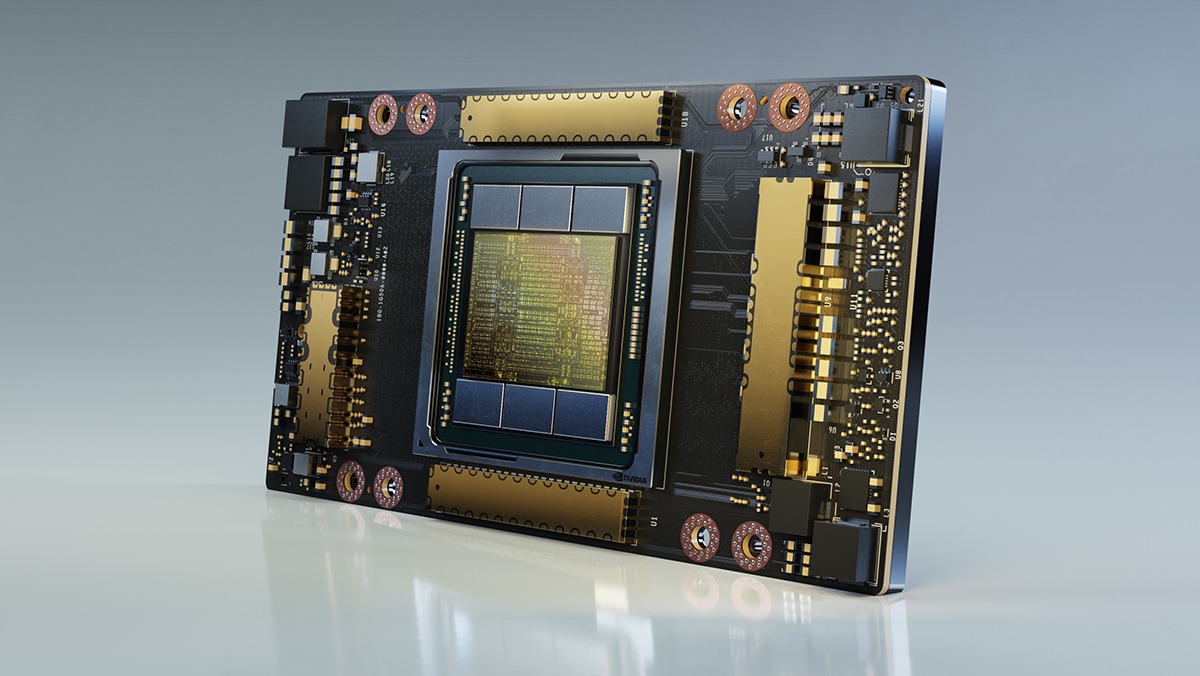

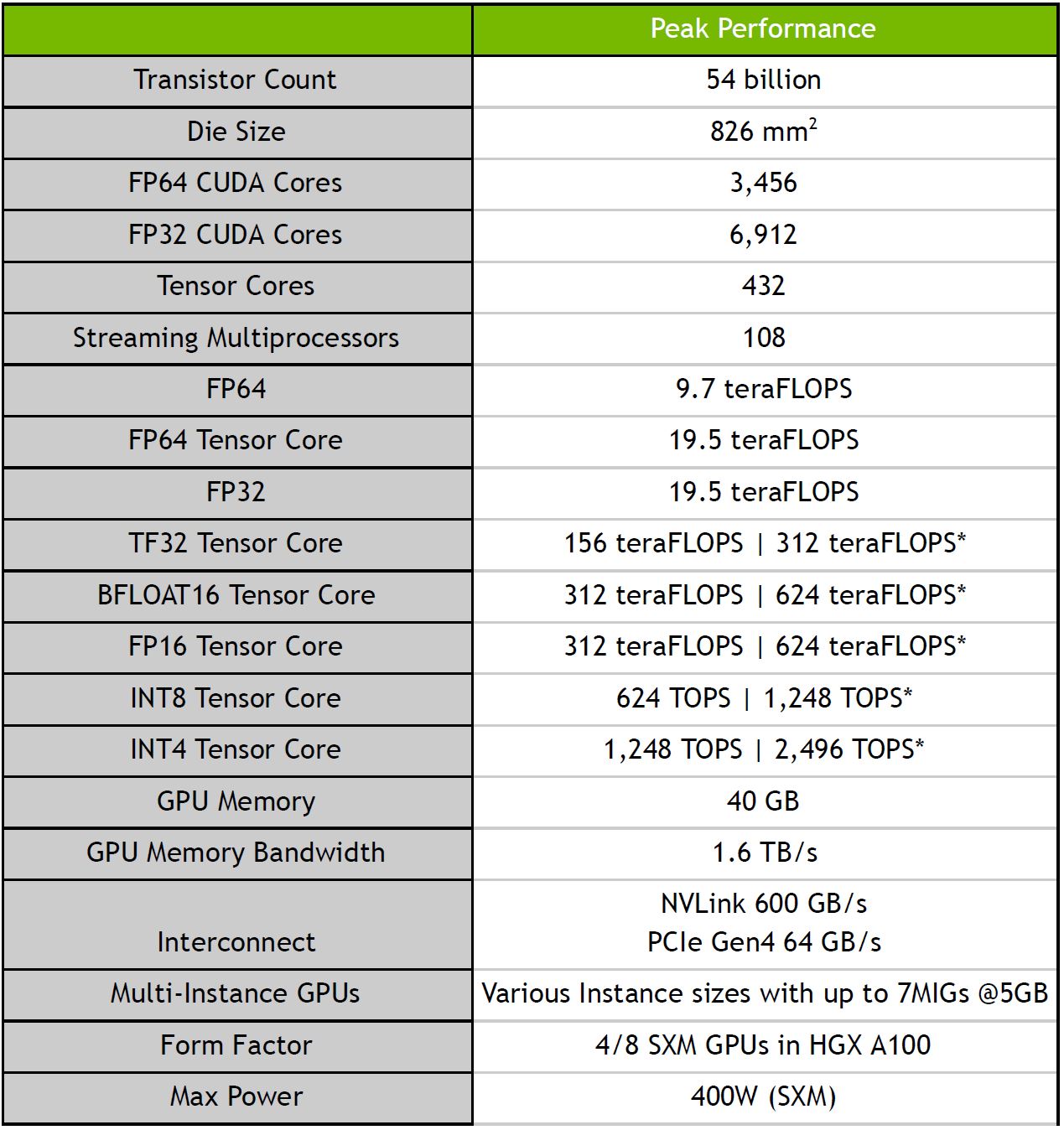

To feed its massive computational throughput, the NVIDIA A100 GPU has 40 GB of high-speed HBM2 memory with a class-leading 1,555 GB/s of memory bandwidth, a 73% increase over the Tesla V100. As the engine of the NVIDIA data center platform, the A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances to ... Far from your computer screens and deep inside the cloud is where the new computational war is being fought: right in the heart of the data centers.

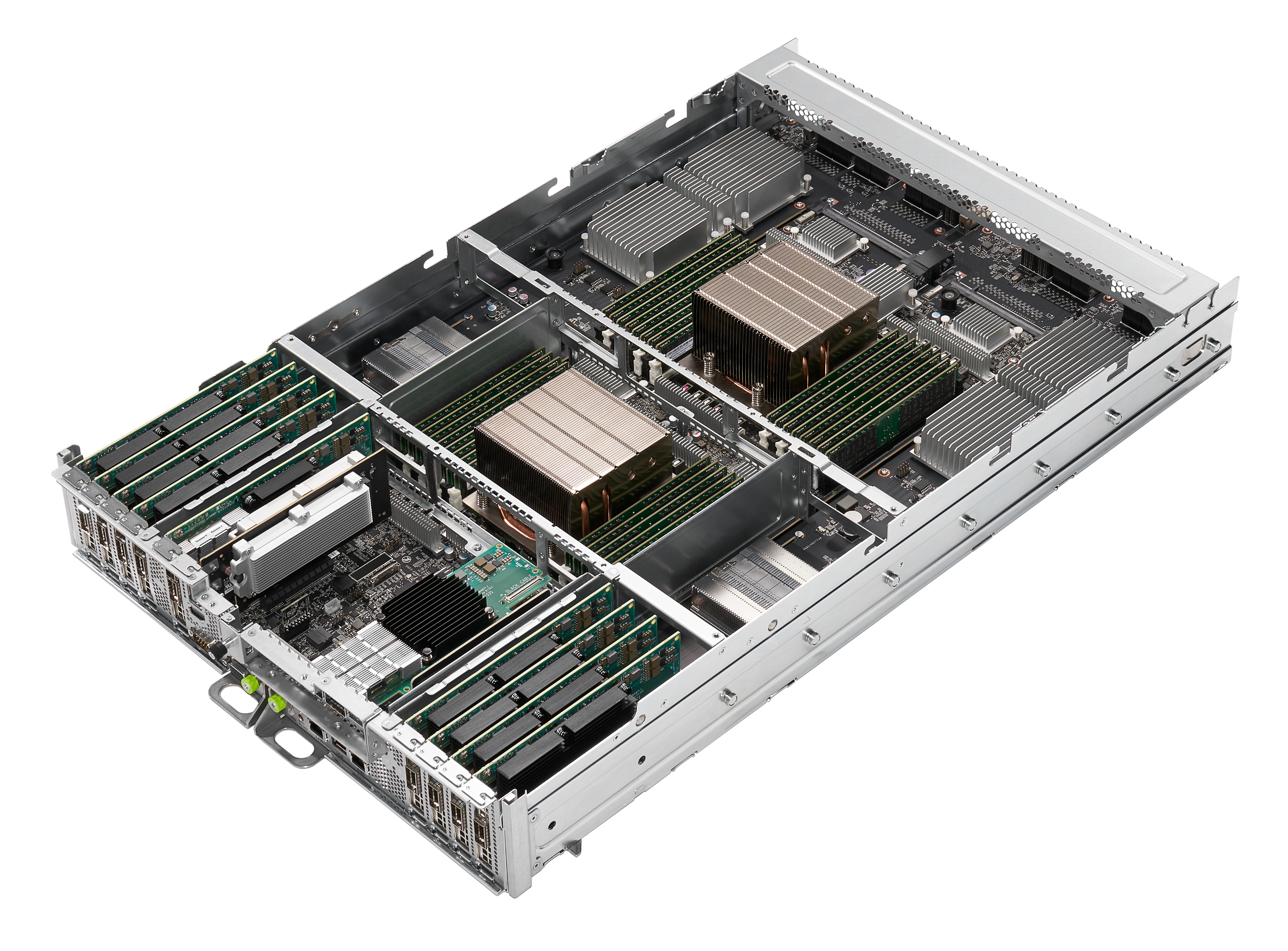

Every bank in the NVIDIA A100's HBM is equipped with spare rows. This feature, row remapping, is used to prevent known degraded memory locations from being used. NVIDIA DGX A100 features what NVIDIA calls the world's most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI ...

Row remapping is a hardware mechanism that improves the reliability of frame buffer memory on the NVIDIA A100. It replaces the page retirement scheme used in prior-generation GPUs. NVIDIA's new Ampere A100, a 54-billion-transistor GPU, is set to revolutionize data center design. The GPU operates at a base frequency of 765 MHz, which can be boosted up to 1410 MHz; the memory runs at 1215 MHz.
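The idea behind row remapping can be illustrated with a toy model. The sketch below is purely conceptual: the `Bank` class, its sizes, and its method names are hypothetical and do not reflect NVIDIA's hardware or driver implementation. It only shows how a degraded row can be redirected to one of a limited number of spare rows.

```python
from dataclasses import dataclass, field


@dataclass
class Bank:
    """Toy model of one memory bank with a few spare rows (sizes are made up)."""
    num_rows: int = 8
    num_spares: int = 2
    remap: dict = field(default_factory=dict)  # degraded row -> spare row index

    def remap_row(self, row: int) -> bool:
        """Point a degraded row at the next free spare; return False if none remain."""
        if not 0 <= row < self.num_rows:
            raise ValueError(f"row {row} out of range")
        if row in self.remap:
            return True  # already remapped, nothing to do
        if len(self.remap) >= self.num_spares:
            return False  # spares exhausted
        # Spare rows are numbered after the regular rows in this toy layout.
        self.remap[row] = self.num_rows + len(self.remap)
        return True

    def resolve(self, row: int) -> int:
        """Return the physical row actually used for a logical row."""
        return self.remap.get(row, row)


bank = Bank()
bank.remap_row(3)
print(bank.resolve(3))  # 8: redirected to the first spare row
print(bank.resolve(4))  # 4: healthy rows are untouched
```

In this model the address seen by software stays the same after a remap, and only the physical row behind it changes.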

In addition, the A100 GPU has significantly more on-chip memory, including a 40 MB Level 2 (L2) cache, nearly 7x larger than the V100's, to maximize compute performance. For the first time, scale-up and scale-out workloads ... NVIDIA bills the A100 GPU as a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference.
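As a quick check on the "nearly 7x" figure, the ratio can be worked out directly. The V100's 6 MB L2 cache size is an assumption taken from NVIDIA's published V100 specifications; it is not stated in this article.

```python
A100_L2_MB = 40  # from the text above
V100_L2_MB = 6   # assumption: V100's published L2 size, not stated in this article

ratio = A100_L2_MB / V100_L2_MB
print(f"A100 L2 is {ratio:.2f}x the size of V100's")  # 6.67x
```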

NVIDIA has paired 40 GB of HBM2e memory with the A100 PCIe, connected using a 5120-bit memory interface. The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. This article was first published on 15 May 2020. If the new Ampere-architecture A100 Tensor Core data center GPU is the component responsible for re-architecting the data center, NVIDIA's new DGX A100 AI supercomputer is the ideal enabler to revitalize data centers.
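The quoted memory bandwidth can be roughly reproduced from the 5120-bit interface and the 1215 MHz memory clock mentioned earlier. This is a back-of-the-envelope sketch: it assumes two transfers per clock (double data rate), and the 900 GB/s figure for the Tesla V100 is an assumption from NVIDIA's published V100 specifications rather than from this article. The function name is illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits, clock_hz, transfers_per_clock=2):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


# Figures from the article: 5120-bit interface, 1215 MHz memory clock.
a100 = peak_bandwidth_gb_s(5120, 1215e6)
print(f"{a100:.1f} GB/s")  # 1555.2 GB/s

# Assumption: Tesla V100 peak bandwidth of 900 GB/s (not stated in this article).
V100_GB_S = 900
increase_pct = (a100 / V100_GB_S - 1) * 100
print(f"{increase_pct:.0f}% increase over V100")  # 73% increase over V100
```

The result lines up with the 1,555 GB/s and 73% figures quoted for the A100.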

The NVIDIA A100 PCIe is a dual-slot card that draws power through an auxiliary 8-pin CPU (EPS) power connector; its power draw is rated ... NVIDIA announced its new A100 PCIe accelerator with 40 GB of HBM2e memory.
