Nvidia A100 Instance

As newer models emerge, AWS customers are continually looking for higher-performance instances to support faster model training.

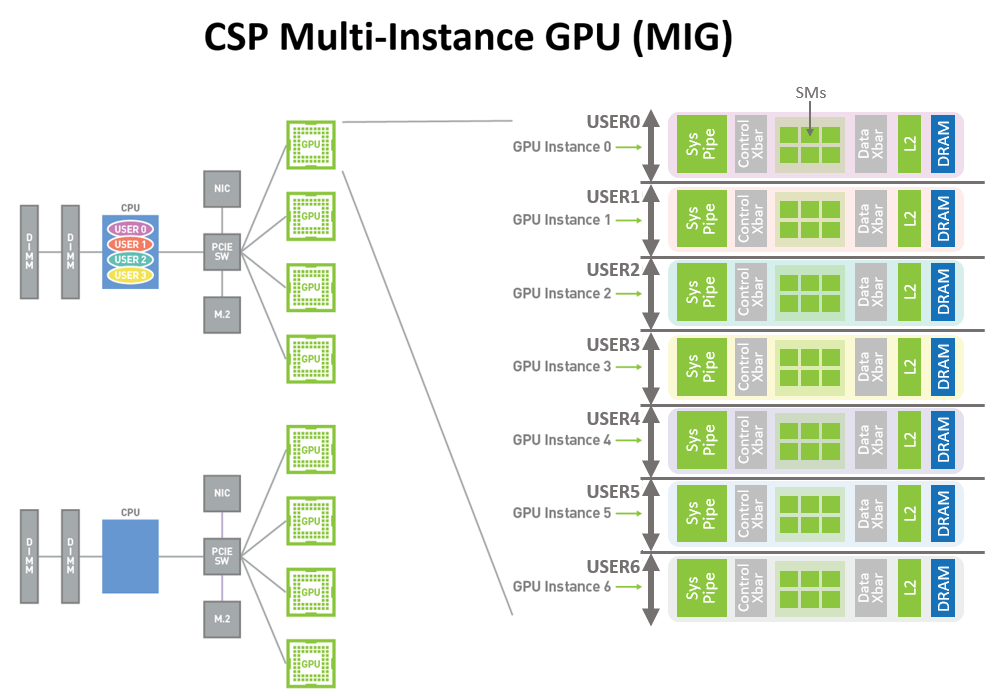

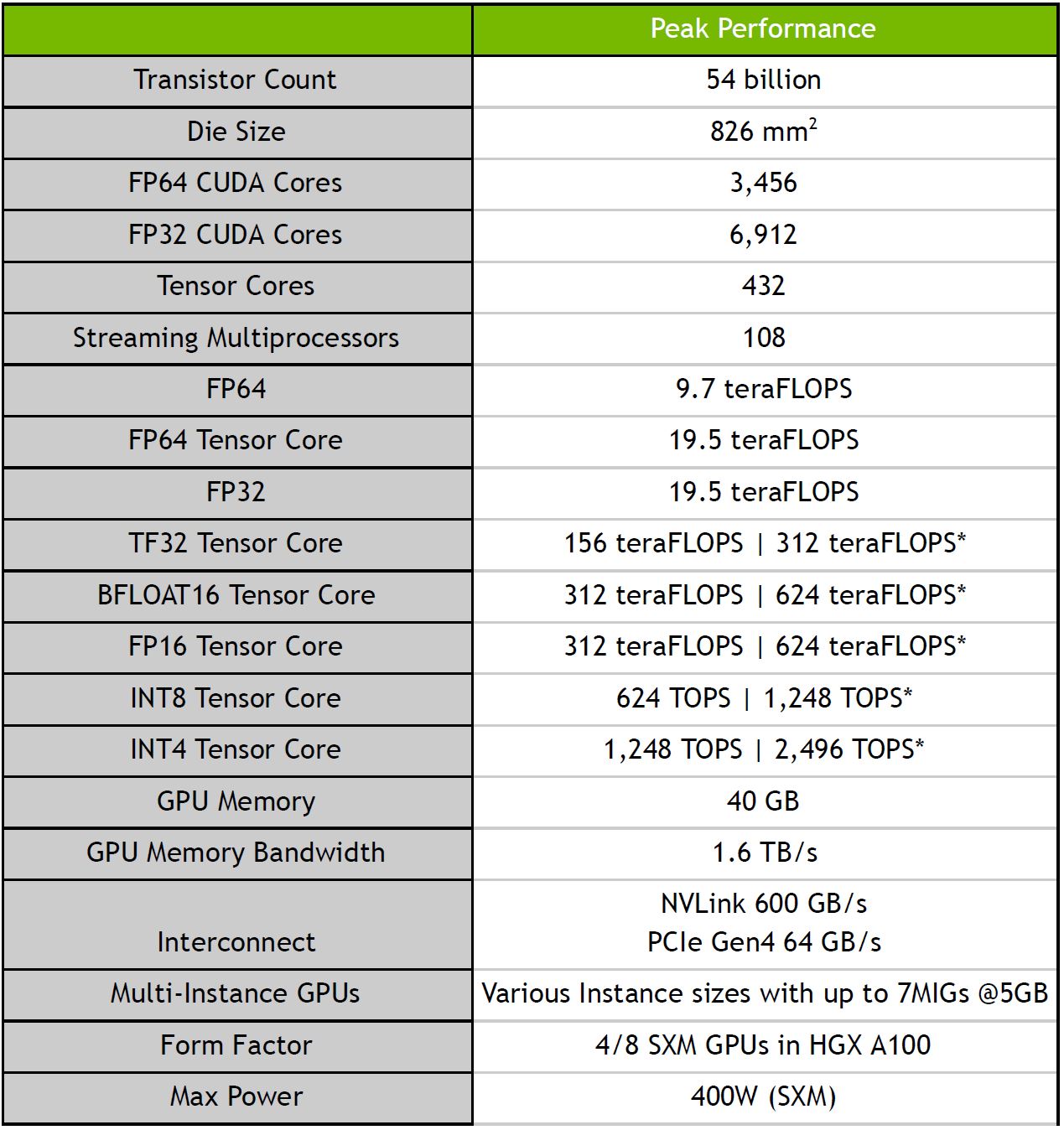

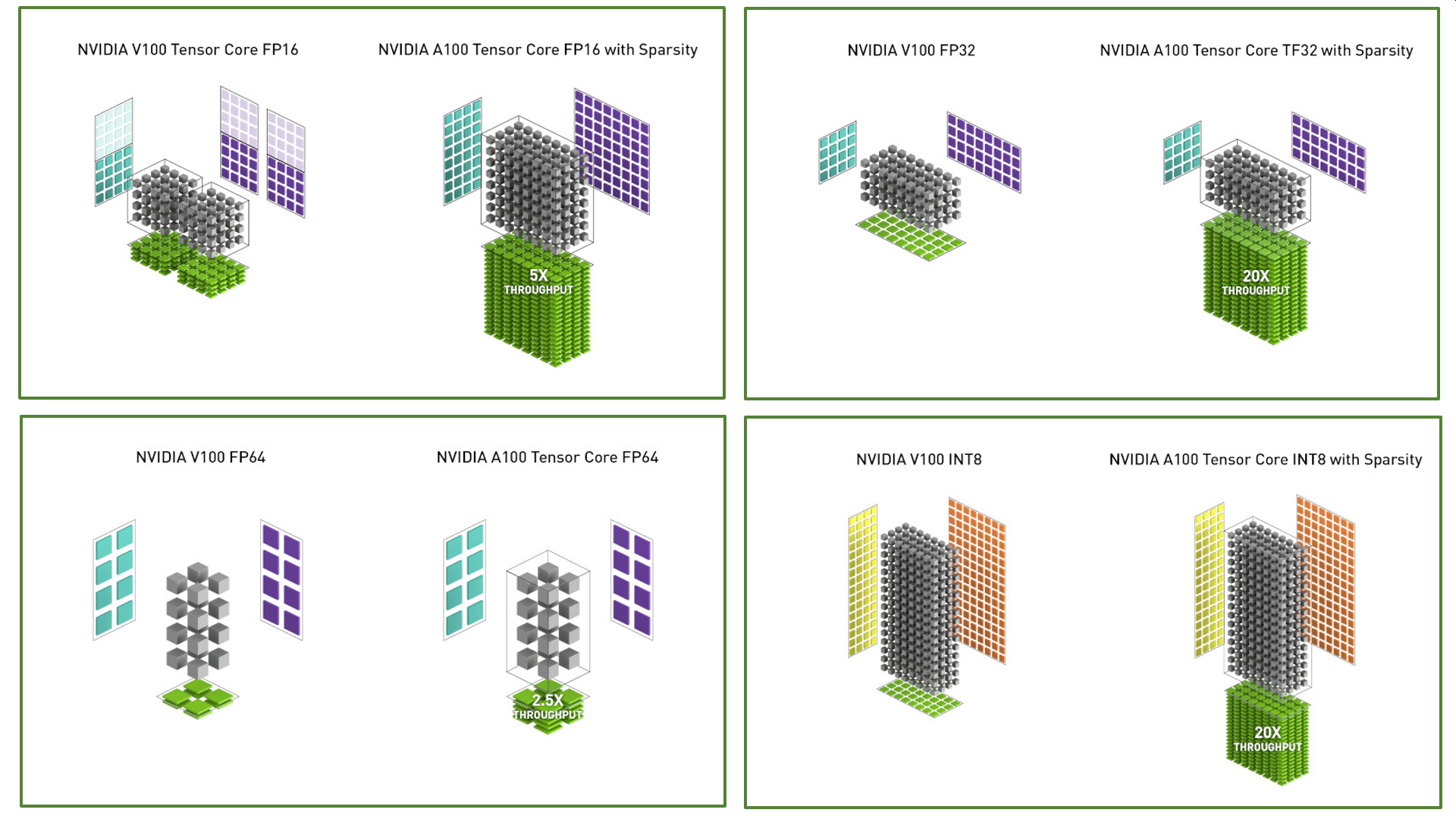

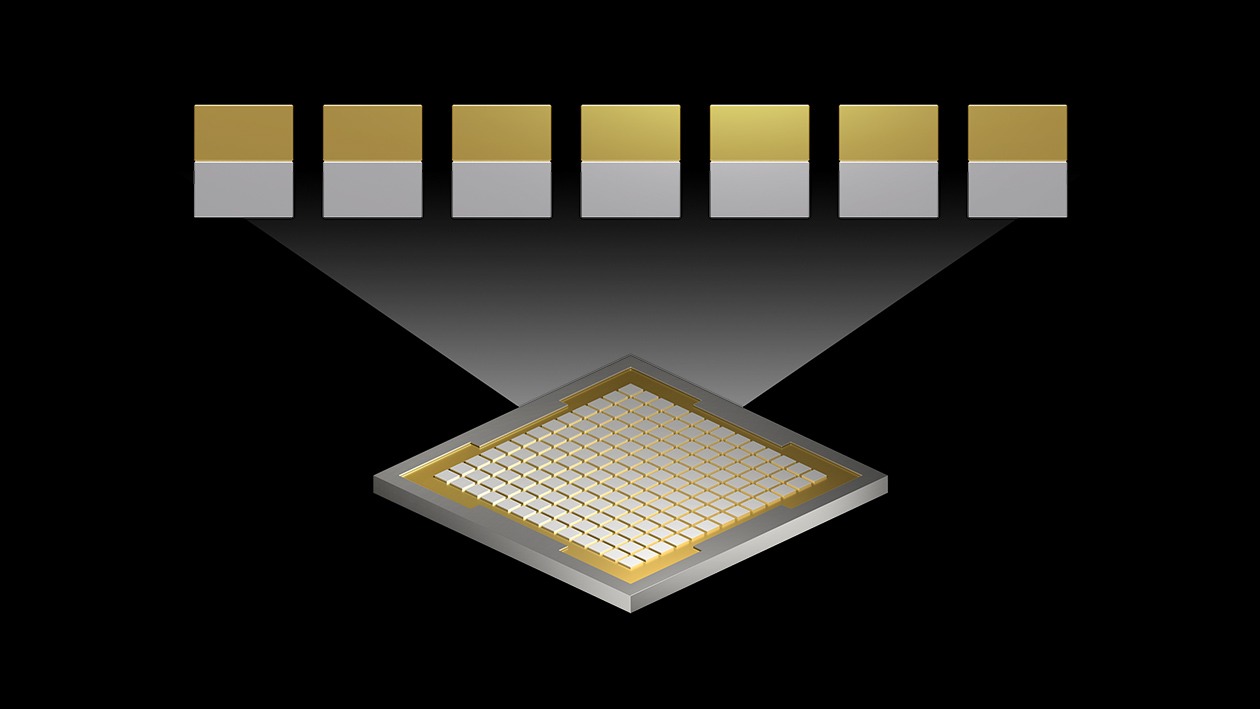

According to NVIDIA, the A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. Its new Multi-Instance GPU (MIG) feature allows a single A100 GPU to be securely partitioned into as many as seven separate GPU instances for CUDA applications, giving multiple users separate GPU resources for optimal GPU utilization. These instances run simultaneously, each with its own memory, cache, and streaming multiprocessors (SMs).
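To make the partitioning concrete, here is a minimal Python sketch that models an A100 40GB as a budget of seven compute slices and eight 5 GB memory slices, and checks whether a requested mix of MIG profiles fits. The profile table follows the A100 40GB profiles NVIDIA documents (`1g.5gb` through `7g.40gb`); the `fits` helper is hypothetical and deliberately ignores NVIDIA's placement rules, so a mix it accepts could still be rejected by the driver.

```python
# A100 40GB MIG profiles: name -> (compute slices, memory slices).
# The GPU exposes 7 compute slices and 8 memory slices of 5 GB each.
# Hypothetical helper for illustration; real placement rules are stricter.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8
MEMORY_SLICE_GB = 5


def memory_gb(profile: str) -> int:
    """Memory (in GB) dedicated to one instance of the given profile."""
    return A100_40GB_PROFILES[profile][1] * MEMORY_SLICE_GB


def fits(profiles: list[str]) -> bool:
    """Return True if the profiles fit within the GPU's slice budget.

    Only the total compute and memory slices are checked, not where
    each instance would be placed on the GPU.
    """
    compute = sum(A100_40GB_PROFILES[p][0] for p in profiles)
    memory = sum(A100_40GB_PROFILES[p][1] for p in profiles)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits(["1g.5gb"] * 7))          # seven isolated instances: True
print(fits(["3g.20gb", "4g.20gb"]))  # uses the whole GPU: True
print(fits(["4g.20gb", "4g.20gb"]))  # needs 8 compute slices: False
```

The slice budget is what caps MIG at seven instances: even the smallest profile consumes one of the seven compute slices.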

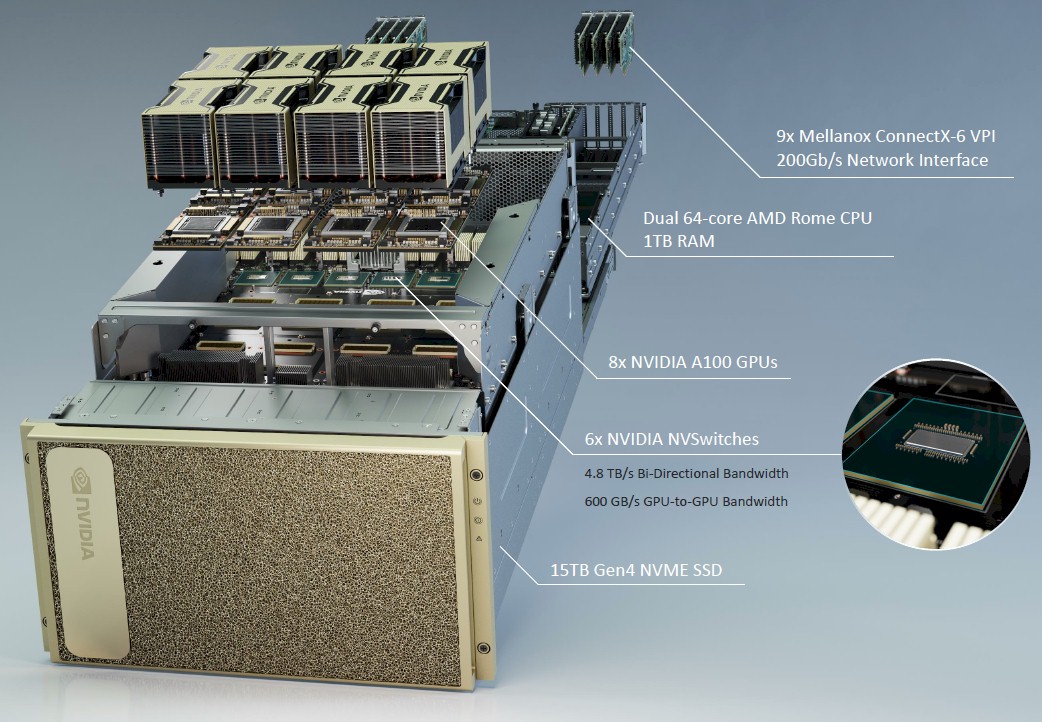

These GPU instances will enable customers in industries such as automotive and aerospace to run complex, data-intensive, high-performance applications like modeling and simulation more cost-efficiently. Before the first A100-powered cloud instance was announced, NVIDIA said in June that more than 50 servers from OEMs such as Dell Technologies, Cisco Systems, and Hewlett Packard Enterprise would support the A100. NVIDIA A100 Tensor Core GPUs are also coming to Amazon EC2 instances. To power AI workloads of all sizes, Microsoft Azure's new ND A100 v4 VM series can scale from a single partition of one A100 to an instance of thousands of A100s networked with NVIDIA Mellanox interconnects.

Oracle Cloud Infrastructure has become the first to offer general availability of bare-metal instances featuring the NVIDIA A100 Tensor Core GPU. Lastly, the Multi-Instance GPU (MIG) feature allows each GPU to be partitioned into as many as seven GPU instances, fully isolated from both a performance and a fault perspective. "Azure's A100 instances enable AI at incredible scale in the cloud," said partner NVIDIA.

This enables the A100 GPU to deliver guaranteed quality of service (QoS) at up to 7x higher utilization compared with prior GPUs. Altogether, each A100 offers substantially more performance, increased memory, very flexible precision support, and stronger process isolation for running multiple workloads on a single GPU. The a2-megagpu-16g instance uses 16 NVIDIA A100 GPUs interconnected via NVSwitch. With the A100 and its software in place, users can see and schedule jobs on their new GPU instances as if they were physical GPUs.
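Because MIG instances appear to software much like separate GPUs, a job can be pinned to one by exporting that instance's UUID in `CUDA_VISIBLE_DEVICES` before CUDA is initialized. The sketch below parses the MIG lines printed by `nvidia-smi -L` and picks the first instance. The sample output is illustrative with placeholder UUIDs, and the line format is an assumption based on recent drivers (older drivers used a different MIG identifier form), so check it against your own system.

```python
import os
import re

# Matches MIG lines such as:
#   MIG 3g.20gb     Device  0: (UUID: MIG-...)
# Format assumed from recent drivers; adjust the pattern for your driver version.
MIG_LINE = re.compile(r"MIG\s+(\S+)\s+Device\s+(\d+):\s+\(UUID:\s+(MIG-[^)\s]+)\)")


def parse_mig_devices(smi_output: str) -> list[tuple[str, int, str]]:
    """Return (profile, device index, UUID) for each MIG line in `nvidia-smi -L` output."""
    return [
        (m.group(1), int(m.group(2)), m.group(3))
        for m in MIG_LINE.finditer(smi_output)
    ]


# Illustrative output only; these UUIDs are placeholders, not real devices.
SAMPLE = """GPU 0: NVIDIA A100-SXM4-40GB (UUID: GPU-00000000-0000-0000-0000-000000000000)
  MIG 3g.20gb     Device  0: (UUID: MIG-11111111-1111-1111-1111-111111111111)
  MIG 3g.20gb     Device  1: (UUID: MIG-22222222-2222-2222-2222-222222222222)
"""

devices = parse_mig_devices(SAMPLE)
# Pin this process and any child processes it launches to the first MIG instance.
# This must happen before any library in this process initializes CUDA.
os.environ["CUDA_VISIBLE_DEVICES"] = devices[0][2]
print(devices[0])
```

On a real machine, `SAMPLE` would be replaced by the captured output of `nvidia-smi -L`. A single CUDA process generally works with one MIG instance at a time, which is why the sketch selects just one UUID.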

As the engine of the NVIDIA data center platform, the A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances. In its new alpha instances, Google Compute Engine lets customers access up to 16 A100 GPUs. To get the big picture on the role of MIG in A100 GPUs, watch the keynote with NVIDIA founder and CEO Jensen Huang.