Nvidia A100 HBM2e

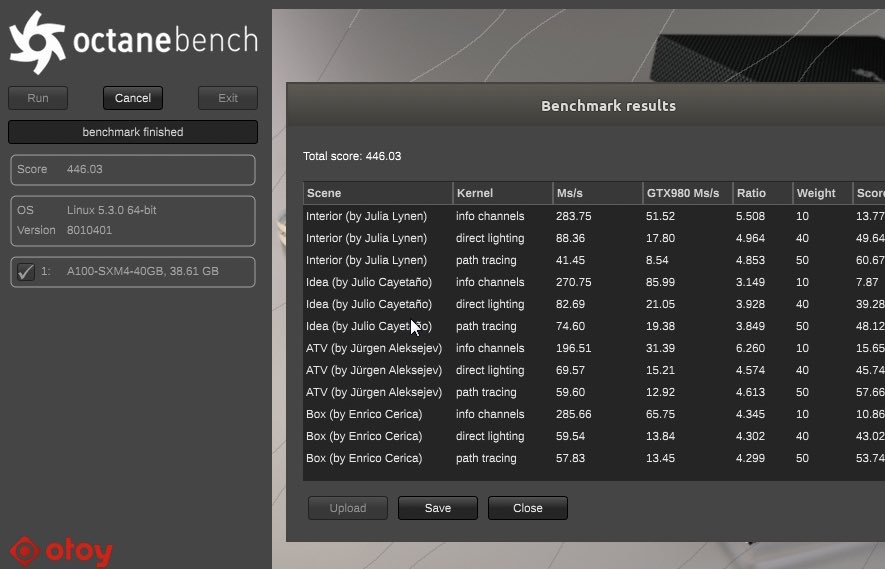

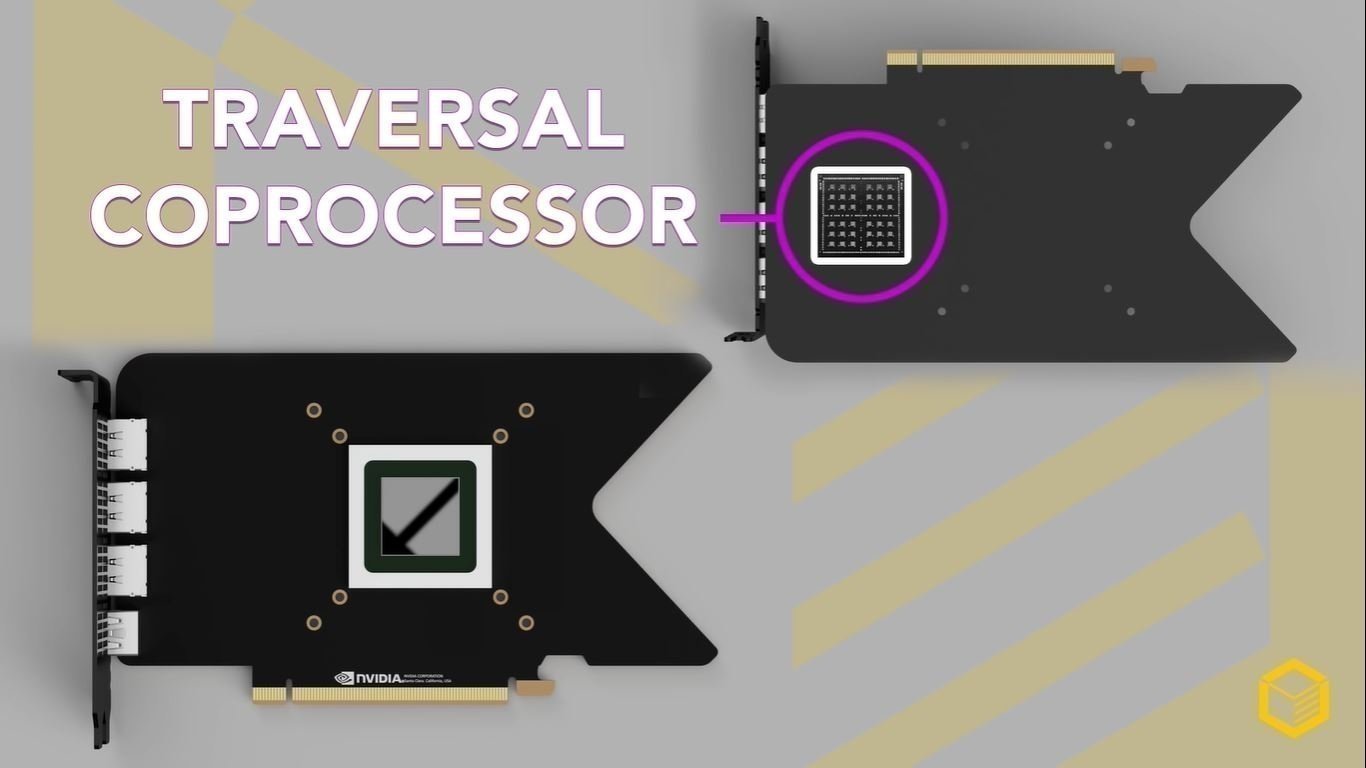

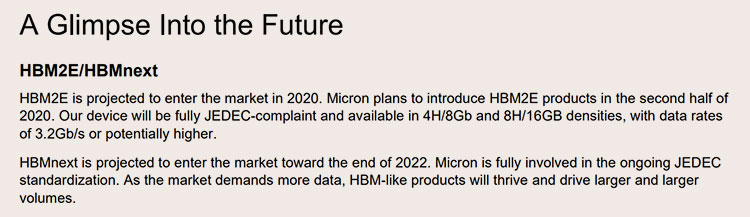

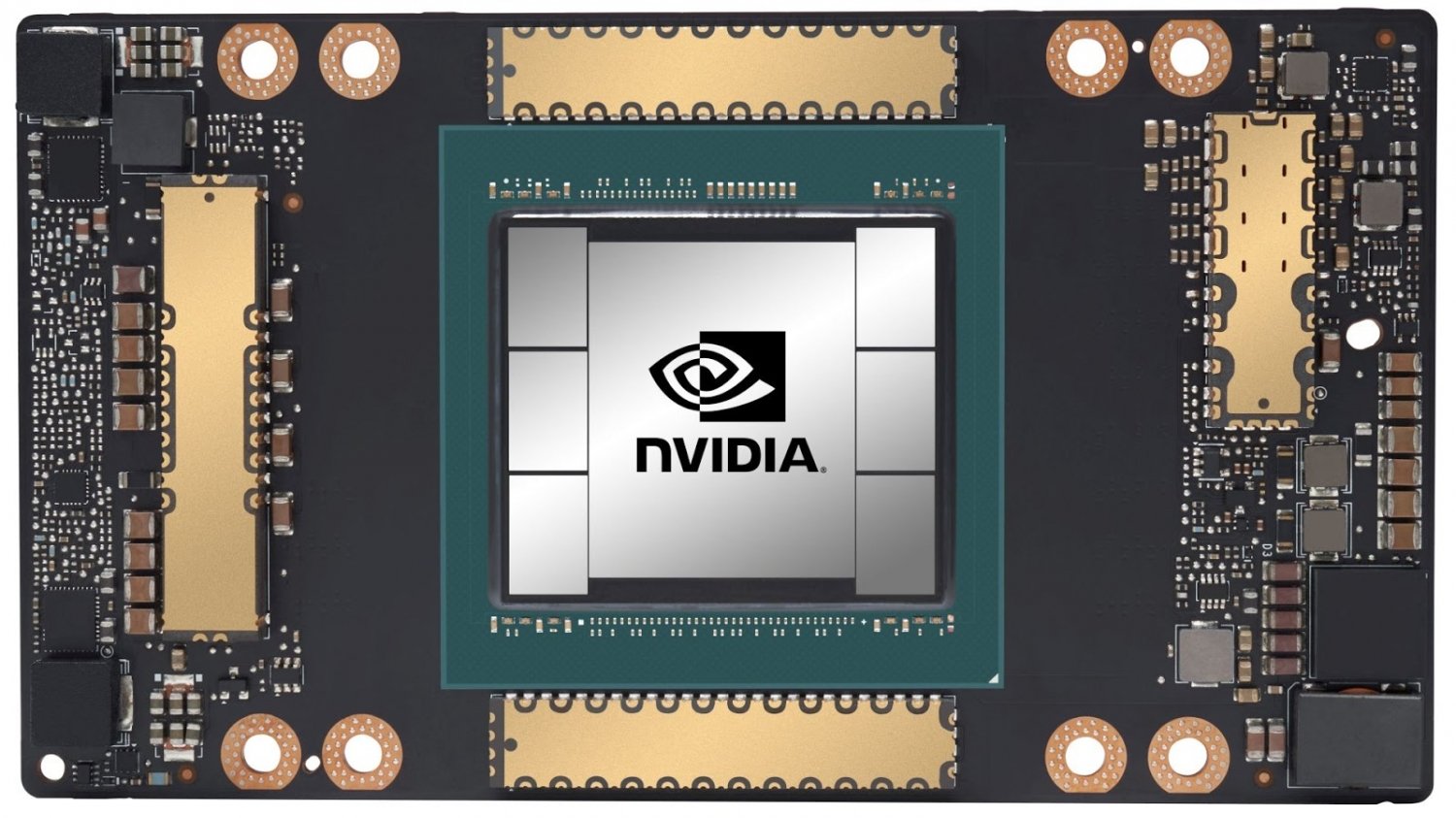

The Ampere A100 is a beast of a card, with 6,912 CUDA cores, 432 Tensor Cores, 40 GB of HBM2e memory, and memory bandwidth of up to 1,555 GB/s, so it's no surprise that it dwarfs consumer cards.

Nvidia has announced its new A100 PCIe accelerator with 40 GB of HBM2e memory. Being a dual-slot card, the Nvidia A100 PCIe draws power from an 8-pin EPS power connector. The GPU operates at a base frequency of 765 MHz, which can be boosted up to 1,410 MHz, and the memory runs at 1,215 MHz.

The GPU operates at a frequency of up to 1,410 MHz, with memory running at 1,215 MHz. Onboard is 40 GB of HBM2e memory with a maximum memory bandwidth of 1.6 TB/s. Nvidia has also built eight of these A100s into a cloud accelerator system it calls the DGX A100, which offers 5 petaflops.
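As a rough back-of-envelope check on the DGX A100 figure, the sketch below splits the quoted 5 petaflops evenly across the eight GPUs. The function name is hypothetical, and the even split assumes the system total is simply the sum of the per-GPU peaks, which the text does not state explicitly.

```python
def per_gpu_tflops(system_petaflops: float, gpu_count: int) -> float:
    """Evenly divide a system-level peak (in petaflops) across its GPUs.

    Assumption: the system figure is a plain sum of per-GPU peak throughput.
    Returns the per-GPU share in teraflops (1 petaflop = 1,000 teraflops).
    """
    return system_petaflops * 1000 / gpu_count


# Figures quoted in the text: 5 petaflops across eight A100s.
dgx_a100_share = per_gpu_tflops(5, 8)
print(f"{dgx_a100_share:.0f} TFLOPS per GPU")  # prints "625 TFLOPS per GPU"
```

Under that assumption, each A100 contributes roughly 625 teraflops to the system total.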

Nvidia describes the A100 GPU as a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference. Nvidia has paired 40 GB of HBM2e memory with the A100 PCIe, connected using a 5,120-bit memory interface. For the first time, scale-up and scale-out workloads. Its power draw is rated at 400 W maximum.
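The quoted bandwidth of roughly 1,555 GB/s follows from the 5,120-bit interface and the 1,215 MHz memory clock. The minimal sketch below shows the arithmetic. The function name is hypothetical, and it assumes the memory transfers data twice per clock cycle (double data rate), as HBM2-class memory does.

```python
def peak_memory_bandwidth_gbs(
    bus_width_bits: int,
    memory_clock_mhz: float,
    transfers_per_clock: int = 2,  # assumption: double data rate memory
) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Figures quoted in the text: 5,120-bit interface, 1,215 MHz memory clock.
a100_bandwidth = peak_memory_bandwidth_gbs(5120, 1215)
print(f"{a100_bandwidth:.1f} GB/s")  # prints "1555.2 GB/s"
```

The result, about 1,555 GB/s, is the same number that is often rounded to 1.6 TB/s.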