NVIDIA A100 HBM2

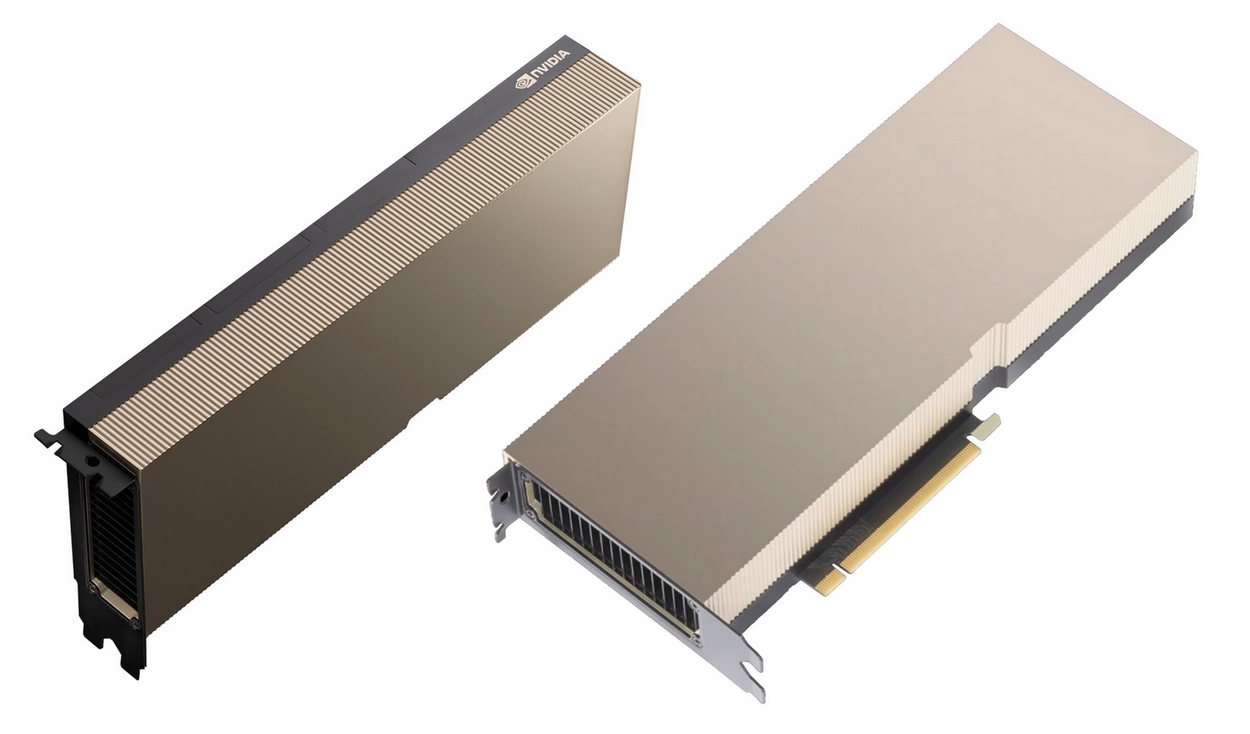

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and HPC to tackle the world's toughest computing challenges.

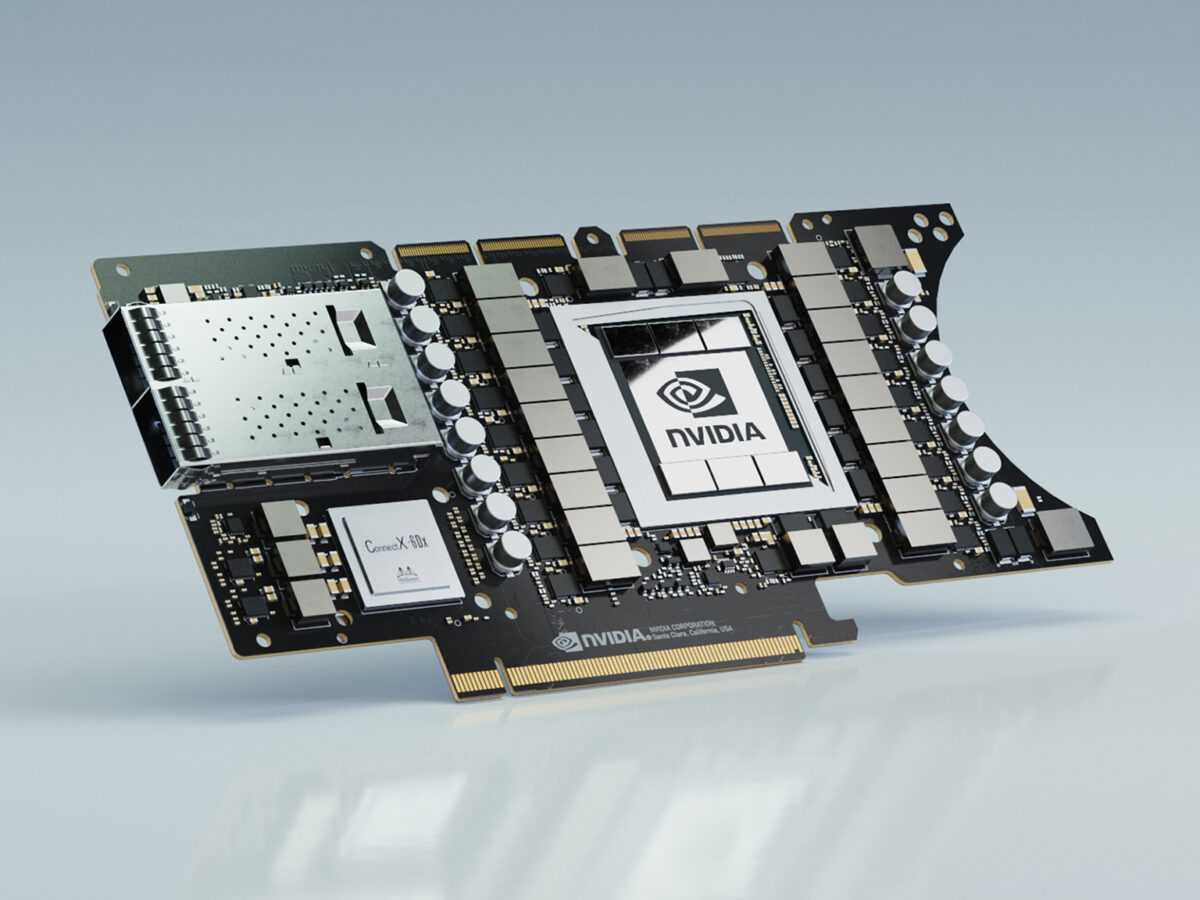

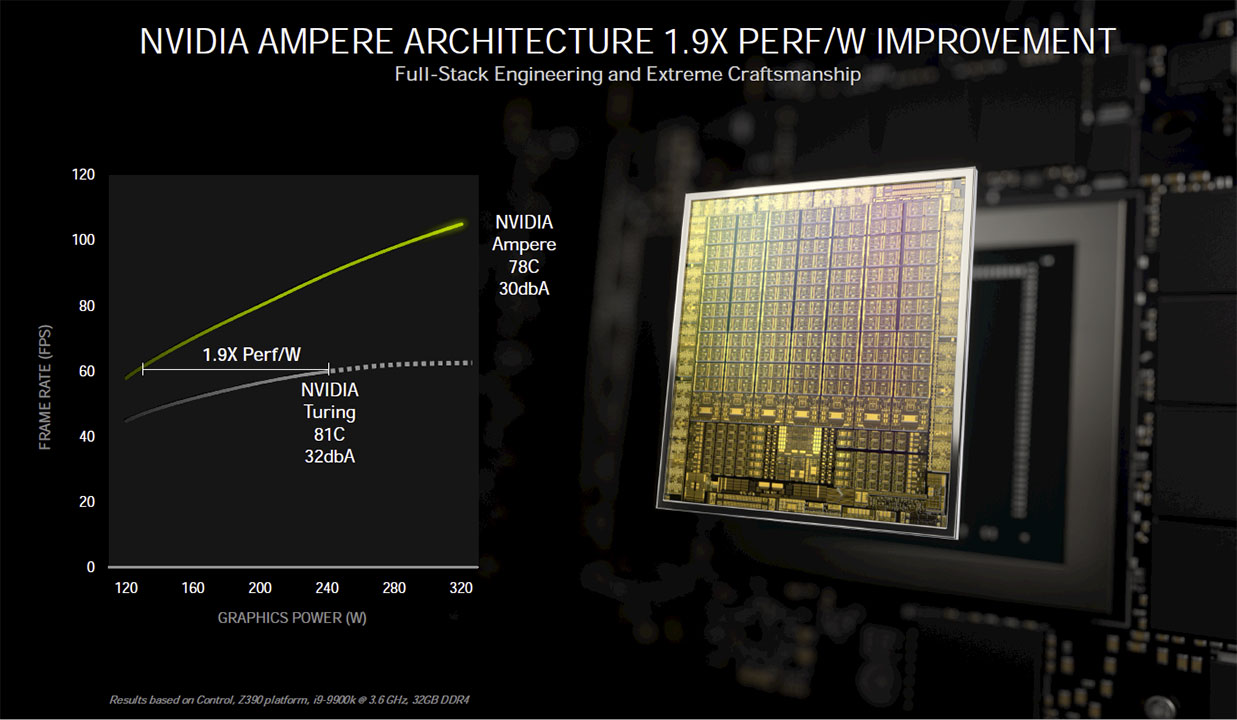

Underscoring the advantage that an AMD EPYC and NVIDIA A100 pairing has right now since AMD is the only x86 server. NVIDIA A100 GPU on new SXM4 module. NVIDIA A100 Ampere GPU launched in PCIe form factor: 20 times faster than Volta, at 250 W, with 40 GB of HBM2 memory. For the first time scale up and scale out workloads.

This article was first published on 15 May 2020. III. NVIDIA A100 Tensor Core GPU Architecture. Perhaps NVIDIA is planning a 48 GB version in the future, or there is something else going on. A100 HBM2 DRAM subsystem; ECC memory resiliency; A100 L2 cache.

A few folks reached out asking why it looks like the A100 package has six Samsung HBM2 stacks but the total is not divisible by 6. This GPU is equipped with 6,912 CUDA cores and 40 GB of HBM2 memory. This is also the first card featuring a PCIe 4.0 interface or SXM4. The A100 offers up to 624 TF of FP16 arithmetic throughput for deep learning (DL) training and up to 1,248 TOPS of INT8 arithmetic throughput for DL inference.

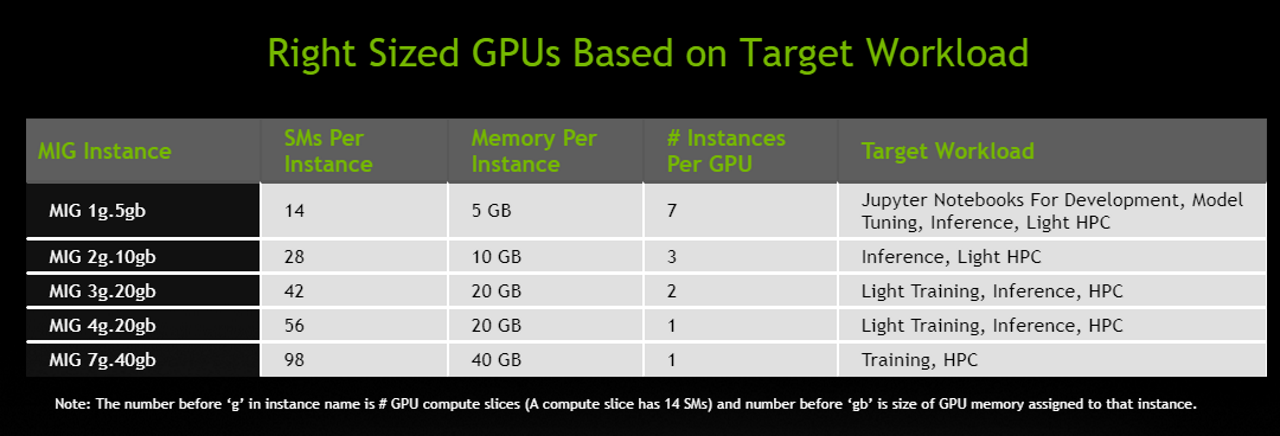

If the new Ampere architecture-based A100 Tensor Core data center GPU is the component responsible for re-architecting the data center, NVIDIA's new DGX A100 AI supercomputer is the ideal enabler to revitalize data centers. A100 HBM2 and L2 cache memory architectures. As the engine of the NVIDIA data center platform, A100 can efficiently scale up to thousands of GPUs or, using new Multi-Instance GPU (MIG) technology, can be partitioned into seven isolated GPU instances to accelerate. The NVIDIA A100 is the largest 7 nm chip ever made, with 54 billion transistors, 40 GB of HBM2 GPU memory, and 1.5 TB/s of GPU memory bandwidth.
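The bandwidth figure can be related to the memory interface width with a little arithmetic. The following is a rough sketch, not an official calculation: it takes the 1.5 TB/s figure quoted above, assumes the 5,120-bit interface width reported in NVIDIA's specs table, and assumes 1 TB means 10^12 bytes. The function name `per_pin_gbit_s` is made up for illustration.

```python
# Figures taken from the article; the unit convention is an assumption.
BANDWIDTH_TB_S = 1.5     # quoted GPU memory bandwidth, assuming 1 TB = 1e12 bytes
INTERFACE_BITS = 5120    # HBM2 interface width from NVIDIA's specs table


def per_pin_gbit_s(bandwidth_tb_s: float, interface_bits: int) -> float:
    """Data rate per interface pin, in Gbit/s, implied by the total bandwidth."""
    bits_per_second = bandwidth_tb_s * 1e12 * 8   # bytes/s -> bits/s
    return bits_per_second / interface_bits / 1e9  # per pin, in Gbit/s


rate = per_pin_gbit_s(BANDWIDTH_TB_S, INTERFACE_BITS)
print(f"implied data rate: {rate:.2f} Gbit/s per pin")
```

Because 1.5 TB/s is itself a rounded figure, the result is only an approximation of the actual signalling rate.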

NVIDIA A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator, from data analytics to training to inference. As noted above, while the images show six HBM2 stacks, NVIDIA's specs table says the HBM2 has a 5,120-bit interface, which is five 1,024-bit interfaces. Unified AI acceleration for BERT-Large training. NVIDIA's DGX A100 supercomputer is the ultimate instrument to advance AI and fight COVID-19.
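The stack arithmetic can be checked in a few lines. This is a minimal sketch using only the figures quoted in this article. It assumes every active stack has the same capacity, which the article does not state, and the helper name `active_stacks` is hypothetical.

```python
# Figures quoted in the article.
INTERFACE_BITS = 5120    # total HBM2 interface width from the specs table
BITS_PER_STACK = 1024    # interface width of one HBM2 stack
TOTAL_MEMORY_GB = 40     # advertised HBM2 capacity
VISIBLE_STACKS = 6       # stacks visible on the package in the images


def active_stacks(interface_bits: int, bits_per_stack: int) -> int:
    """Number of stacks implied by the total interface width."""
    stacks, remainder = divmod(interface_bits, bits_per_stack)
    if remainder:
        raise ValueError("interface width is not a whole number of stacks")
    return stacks


stacks = active_stacks(INTERFACE_BITS, BITS_PER_STACK)
# Assumption: capacity is split evenly across the active stacks.
gb_per_stack = TOTAL_MEMORY_GB / stacks

print(f"active stacks: {stacks}")
print(f"capacity per active stack: {gb_per_stack:g} GB")
print(f"40 GB over 6 stacks: {TOTAL_MEMORY_GB / VISIBLE_STACKS:.2f} GB each")
print(f"capacity if all 6 stacks were active: {VISIBLE_STACKS * gb_per_stack:g} GB")
```

Under that equal-capacity assumption, five active stacks account for the 40 GB total, and a sixth would bring the package to the 48 GB the article speculates about.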