NVIDIA A100 GPU Server

The DGX A100 is the first generation of the DGX series to use AMD CPUs.

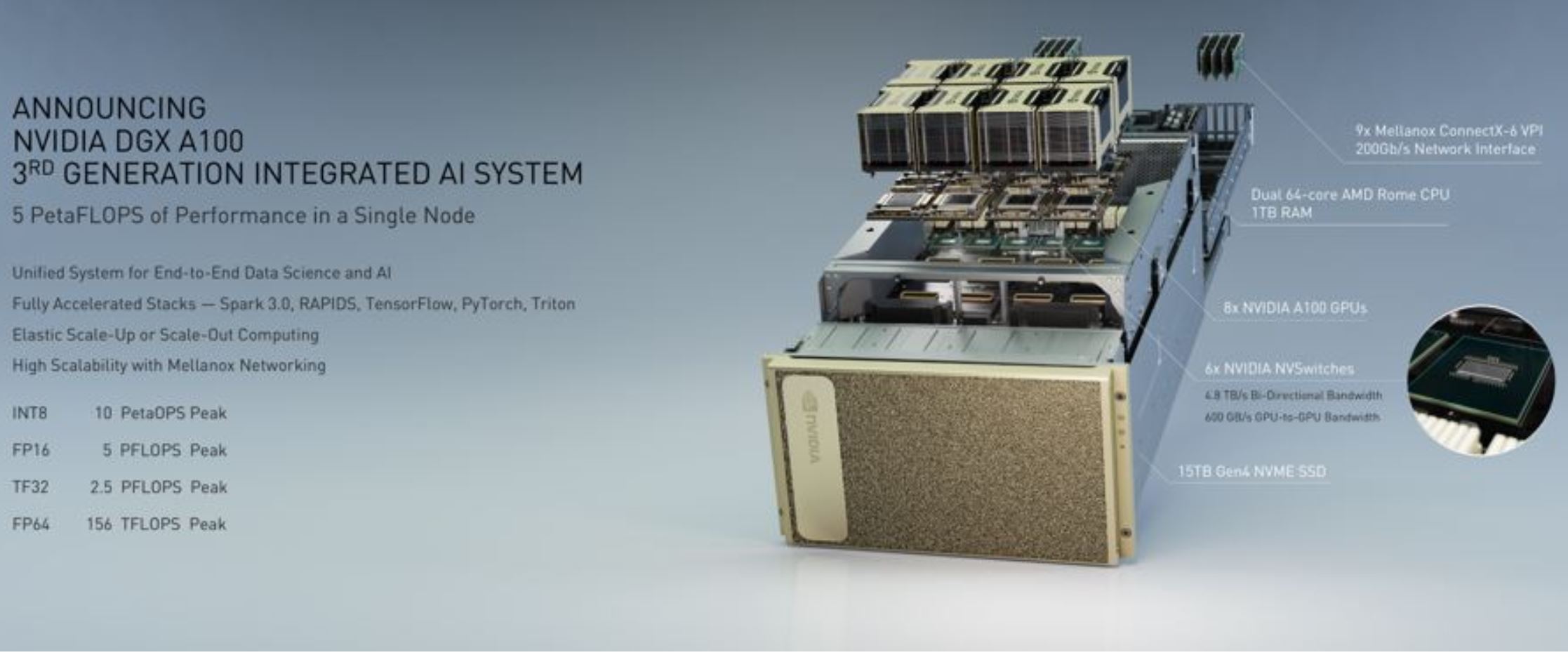

An NVIDIA A100 GPU server such as the DGX A100 combines eight A100 GPUs with two AMD EPYC CPUs and PCIe Gen 4. NVIDIA's Ampere-based A100 solutions are scalable server platforms built around the NVIDIA A100 Tensor Core GPU. Alongside the NVIDIA Ampere architecture and the A100 GPU, NVIDIA also announced the new DGX A100 server. NVIDIA has since added A100 PCIe GPUs to boost its AI, data science, and HPC server offerings.

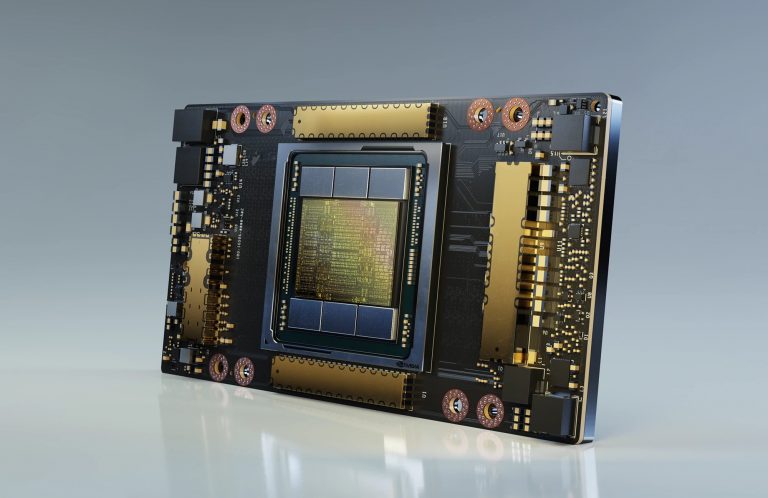

Following the launch of its Ampere architecture with the A100 Tensor Core GPU, which is designed specifically for data center workloads, NVIDIA is now bringing the GPU to a plug-in PCIe form factor dubbed the A100 PCIe. Previously, the only way vendors could deploy servers with the A100 GPU was either via … NVIDIA HGX A100 4-GPU delivers nearly 80 teraflops of FP64 for the most demanding HPC workloads. The DGX A100 server.

Having entered the supply chain for Google Cloud's Compute Engine platform, NVIDIA has recently received more orders for its A100 GPU from the US-based cloud computing giant for its … With the GPU baseboard as a building block, NVIDIA's server system partners customize the rest of the server platform to specific business needs. The NVIDIA A100 Tensor Core GPU delivers acceleration at every scale for AI, data analytics, and high-performance computing (HPC), targeting the world's toughest computing challenges. NVIDIA HGX A100 8-GPU provides 5 petaflops of FP16 deep learning compute, while the 16-GPU HGX A100 delivers 10 petaflops, which NVIDIA describes as the world's most powerful accelerated scale-up server platform for AI and HPC.
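As a quick consistency check on the headline figures quoted in this article, the sketch below divides each aggregate number by its GPU count. It uses only the totals stated here (nearly 80 TFLOPS FP64 for HGX A100 4-GPU, 5 PFLOPS FP16 for 8-GPU, 10 PFLOPS FP16 for 16-GPU); the helper `per_gpu_tflops` and the `PLATFORMS` table are hypothetical names for illustration, and since the inputs are marketing totals the per-GPU results are approximate, not measured.

```python
# Aggregate throughput figures as quoted in this article (marketing totals,
# not benchmark results). 1 petaflop = 1,000 teraflops.
PLATFORMS = {
    # name: (gpu_count, aggregate TFLOPS, precision)
    "HGX A100 4-GPU": (4, 80.0, "FP64"),        # "nearly 80 teraflops"
    "HGX A100 8-GPU": (8, 5_000.0, "FP16"),     # 5 petaflops
    "HGX A100 16-GPU": (16, 10_000.0, "FP16"),  # 10 petaflops
}


def per_gpu_tflops(gpu_count: int, aggregate_tflops: float) -> float:
    """Hypothetical helper: split an aggregate figure evenly across GPUs."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return aggregate_tflops / gpu_count


if __name__ == "__main__":
    for name, (gpus, total, precision) in PLATFORMS.items():
        share = per_gpu_tflops(gpus, total)
        print(f"{name}: ~{share:.0f} TFLOPS {precision} per GPU")
```

Both FP16 configurations work out to about 625 TFLOPS per GPU, so the 16-GPU figure is simply the 8-GPU figure doubled; that reflects an assumption of linear scaling in the quoted totals rather than a measured result.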

Supermicro's new 4U GPU system features the NVIDIA HGX A100 8-GPU baseboard, up to six NVMe U.2 and two NVMe M.2 drives, and ten PCIe 4.0 x16 I/O slots with Supermicro's AIOM support, improving 8-GPU communication and data flow between systems through technologies such as NVIDIA NVLink and NVSwitch, GPUDirect RDMA, GPUDirect Storage, and NVMe-oF on InfiniBand. NVIDIA partners offer a wide array of servers for diverse AI, HPC, and accelerated computing workloads. The A100 PCIe GPU, on the other hand, offers lower performance and scalability, since NVLink can link only two of these GPUs, but its advantage is much wider server support, making it much … CPU subsystem, networking, storage, power, form factor, and node management.
