NVIDIA A100 BERT

Specifications are one thing; empirical performance is another.

The V100 used is a single V100 SXM2. A100 GPU HPC application speedups compared to NVIDIA Tesla V100. TensorRT (TRT) 7.1, FP16 precision, batch size 256, A100 with 7 MIG instances of 1g.5gb. Lambda customers are starting to ask about the new NVIDIA A100 GPU and our Hyperplane A100 server.
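A MIG profile name such as `1g.5gb` encodes two things: the number of compute slices (`1g`) and the memory per instance (`5gb`). The sketch below parses that naming pattern and totals the resources for the "7 MIG instances of 1g.5gb" configuration above. The helper names `parse_mig_profile` and `partition_totals` are hypothetical, written for illustration only; they are not part of any NVIDIA tool.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class MigProfile:
    compute_slices: int  # the "Ng" part of the profile name
    memory_gb: int       # the "Mgb" part of the profile name


def parse_mig_profile(name: str) -> MigProfile:
    """Parse a MIG profile name such as '1g.5gb' (hypothetical helper)."""
    match = re.fullmatch(r"(\d+)g\.(\d+)gb", name.strip().lower())
    if match is None:
        raise ValueError(f"not a MIG profile name: {name!r}")
    return MigProfile(int(match.group(1)), int(match.group(2)))


def partition_totals(name: str, instances: int) -> tuple[int, int]:
    """Return (total compute slices, total memory in GB) for `instances` copies."""
    profile = parse_mig_profile(name)
    return profile.compute_slices * instances, profile.memory_gb * instances


if __name__ == "__main__":
    slices, memory = partition_totals("1g.5gb", 7)
    print(f"7 x 1g.5gb -> {slices} compute slices, {memory} GB")
```

Run as a script, this prints `7 x 1g.5gb -> 7 compute slices, 35 GB`: each of the seven instances gets its own slice of compute and 5 GB of memory.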

Benchmark table columns: Framework, Network, Throughput, GPU, Server, Container, Precision, Batch Size, Dataset, GPU Version. Together with third-generation NVIDIA NVLink, NVIDIA NVSwitch, PCIe Gen4, Mellanox InfiniBand, and the NVIDIA Magnum IO software SDK, it is possible to scale to thousands of A100 GPUs. State-of-the-art language modeling using Megatron on the NVIDIA A100 GPU. NVIDIA TensorRT (TRT) 7.1, INT8 precision, batch size 256, V100.

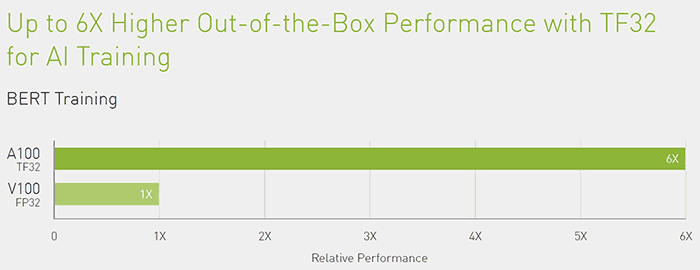

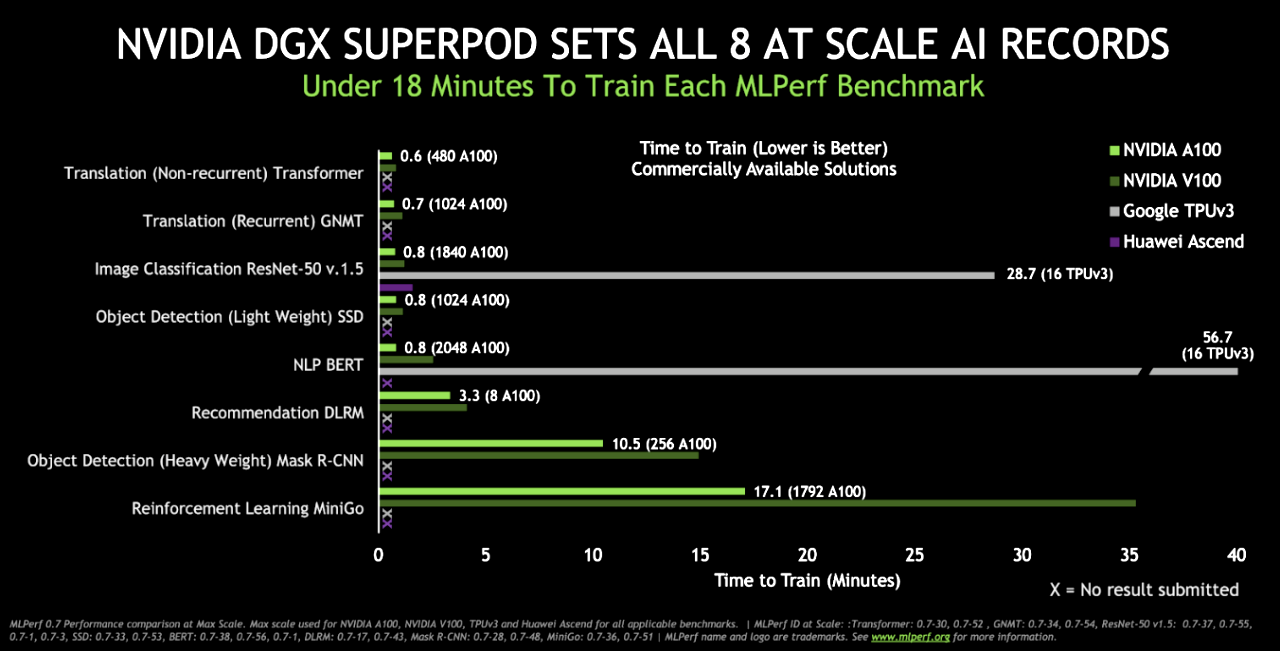

The A100 will likely see large gains on models like GPT-2, GPT-3, and BERT using FP16 Tensor Cores. The A100 used is a single A100 SXM4. This means that large AI models such as BERT can be trained in just xx minutes on a cluster of xx A100s, delivering unprecedented performance and scalability.

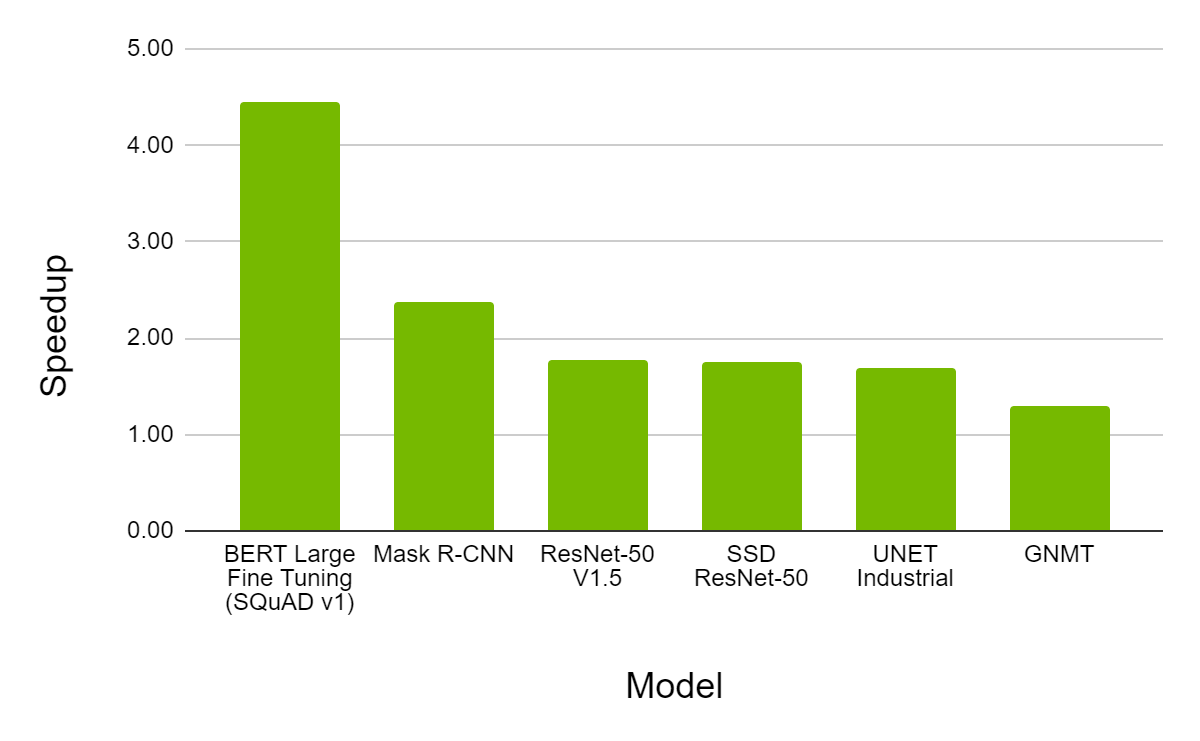

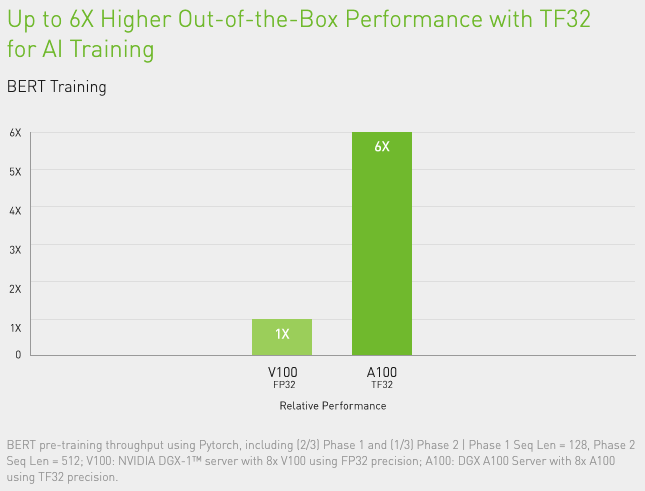

For language model training, we expect the A100 to be approximately 1.95x to 2.5x faster than the V100 when using FP16 Tensor Cores. To benchmark and compare the V100 with the A100, our tests followed NVIDIA's Deep Learning Examples library. Unified AI acceleration for BERT-Large training and inference. NVIDIA A100 BERT training benchmarks.
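To see what a 1.95x to 2.5x speedup means in wall-clock terms, divide a V100 training time by each end of the range. The sketch below does that arithmetic; the 10-hour V100 baseline in the usage line is a made-up figure for illustration, not a measured result.

```python
def a100_time_range(v100_hours: float, low: float = 1.95, high: float = 2.5) -> tuple[float, float]:
    """Estimate (best-case, worst-case) A100 training time from a V100 baseline.

    `low` and `high` are the expected speedup bounds for FP16 Tensor Core
    language-model training; a larger speedup gives a shorter time.
    """
    if v100_hours <= 0 or low <= 0 or high < low:
        raise ValueError("need a positive baseline and 0 < low <= high")
    return v100_hours / high, v100_hours / low


if __name__ == "__main__":
    # Hypothetical baseline: a BERT run that takes 10 hours on one V100.
    best, worst = a100_time_range(10.0)
    print(f"A100 estimate: {best:.2f} h to {worst:.2f} h")
```

For the hypothetical 10-hour baseline this prints `A100 estimate: 4.00 h to 5.13 h`.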

Using sparsity, an A100 GPU can run BERT (Bidirectional Encoder Representations from Transformers), the state-of-the-art model for natural language processing, 50% faster than with dense math. The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world's toughest computing challenges. The workloads tested were BERT-Large for language modeling, Jasper for speech recognition, Mask R-CNN for image segmentation, and GNMT for translation.
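The sparsity that A100 Tensor Cores accelerate is fine-grained 2:4 structured sparsity: in every group of four consecutive weights, at most two are nonzero. The sketch below shows only the pruning pattern, keeping the two largest-magnitude weights per group. It is a minimal illustration under that assumption; a real workflow typically fine-tunes the network after pruning to recover accuracy, and the speedup itself comes from the hardware, not from this function.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in every group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    dense = [0.1, -0.9, 0.4, 0.05, 2.0, -0.3, 0.0, 1.5]
    print(prune_2_of_4(dense))
```

For the sample row this prints `[0.0, -0.9, 0.4, 0.0, 2.0, 0.0, 0.0, 1.5]`: each group of four keeps exactly two entries.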

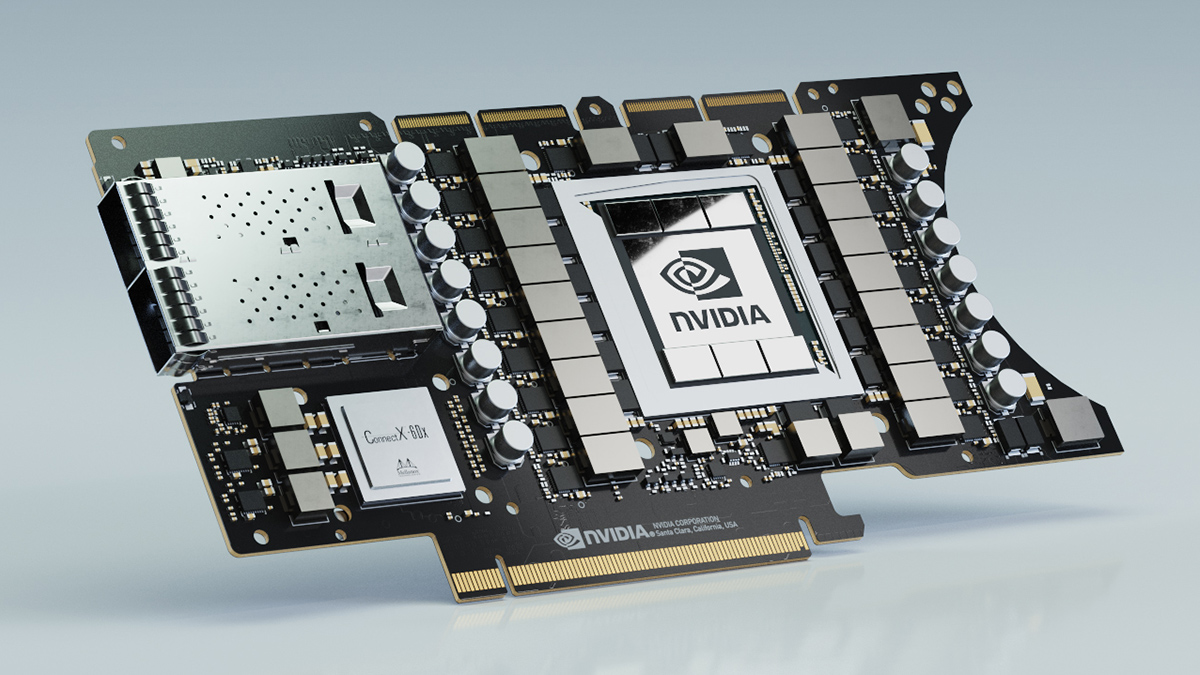

NVIDIA A100 GPU on the new SXM4 module. As the engine of the NVIDIA data center platform, A100 can efficiently scale to thousands of GPUs or, with NVIDIA Multi-Instance GPU (MIG) technology, be partitioned into seven GPU instances. Pre-production TRT, batch size 94, INT8 precision with sparsity. By Mohammad Shoeybi, Mostofa Patwary, Raul Puri, Patrick LeGresley, Jared Casper, and Bryan Catanzaro, May 14, 2020.

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale.